Understanding the Sources of AI Bias

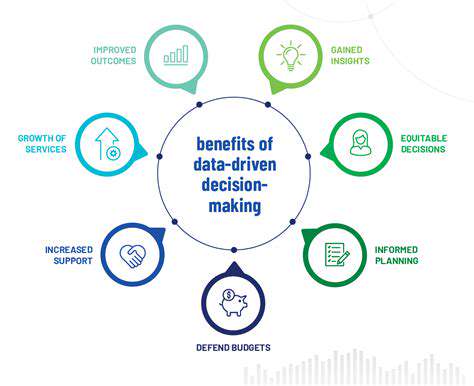

AI systems, trained on vast datasets, can inadvertently inherit and amplify existing societal biases. These biases can stem from various sources, including the data itself, the algorithms used to train the models, and even the developers' own unconscious biases. For example, if a facial recognition system is trained predominantly on images of light-skinned individuals, it may perform less accurately on darker-skinned individuals. Understanding these diverse sources is crucial for effective detection and mitigation, because bias rarely has a single culprit; it usually arises from a complex interplay of factors.

Furthermore, biases can manifest in different ways, impacting various aspects of the AI system. Consider a loan application system trained on historical decisions in which individuals from certain demographic groups were disproportionately denied loans. A model that learns from those records can reproduce the same pattern, perpetuating existing inequalities and leading to discriminatory outcomes. Recognizing these manifestations and their potential impact is paramount in the development of robust solutions.

Developing Effective Detection Methods

Identifying bias in AI systems requires a multi-pronged approach that moves beyond superficial assessments. One part is statistical analysis that scrutinizes the data for patterns indicative of bias. Another is measuring how the system's performance differs across demographic groups. The assessment should also extend to the model's predictions and outcomes, looking for discrepancies embedded within the decision-making process.
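As a rough illustration of measuring performance disparities across groups, the sketch below computes accuracy separately for each group and reports the largest gap. The function names and the toy data are hypothetical, and accuracy is only one of several metrics that could be compared this way.

```python
from collections import defaultdict

def accuracy_by_group(y_true, y_pred, groups):
    """Return {group: accuracy} for parallel lists of labels, predictions, and group tags."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}

def max_accuracy_gap(per_group):
    """Largest difference in accuracy between any two groups."""
    values = list(per_group.values())
    return max(values) - min(values)

# Toy data (hypothetical): group "a" is classified perfectly, group "b" is not.
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 0, 0, 0, 1, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

per_group = accuracy_by_group(y_true, y_pred, groups)
gap = max_accuracy_gap(per_group)
print(per_group, gap)
```

A large gap does not prove unfair treatment by itself, but it points to where a closer look at the data and the model is warranted.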

Beyond statistical analysis, qualitative methods, such as user feedback and expert reviews, can also provide valuable insights. Gathering feedback from diverse groups can expose potential blind spots in the system. Expert reviews, drawing on domain expertise and ethical considerations, can help identify biases that might be missed by purely quantitative approaches. It is important to remember that multiple detection methods are necessary to provide a comprehensive understanding of the potential biases present in an AI system.

Implementing Mitigation Strategies

Once bias is detected, implementing effective mitigation strategies is crucial. This might involve re-training the AI model on more diverse and representative datasets. For example, incorporating a wider range of images into the facial recognition system's training data can improve its performance across different demographics. Furthermore, careful consideration should be given to the algorithm design itself, looking for ways to mitigate bias through algorithmic modifications and adjustments.
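When collecting more representative data is not immediately possible, one commonly used stopgap is to reweight the existing samples so that each group carries equal total weight during training. This is a different technique from the re-training on new data described above, and the sketch below is only a minimal illustration; the function name and group labels are assumptions.

```python
from collections import Counter

def balanced_group_weights(groups):
    """Weight each sample by N / (k * n_g) so every group has the same total weight.

    N is the number of samples, k the number of groups, n_g the size of the sample's group.
    """
    counts = Counter(groups)
    n_samples = len(groups)
    n_groups = len(counts)
    return [n_samples / (n_groups * counts[g]) for g in groups]

# Hypothetical training set in which group_b is heavily under-represented.
groups = ["group_a"] * 8 + ["group_b"] * 2
weights = balanced_group_weights(groups)

total_a = sum(w for w, g in zip(weights, groups) if g == "group_a")
total_b = sum(w for w, g in zip(weights, groups) if g == "group_b")
print(total_a, total_b)
```

Many training libraries accept per-sample weights of this kind. Reweighting cannot add information that the under-represented group's few samples do not contain, so it complements better data collection rather than replacing it.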

Addressing bias also requires a commitment to ethical guidelines and principles throughout the entire AI lifecycle. This includes establishing clear guidelines for data collection, model development, and deployment. Continuous monitoring and evaluation of the AI system's performance after deployment are also critical to identify and address any emerging biases that may arise over time. This proactive approach is essential for building trustworthy and equitable AI systems.

The Role of Human Oversight and Transparency

Ultimately, human oversight and transparency are essential components in mitigating AI bias. Human review of the AI system's decisions can help identify and correct any problematic outputs. Transparency in the AI system's decision-making process can help build trust and allow for scrutiny of the underlying logic and algorithms. Furthermore, clear communication of the limitations and potential biases of the system to users is paramount to responsible deployment and use.

Establishing clear lines of accountability and responsibility within the development and deployment process is also vital. This involves designating individuals or teams responsible for monitoring and addressing AI-related biases. Combined with human review and transparency, this structure helps ensure that AI systems are used in a responsible and equitable manner and reduces the potential for harm.

Statistical Methods for Bias Detection

Understanding Bias in Data

Statistical methods are crucial for identifying and mitigating bias in data. Bias can manifest in various forms, from subtle sampling errors to overt discriminatory practices. Understanding these biases is essential for drawing accurate and reliable conclusions from data analysis. Recognizing the potential for bias in data collection and analysis is the first step in ensuring fairness and objectivity in research and decision-making.

Bias can stem from a multitude of sources. It can creep into data through flawed sampling techniques, leading to skewed representation of the population. Furthermore, the very design of a study can unintentionally introduce bias. Awareness of these potential sources of bias is paramount to evaluating the trustworthiness of any statistical analysis.

Sampling Techniques and Bias

Proper sampling is paramount in statistical analysis. Random sampling techniques are vital for minimizing bias and ensuring that the sample accurately reflects the population of interest. Employing stratified or cluster sampling can further refine the accuracy of the sample, especially when dealing with diverse populations. These techniques help ensure generalizability of findings beyond the specific sample studied.
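A minimal sketch of proportional stratified sampling follows: the population is split into strata and the same fraction is drawn at random from each one, so small groups are not left out by chance. The record layout, the `region` field, and the function name are hypothetical.

```python
import random
from collections import defaultdict

def stratified_sample(records, key, fraction, seed=0):
    """Draw the same fraction from each stratum, where key(record) names the stratum."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for record in records:
        strata[key(record)].append(record)
    sample = []
    for members in strata.values():
        # Take at least one record per stratum so no group disappears entirely.
        k = max(1, round(len(members) * fraction))
        sample.extend(rng.sample(members, k))
    return sample

# Hypothetical population: 80 urban and 20 rural records.
population = [{"id": i, "region": "urban" if i < 80 else "rural"} for i in range(100)]
sample = stratified_sample(population, key=lambda r: r["region"], fraction=0.1)

rural_in_sample = sum(1 for r in sample if r["region"] == "rural")
print(len(sample), rural_in_sample)
```

With simple random sampling, a 10-record draw could easily contain no rural records at all; stratifying guarantees the sample keeps the population's 80/20 split.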

Poor sampling methods, such as convenience sampling or purposive sampling, can introduce bias. These methods might overrepresent or underrepresent specific groups within the population, leading to inaccurate conclusions. Understanding the limitations of various sampling methods is critical for interpreting the results of any statistical analysis properly.

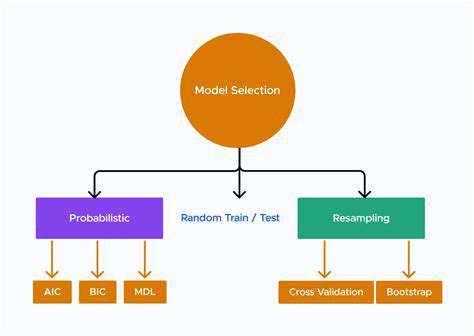

Regression Analysis and Potential Biases

Regression analysis is a powerful tool for modeling relationships between variables. However, it's essential to be cognizant of potential biases that can arise in this process. Omitted variable bias can significantly distort the estimated relationship between variables. This occurs when a crucial variable that influences both the dependent and independent variables is not included in the model.
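Omitted variable bias can be seen in a small simulation. In the sketch below, a variable `z` influences both `x` and `y`; the data-generating coefficients (2 for `x`, 3 for `z`) are arbitrary assumptions chosen for illustration. Regressing `y` on `x` alone should give a slope near 2 + 3 · cov(x, z) / var(x) = 3.5 in expectation, while including `z` should recover a value near the true coefficient of 2.

```python
import random

def cov(a, b):
    """Population covariance of two equal-length lists."""
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    return sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b)) / len(a)

def slope_omitting_z(x, y):
    """OLS slope of y on x alone."""
    return cov(x, y) / cov(x, x)

def slope_controlling_for_z(x, z, y):
    """OLS coefficient on x when z is also in the model (solves the 2x2 normal equations)."""
    sxx, szz, sxz = cov(x, x), cov(z, z), cov(x, z)
    sxy, szy = cov(x, y), cov(z, y)
    return (sxy * szz - sxz * szy) / (sxx * szz - sxz ** 2)

rng = random.Random(0)
n = 20000
z = [rng.gauss(0, 1) for _ in range(n)]           # confounder
x = [zi + rng.gauss(0, 1) for zi in z]            # x depends on z
y = [2 * xi + 3 * zi + rng.gauss(0, 1) for xi, zi in zip(x, z)]  # y depends on both

biased = slope_omitting_z(x, y)
adjusted = slope_controlling_for_z(x, z, y)
print(round(biased, 2), round(adjusted, 2))
```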

Multicollinearity, a high correlation among independent variables, is a related concern. It does not bias the coefficient estimates by itself, but it inflates their standard errors, which makes individual coefficients unstable and hard to interpret. Understanding these issues is crucial for interpreting regression results accurately and drawing meaningful conclusions.
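One common diagnostic for multicollinearity is the variance inflation factor (VIF). For the special case of exactly two predictors it reduces to 1 / (1 - r²), where r is their Pearson correlation. The sketch below covers only that two-predictor case, and the data values are made up for illustration.

```python
import math

def pearson_r(a, b):
    """Pearson correlation between two equal-length lists."""
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    num = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    den = math.sqrt(sum((x - mean_a) ** 2 for x in a) * sum((y - mean_b) ** 2 for y in b))
    return num / den

def vif_two_predictors(a, b):
    """VIF when the model has exactly two predictors: 1 / (1 - r^2)."""
    r = pearson_r(a, b)
    return 1.0 / (1.0 - r ** 2)

x1 = [1, 2, 3, 4, 5, 6]
x2 = [1.1, 1.9, 3.2, 3.9, 5.1, 6.0]   # nearly a copy of x1
x3 = [3, 1, 4, 1, 5, 9]               # only loosely related to x1

vif_high = vif_two_predictors(x1, x2)
vif_low = vif_two_predictors(x1, x3)
print(round(vif_high, 1), round(vif_low, 2))
```

With more than two predictors, each VIF comes from regressing one predictor on all the others, which needs a full least-squares fit rather than a single correlation.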

Data Cleaning and Bias Reduction

Data cleaning plays a vital role in mitigating bias in statistical analyses. Identifying and handling missing data is critical because missing values can introduce bias, potentially leading to skewed results. Careful consideration of imputation methods for missing values is essential for maintaining the integrity and reliability of the analysis.
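Before choosing an imputation method, it can help to check whether values are missing at similar rates across groups. The sketch below does that for a single field; the record layout and the `income` and `group` field names are hypothetical.

```python
def missing_rate_by_group(records, field, group_field):
    """Return {group: share of records where `field` is None or absent}."""
    totals, missing = {}, {}
    for rec in records:
        g = rec[group_field]
        totals[g] = totals.get(g, 0) + 1
        missing[g] = missing.get(g, 0) + int(rec.get(field) is None)
    return {g: missing[g] / totals[g] for g in totals}

# Hypothetical records: income is missing far more often for group "b".
records = [
    {"group": "a", "income": 52000}, {"group": "a", "income": 48000},
    {"group": "a", "income": None},  {"group": "a", "income": 61000},
    {"group": "b", "income": None},  {"group": "b", "income": None},
    {"group": "b", "income": 39000}, {"group": "b", "income": None},
]

rates = missing_rate_by_group(records, "income", "group")
print(rates)
```

When the rates differ this much, dropping incomplete rows would shrink one group disproportionately, and filling every gap with a single overall mean would blur real differences between groups.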

Outliers can also skew results, so identifying and addressing them is important. Some outliers indicate genuine anomalies, while others reflect errors in the data collection process. Distinguishing between the two before deciding whether to keep, correct, or remove a value helps maintain the accuracy of the results.
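A simple way to flag candidate outliers for review is the interquartile range (IQR) rule, sketched below. The 1.5 multiplier is a widely used convention rather than a fixed standard, and the sample values are invented.

```python
import statistics

def iqr_outliers(values, multiplier=1.5):
    """Return values lying more than multiplier * IQR outside the quartiles."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - multiplier * iqr, q3 + multiplier * iqr
    return [v for v in values if v < low or v > high]

# Hypothetical measurements with one suspicious reading.
readings = [10, 12, 11, 13, 12, 11, 95]
outliers = iqr_outliers(readings)
print(outliers)
```

The rule only flags values; deciding whether a flagged value is an error or a genuine anomaly still requires looking at how the data were collected.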

Statistical Significance vs. Practical Significance

Statistical significance, while important, doesn't always equate to practical significance. A statistically significant result might not necessarily have a meaningful impact in the real world. This distinction is often overlooked in data analysis, leading to potentially misleading conclusions.

Consideration of effect size and practical implications is essential for a comprehensive understanding of the findings. Statistical significance should be interpreted in conjunction with the magnitude of the effect being observed.
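The gap between the two kinds of significance can be shown with summary statistics alone. The sketch below computes Cohen's d (a standardized effect size) and a two-sided p-value from a large-sample z-test; all the numbers are hypothetical.

```python
import math

def cohens_d(mean_a, mean_b, sd_a, sd_b, n_a, n_b):
    """Standardized mean difference using the pooled standard deviation."""
    pooled_var = ((n_a - 1) * sd_a ** 2 + (n_b - 1) * sd_b ** 2) / (n_a + n_b - 2)
    return (mean_b - mean_a) / math.sqrt(pooled_var)

def two_sided_z_p_value(mean_a, mean_b, sd_a, sd_b, n_a, n_b):
    """Two-sided p-value for a difference in means (normal approximation)."""
    se = math.sqrt(sd_a ** 2 / n_a + sd_b ** 2 / n_b)
    z = (mean_b - mean_a) / se
    return math.erfc(abs(z) / math.sqrt(2))

# Hypothetical study: a half-point difference on a scale with SD 10, 50,000 per group.
stats = dict(mean_a=100.0, mean_b=100.5, sd_a=10.0, sd_b=10.0, n_a=50000, n_b=50000)

d = cohens_d(**stats)
p = two_sided_z_p_value(**stats)
print(round(d, 3), p)
```

Here the p-value is far below any conventional threshold, yet the effect size is about 0.05 standard deviations, which most applications would treat as negligible. Very large samples make even tiny differences statistically detectable.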

Ethical Considerations in Statistical Analysis

Ethical considerations are paramount in any statistical analysis. Researchers must be mindful of potential biases and strive to minimize their impact on the results. Ensuring that data collection and analysis are conducted with integrity and transparency is crucial.

Transparency in methodology and data handling is essential for building trust and ensuring the reliability of the findings. Openness and honesty about the limitations and potential biases in the study are vital for responsible data interpretation. This promotes confidence in the results and facilitates their appropriate application.

Advanced Statistical Techniques for Bias Detection

Advanced statistical techniques can provide more sophisticated methods for detecting and mitigating bias. Machine learning algorithms, for instance, can be used to identify patterns and potential biases in data that might be missed by traditional methods. These algorithms can analyze large datasets and uncover hidden correlations and relationships.

Techniques like propensity score matching can also be employed to adjust for confounding factors and reduce bias in observational studies. These advanced methods can lead to more precise and nuanced insights into complex phenomena.
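The matching step of propensity score matching can be sketched as follows. The code assumes the propensity scores have already been estimated elsewhere (for example with a logistic regression), and it uses greedy one-to-one nearest-neighbor matching with a caliper, which is only one of several matching schemes. The unit IDs, scores, and caliper value are hypothetical.

```python
def match_on_propensity(treated, control, caliper=0.1):
    """Greedy 1:1 nearest-neighbor matching without replacement.

    treated, control: lists of (unit_id, propensity_score).
    Pairs whose scores differ by more than `caliper` are not matched.
    """
    available = dict(control)
    pairs = []
    for unit_id, score in sorted(treated, key=lambda t: t[1]):
        if not available:
            break
        best = min(available, key=lambda c: abs(available[c] - score))
        if abs(available[best] - score) <= caliper:
            pairs.append((unit_id, best))
            del available[best]  # each control unit is used at most once
    return pairs

treated = [("t1", 0.30), ("t2", 0.62), ("t3", 0.95)]
control = [("c1", 0.28), ("c2", 0.60), ("c3", 0.10), ("c4", 0.65)]

pairs = match_on_propensity(treated, control)
print(pairs)
```

Unit `t3` stays unmatched because no control unit has a comparable score. Dropping such units narrows the comparison to cases where treated and control units are actually similar.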

Algorithmic Auditing and Explainability

Algorithmic Bias Detection

Identifying and mitigating bias in algorithms is crucial for ensuring fairness and equitable outcomes. Algorithmic bias often stems from the data used to train the model, reflecting societal biases present in that data. This bias can manifest in various ways, from discriminatory loan decisions to skewed hiring recommendations. Careful analysis of the training data and the algorithm's output is essential to detect and correct these biases, and correcting them often requires iterative adjustments to the data, the model, or both.

Methods for detecting bias include statistical analysis of the data, comparing model performance across different demographic groups, and using fairness-aware evaluation metrics. These techniques help pinpoint areas where the algorithm exhibits disparities and guide the development of more equitable solutions.
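Two fairness-aware metrics that are often reported are the demographic parity difference (the gap in positive-prediction rates between groups) and the equal opportunity difference (the gap in true positive rates). The sketch below computes both for binary predictions; the helper names and toy data are assumptions.

```python
def selection_rate(y_pred, groups, group):
    """Share of positive predictions within one group."""
    preds = [p for p, g in zip(y_pred, groups) if g == group]
    return sum(preds) / len(preds)

def true_positive_rate(y_true, y_pred, groups, group):
    """Share of actual positives in one group that the model predicted as positive."""
    hits = [p for t, p, g in zip(y_true, y_pred, groups) if g == group and t == 1]
    return sum(hits) / len(hits)

# Toy data (hypothetical): both groups have the same share of actual positives.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

parity_diff = selection_rate(y_pred, groups, "a") - selection_rate(y_pred, groups, "b")
opportunity_diff = (true_positive_rate(y_true, y_pred, groups, "a")
                    - true_positive_rate(y_true, y_pred, groups, "b"))
print(parity_diff, opportunity_diff)
```

The two metrics answer different questions and can disagree, so which one matters depends on the application.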

Explainable AI (XAI) Techniques

Explainable AI (XAI) methods aim to provide insights into the decision-making process of complex algorithms. This transparency is essential for building trust and understanding the rationale behind algorithmic outputs. XAI techniques range from simple feature importance analysis to more sophisticated methods like rule-based explanations and visualization tools. These techniques help reveal the contributing factors to a specific output, which helps to identify potential errors or biases in the model.
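Permutation importance is one simple, model-agnostic form of feature importance analysis: shuffle one feature's values and measure how much accuracy drops. The sketch below applies it to a stand-in model; the model, the data, and the function names are all hypothetical.

```python
import random

def accuracy(model, rows, labels):
    """Fraction of rows for which the model's prediction equals the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)

def permutation_importance(model, rows, labels, feature_index, seed=0):
    """Drop in accuracy after shuffling one feature column across rows."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    column = [r[feature_index] for r in rows]
    rng.shuffle(column)
    shuffled = [r[:feature_index] + (v,) + r[feature_index + 1:]
                for r, v in zip(rows, column)]
    return baseline - accuracy(model, shuffled, labels)

def model(row):
    """Stand-in model that looks only at feature 0."""
    return int(row[0] > 0.5)

rng = random.Random(42)
rows = [(rng.random(), rng.random()) for _ in range(200)]
labels = [model(r) for r in rows]

importance_0 = permutation_importance(model, rows, labels, feature_index=0)
importance_1 = permutation_importance(model, rows, labels, feature_index=1)
print(importance_0, importance_1)
```

In a bias audit, a large importance for a protected attribute, or for a feature that acts as a proxy for one, is a signal to investigate further.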

One crucial aspect of XAI is to ensure that the explanations are understandable to non-experts, such as domain specialists or regulatory bodies. Clear and concise explanations are vital for effective communication and decision-making.

Auditing Frameworks and Standards

Establishing robust auditing frameworks and standards is vital for ensuring the reliability and trustworthiness of algorithmic systems. These frameworks should encompass the entire lifecycle of an algorithm, from data collection and preprocessing to model training, deployment, and monitoring. A comprehensive framework should include specific guidelines for data quality assessments, bias detection, and explainability. This approach fosters consistency and helps organizations adopt best practices for algorithmic governance.

Clear documentation and reporting mechanisms are also necessary components of these frameworks. This enables stakeholders to track the performance and impact of algorithms over time and identify potential issues proactively.

Regulatory Compliance and Ethical Considerations

Algorithmic auditing is becoming increasingly important due to growing regulatory requirements and ethical concerns surrounding automated decision-making. Depending on the jurisdiction and sector, organizations may be required to demonstrate compliance with regulations related to data privacy, fairness, and transparency. Meeting these requirements necessitates a rigorous approach to auditing algorithms and ensuring their responsible use. Moreover, ethical considerations extend beyond regulatory compliance, encompassing the potential societal impacts of algorithmic decisions.

Careful consideration of potential harms and unintended consequences is crucial. Ethical considerations should be integrated into the design and development process of algorithms from the outset.