Understanding Algorithmic Bias

Algorithmic bias, a growing concern in machine learning systems, often arises when the training data encodes historical prejudices. These biases frequently mirror societal inequalities, so algorithms trained on such data reinforce discriminatory patterns in their outputs. From mortgage approvals to job candidate screening, biased datasets can produce unfair outcomes across critical sectors. Understanding how these biases originate is the first step toward building fairer artificial intelligence.

Many developers overlook the subtle ways historical injustices become embedded in algorithmic decision-making. Even when designers have neutral intentions, systems trained on skewed data tend to reproduce those imbalances. This is why rigorous bias auditing should precede any large-scale AI deployment.
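One common starting point for a bias audit is to compare how often each group receives a favorable decision. The sketch below is a minimal illustration, assuming decisions are available as hypothetical (group, approved) pairs; the 0.8 cutoff echoes the "four-fifths rule" from US employment guidance and is used here only as an illustrative threshold, not a legal or universal standard.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of positive decisions for each group.

    `decisions` is an iterable of (group, approved) pairs.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        positives[group] += int(approved)
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group was selected at all, so the rates are equal
    return min(rates.values()) / highest


# Hypothetical audit data: 100 applicants from each of two groups, "A" and "B".
decisions = [("A", True)] * 60 + [("A", False)] * 40 + [("B", True)] * 30 + [("B", False)] * 70
rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < 0.8  # illustrative four-fifths cutoff
print(round(ratio, 2))  # 0.5
```

A single ratio cannot prove a system is fair, but a low value like this one is a clear signal that the decision pipeline deserves closer inspection before deployment.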

The Unequal Burden of Biased Systems

Marginalized communities frequently bear the brunt of flawed algorithms. Racial minorities, women, and economically disadvantaged groups face disproportionate barriers when automated systems learn from prejudiced data patterns. A loan approval algorithm might deny qualified applicants based on zip code data that correlates with race, perpetuating financial exclusion even if race is never an explicit input.
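A feature such as zip code acts as a proxy when it reveals group membership. One rough way to check this, sketched here under simplifying assumptions, is to guess each record's group from the majority group of its zip code and compare that accuracy with always guessing the overall majority group. The zip codes and group labels below are hypothetical.

```python
from collections import Counter, defaultdict


def proxy_strength(records):
    """Estimate how well a feature predicts a protected attribute.

    `records` is a list of (feature_value, group) pairs. Returns
    (proxy_accuracy, baseline): the accuracy of guessing each record's group
    from the majority group of its feature value, and the accuracy of always
    guessing the overall majority group.
    """
    by_value = defaultdict(Counter)
    for value, group in records:
        by_value[value][group] += 1
    # For each feature value, the records matching its majority group are "correct" guesses.
    correct = sum(counts.most_common(1)[0][1] for counts in by_value.values())
    overall = Counter(group for _, group in records)
    baseline = overall.most_common(1)[0][1] / len(records)
    return correct / len(records), baseline


# Hypothetical data: two zip codes that are heavily segregated by group.
records = (
    [("11111", "X")] * 45 + [("11111", "Y")] * 5
    + [("22222", "X")] * 5 + [("22222", "Y")] * 45
)
accuracy, baseline = proxy_strength(records)
print(accuracy, baseline)  # 0.9 0.5
```

Here zip code alone recovers group membership 90% of the time versus a 50% baseline, so dropping the protected attribute from the model would not remove its influence.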

These issues extend far beyond banking. Risk-assessment tools used in court sentencing, medical diagnostic systems, and even educational software can all encode biases that disadvantage vulnerable populations. Because the harms differ from one domain to the next, recognizing them in each setting allows mitigation to be targeted where it matters most.

How Data Gathering Perpetuates Bias

Training data, the foundation of any machine learning system, often carries the seeds of bias. Historical records, social media activity, and even corporate customer demographics frequently reflect societal prejudices. Without deliberate intervention, models trained on these datasets will produce skewed outputs.

Data collection methods themselves can introduce distortion. Survey samples might overrepresent urban populations, while sensor data could exclude people with certain physical characteristics. Thoughtful dataset construction requires awareness of these potential pitfalls.
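A simple guard against sampling distortion is to compare each category's share of the collected sample with its share of a reference population. The sketch below assumes such reference shares (for example, census proportions) are available; the urban/rural figures are hypothetical.

```python
from collections import Counter


def representation_ratios(sample, reference_shares):
    """Compare each category's share of the sample with its reference share.

    A ratio above 1 means the category is over-represented in the sample;
    below 1 means it is under-represented.
    """
    counts = Counter(sample)
    n = len(sample)
    return {
        category: (counts[category] / n) / share
        for category, share in reference_shares.items()
    }


# Hypothetical survey: 80% urban respondents against an assumed 60% urban population.
sample = ["urban"] * 80 + ["rural"] * 20
ratios = representation_ratios(sample, {"urban": 0.6, "rural": 0.4})
for category, r in ratios.items():
    print(category, round(r, 2))  # urban 1.33, then rural 0.5
```

In this example rural respondents appear at half their expected rate, which a model trained on the survey would silently inherit.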

Creating Fairer Algorithmic Systems

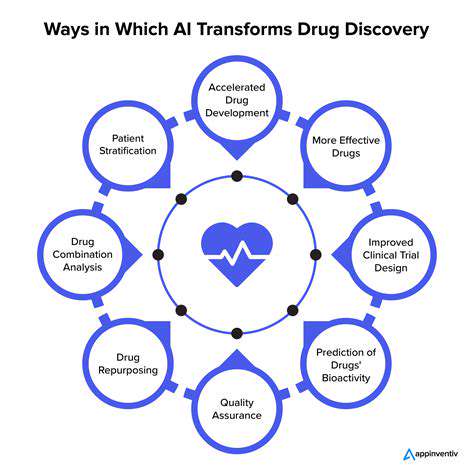

Countering algorithmic bias demands a comprehensive strategy. This includes implementing robust data auditing procedures, utilizing diverse training datasets, and developing algorithms specifically designed to identify and correct biases. Equally important are ethical guidelines that prioritize equitable outcomes throughout the AI lifecycle.
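As one example of a technique designed to correct bias in training data, the sketch below implements "reweighing" (described by Kamiran and Calders), which assigns each example the weight P(group) × P(label) / P(group, label) so that group and outcome become statistically independent in the weighted data. It is one option among many, not the method this article prescribes; the group and label values are hypothetical.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Per-example weights: P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] / n) * (label_counts[y] / n) / (joint_counts[(g, y)] / n)
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(weights, groups, labels, group):
    """Share of a group's total weight that falls on positive labels."""
    positive = sum(w for w, g, y in zip(weights, groups, labels) if g == group and y == 1)
    total = sum(w for w, g in zip(weights, groups) if g == group)
    return positive / total


# Hypothetical training set: group A has a 75% positive rate, group B only 25%.
groups = ["A"] * 4 + ["B"] * 4
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)
rate_a = weighted_positive_rate(weights, groups, labels, "A")
rate_b = weighted_positive_rate(weights, groups, labels, "B")
print(round(rate_a, 2), round(rate_b, 2))  # 0.5 0.5
```

The weights leave the data itself untouched, which makes them easy to audit; many scikit-learn estimators accept them through the `sample_weight` argument of `fit`.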

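Effective frameworks must emphasize continuous monitoring, because the data a deployed system encounters can drift away from its training data and introduce new disparities. Monitoring allows bias to be detected and corrected while the system is in use, and regular impact assessments help ensure systems remain fair as they evolve.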
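Continuous monitoring can be as simple as recomputing a fairness metric over a sliding window of recent decisions. The sketch below assumes decisions arrive as (group, approved) pairs; the class name, window size, and 0.8 threshold are illustrative assumptions rather than recommended values.

```python
from collections import deque


class DisparityMonitor:
    """Track recent decisions and flag when group approval rates diverge."""

    def __init__(self, window_size=1000, threshold=0.8):
        # A bounded deque drops the oldest decision once the window is full.
        self.window = deque(maxlen=window_size)
        self.threshold = threshold

    def record(self, group, approved):
        self.window.append((group, approved))

    def ratio(self):
        """Lowest group approval rate over the highest, within the window."""
        totals, positives = {}, {}
        for group, approved in self.window:
            totals[group] = totals.get(group, 0) + 1
            positives[group] = positives.get(group, 0) + int(approved)
        rates = [positives[g] / totals[g] for g in totals]
        if len(rates) < 2 or max(rates) == 0:
            return 1.0  # nothing to compare yet
        return min(rates) / max(rates)

    def alert(self):
        return self.ratio() < self.threshold


monitor = DisparityMonitor(window_size=4, threshold=0.8)
for group, approved in [("A", True), ("A", True), ("B", True), ("B", False)]:
    monitor.record(group, approved)
alert_before = monitor.alert()  # True: B's rate is half of A's
for group, approved in [("A", True), ("B", True), ("A", True), ("B", True)]:
    monitor.record(group, approved)
alert_after = monitor.alert()  # False: the window now holds only balanced decisions
```

Because old decisions age out of the window, the monitor reflects current behavior rather than the system's entire history.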

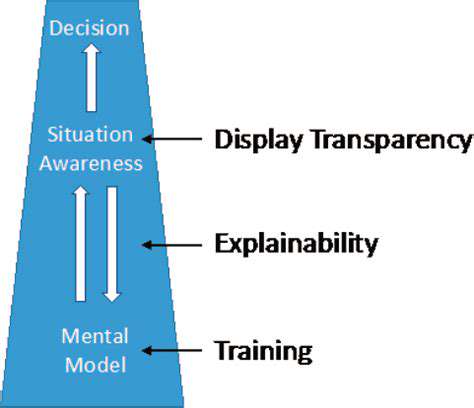

Transparency as a Cornerstone of Trust

Opaque algorithms erode public confidence in automated systems. When users can't understand how decisions are reached, they can't verify the absence of bias. Detailed explanations of algorithmic processes enable necessary scrutiny while fostering wider acceptance of AI tools.
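For the simplest models, a detailed explanation can be exact. In a linear scoring model, score = bias + Σ weight_i × feature_i, so each term is precisely that feature's contribution. The sketch below assumes such a model; the feature names and weights are hypothetical. Non-linear models need approximate attribution methods such as SHAP instead.

```python
def explain_linear_score(weights, bias, features):
    """Break a linear score into per-feature contributions."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    # List the most influential factors first, whichever direction they push.
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


# Hypothetical credit-scoring weights and one applicant's features.
weights = {"income_thousands": 0.02, "late_payments": -0.6, "years_employed": 0.1}
applicant = {"income_thousands": 50, "late_payments": 2, "years_employed": 3}
score, ranked = explain_linear_score(weights, -1.0, applicant)
print(round(score, 2))  # -0.9
for name, contribution in ranked:
    print(f"{name}: {contribution:+.2f}")
```

An applicant shown this breakdown can see that late payments drove the outcome, and a reviewer can check whether any listed factor is acting as a proxy for a protected attribute.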

The Power of Diverse Development Teams

Homogeneous engineering teams often overlook biases that affect groups outside their experience. Including developers, ethicists, and domain experts from varied backgrounds creates systems that better serve diverse populations. This approach leads to more thorough bias identification and more inclusive design choices.

Continuous Learning for Better Algorithms

As AI systems grow more sophisticated, so too must our approaches to fairness. Ongoing research into bias detection methods and comprehensive ethics training for developers are both essential. This sustained effort helps ensure artificial intelligence promotes equality rather than undermining it.

Data Privacy and Security: Safeguarding Student Information

Principles of Minimal Data Collection

Data minimization is a fundamental privacy protection strategy. By collecting only the information essential for defined purposes, institutions substantially reduce privacy risks: data that is never collected cannot be leaked or misused. This approach not only enhances security but also aligns with ethical data stewardship principles. Clear documentation should justify each data element collected and its specific uses.
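One way to enforce minimization in code is a purpose-based allow-list: each documented purpose names the fields it needs, and everything else is discarded before storage. The purposes, field names, and student record below are hypothetical.

```python
# Hypothetical allow-list: each purpose documents exactly which fields it needs.
ALLOWED_FIELDS = {
    "enrollment": {"student_id", "name", "grade_level"},
    "attendance_report": {"student_id", "grade_level", "days_absent"},
}


def minimize(record, purpose):
    """Keep only the fields documented as necessary for `purpose`."""
    allowed = ALLOWED_FIELDS.get(purpose)
    if allowed is None:
        # Refuse undocumented purposes instead of passing data through.
        raise ValueError(f"no documented purpose named {purpose!r}")
    return {key: value for key, value in record.items() if key in allowed}


student = {
    "student_id": "S-001",
    "name": "Jordan Example",
    "grade_level": 9,
    "days_absent": 3,
    "home_address": "123 Example St",
}
report_row = minimize(student, "attendance_report")
print(report_row)  # {'student_id': 'S-001', 'grade_level': 9, 'days_absent': 3}
```

The allow-list doubles as the documentation that justifies each data element, so adding a field requires stating which purpose needs it.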

Implementing Strong Security Protections

Modern educational systems require layered security measures. Encryption of data in transit and at rest, strict access controls, and continuous monitoring form the backbone of effective data protection. Regular security testing and comprehensive staff training help maintain robust defenses against evolving threats.
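Strict access controls are often implemented as role-based checks that deny by default and log every attempt for later review. The sketch below assumes a hypothetical mapping of school roles to permissions; real policies vary by institution and would live in a managed policy store rather than in code.

```python
from enum import Enum


class Permission(Enum):
    READ_GRADES = "read_grades"
    EDIT_GRADES = "edit_grades"
    READ_HEALTH = "read_health"


# Hypothetical role-to-permission mapping for illustration only.
ROLE_PERMISSIONS = {
    "teacher": {Permission.READ_GRADES, Permission.EDIT_GRADES},
    "nurse": {Permission.READ_HEALTH},
    "counselor": {Permission.READ_GRADES},
}

access_log = []  # every attempt is recorded so monitoring can spot misuse


def check_access(user, role, permission):
    """Deny by default: unknown roles receive no permissions."""
    allowed = permission in ROLE_PERMISSIONS.get(role, frozenset())
    access_log.append((user, role, permission.value, allowed))
    return allowed


allowed = check_access("t.lee", "teacher", Permission.EDIT_GRADES)
denied = check_access("c.diaz", "counselor", Permission.READ_HEALTH)
print(allowed, denied)  # True False
```

Logging denied attempts alongside granted ones gives the monitoring process a record of who tried to reach sensitive data, not only who succeeded.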

Building Privacy into Systems

Privacy considerations should inform every stage of system design rather than being added as an afterthought. Building features like automatic data expiration and role-based access into the initial architecture creates inherently more secure environments. Emerging technologies like homomorphic encryption, which allows computations to be performed directly on encrypted data, offer promising ways to analyze information while preserving individual privacy.
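Automatic data expiration can be built in by attaching a retention period to each record type and purging anything older. The sketch below assumes timezone-aware timestamps and hypothetical retention periods; treating record types with no documented retention period as expired is a deliberate design choice here, not a requirement.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention periods per record type.
RETENTION = {
    "attendance": timedelta(days=365),
    "login_events": timedelta(days=90),
}


def purge_expired(records, now=None):
    """Return only the records still within their retention period.

    Each record is a dict with a "type" and a timezone-aware "created_at".
    Records whose type has no documented retention period are dropped.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    kept = []
    for record in records:
        limit = RETENTION.get(record["type"])
        if limit is not None and now - record["created_at"] <= limit:
            kept.append(record)
    return kept


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
records = [
    {"type": "attendance", "created_at": datetime(2024, 1, 1, tzinfo=timezone.utc)},
    {"type": "login_events", "created_at": datetime(2024, 1, 1, tzinfo=timezone.utc)},
    {"type": "login_events", "created_at": datetime(2024, 5, 20, tzinfo=timezone.utc)},
    {"type": "survey_notes", "created_at": datetime(2024, 5, 30, tzinfo=timezone.utc)},
]
kept = purge_expired(records, now=now)
print([r["type"] for r in kept])  # ['attendance', 'login_events']
```

Running such a purge on a schedule means old data disappears without anyone having to remember to delete it.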

Meeting Legal and Ethical Standards

Compliance with regulations like GDPR and FERPA provides the baseline for responsible data handling. Transparent privacy policies should clearly explain data practices to students and families. Equally important are well-defined procedures for responding to potential breaches, helping to maintain community trust when issues arise.