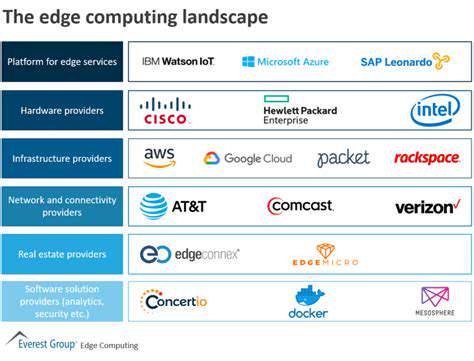

The Expanding Landscape of Edge Computing

The Rise of Edge Computing

Edge computing is changing how modern systems process data. Rather than funneling all information to centralized cloud servers, this approach handles data near its origin, whether industrial sensors, urban surveillance systems, or personal gadgets. Processing locally removes the round trip to a distant data center, which cuts delays sharply and lets systems respond to changing conditions almost immediately.

What's fueling this decentralized revolution? The explosive growth of interconnected smart devices generating torrents of real-time data. Applications such as self-driving vehicles, factory robotics, and intelligent energy grids demand near-instantaneous, fail-safe processing: requirements that traditional cloud setups struggle to meet consistently.

Key Applications of Edge Computing

Across industrial sectors, edge deployments are delivering significant new capabilities. Manufacturing plants can now detect equipment irregularities as they occur and address them before they turn into breakdowns. The financial impact? Lower maintenance costs and less unplanned downtime.

Healthcare providers leverage edge technology to monitor patients remotely, enabling life-saving interventions regardless of location. For rural communities or individuals managing chronic illnesses, this localized processing power can mean the difference between timely care and dangerous delays.

Enhanced Security and Privacy

Edge architecture can improve security by minimizing how far data travels. When sensitive information is processed locally rather than sent across networks, there are fewer opportunities to intercept it in transit. The trade-off is that every edge node must itself be secured: distributing processing reduces exposure in transit, but it also adds devices that attackers can target.

This localized approach also addresses growing privacy concerns. Under stringent regulations such as the GDPR, keeping data close to its source can simplify compliance while building consumer trust in how their information is handled.

Overcoming Cloud Limitations

While cloud computing revolutionized data storage, the round trip to a remote data center becomes a real constraint in latency-sensitive scenarios. Edge computing complements the cloud: it handles time-critical processing locally while still integrating with cloud systems for heavy analytics. This hybrid approach combines local responsiveness with the cloud's scale, producing a more resilient infrastructure than either achieves alone.

The bandwidth savings alone make a compelling case, as edge nodes filter and process data before transmitting only what's essential to central systems.
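
As a minimal sketch of that filtering, the Python snippet below applies a simple deadband rule: a reading is forwarded only if it differs from the last forwarded value by more than a threshold. The function name `deadband_filter`, the sample data, and the 0.5-unit threshold are illustrative assumptions, not part of any particular edge platform.

```python
from typing import Iterable, List, Optional, Tuple

Reading = Tuple[float, float]  # (timestamp_s, value)


def deadband_filter(readings: Iterable[Reading], threshold: float) -> List[Reading]:
    """Keep only readings that changed by more than `threshold` since the last one sent.

    The first reading is always forwarded so the central system has a baseline.
    """
    forwarded: List[Reading] = []
    last_sent: Optional[float] = None
    for ts, value in readings:
        if last_sent is None or abs(value - last_sent) > threshold:
            forwarded.append((ts, value))
            last_sent = value
    return forwarded


if __name__ == "__main__":
    samples = [(0, 20.0), (1, 20.1), (2, 20.2), (3, 21.5), (4, 21.6), (5, 19.0)]
    sent = deadband_filter(samples, threshold=0.5)
    print(f"forwarded {len(sent)} of {len(samples)} readings: {sent}")
```

With these sample values, three of the six readings are forwarded. A practical filter might also send a periodic value even when nothing changes, so that silence is not mistaken for a stable reading.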

Scalability and Flexibility

Edge networks shine in their ability to grow organically. Enterprises can deploy additional nodes exactly where needed, creating tailored solutions for specific operational requirements. From retail inventory sensors to offshore drilling monitors, the modular nature of edge deployments allows precise customization.

This adaptability proves invaluable as technology evolves. When new edge devices follow the same interfaces and management tooling, they can join existing networks without a redesign, which helps protect infrastructure investments as the technology changes.

The Future of Edge Computing

Edge technology is still in its infancy. As AI capabilities move onto edge devices, those devices will be able to make decisions autonomously, without depending on the cloud. Imagine traffic lights that optimize traffic flow on their own, or drones that navigate complex environments independently, all powered by edge-based intelligence.

The shrinking size and cost of edge hardware will democratize access, enabling small businesses and even individuals to harness capabilities once reserved for tech giants. This widespread adoption will spark an explosion of creative applications we can scarcely imagine today.

Key Challenges in Edge Device Management

Device Discovery and Inventory

Maintaining an accurate, real-time registry of edge devices presents an ongoing challenge. These distributed systems require automated discovery protocols that track not just device presence but detailed configurations. Without this visibility, administrators operate blind to their network's true composition and vulnerabilities.

A common approach is a self-reporting architecture, in which devices continuously report their status, creating a living inventory that updates automatically as the network evolves.
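
One way to picture such a self-reporting registry is the minimal in-memory sketch below, assuming each device periodically sends its ID and firmware version along with a timestamp. The `Inventory` class, the 60-second staleness window, and the device names are hypothetical; a production registry would persist records and authenticate the reports.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class DeviceRecord:
    device_id: str
    firmware: str
    last_seen: float  # time of the most recent self-report, in seconds


class Inventory:
    """In-memory registry kept current by device self-reports (heartbeats)."""

    def __init__(self, stale_after_s: float) -> None:
        self.stale_after_s = stale_after_s
        self.devices: Dict[str, DeviceRecord] = {}

    def report(self, device_id: str, firmware: str, now: float) -> None:
        # A report either registers a new device or refreshes an existing record,
        # so configuration changes (here, the firmware version) are picked up automatically.
        self.devices[device_id] = DeviceRecord(device_id, firmware, now)

    def stale(self, now: float) -> List[str]:
        # Devices that have not reported recently may be offline or unreachable.
        return sorted(
            d.device_id for d in self.devices.values()
            if now - d.last_seen > self.stale_after_s
        )

    def firmware_counts(self) -> Dict[str, int]:
        # Useful for spotting devices still running an outdated version.
        return dict(Counter(d.firmware for d in self.devices.values()))


if __name__ == "__main__":
    inv = Inventory(stale_after_s=60)
    inv.report("cam-01", "1.4.2", now=0)
    inv.report("pump-07", "2.0.0", now=50)
    inv.report("cam-02", "1.4.2", now=90)
    print(inv.stale(now=100))  # cam-01 has been silent for 100 s
    print(inv.firmware_counts())
```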

Security Management at the Edge

Each edge device represents a potential entry point for attackers. Traditional perimeter security models break down in distributed environments, demanding innovative approaches to protection. Zero-trust architectures gain traction here, verifying every access request regardless of origin.

Firmware updates present particular challenges—ensuring timely security patches reach thousands of geographically dispersed devices requires robust, automated deployment systems with fail-safe verification.
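
A minimal Python sketch of the device-side verification step follows, assuming the update server publishes a SHA-256 digest for each image and that the device has two firmware slots (an A/B layout) so it can fall back to the previous version. `DualSlotDevice` and its methods are hypothetical. Note that a digest only detects corruption or tampering if the digest itself arrives through a trusted channel, such as a signed manifest.

```python
import hashlib


def verify_firmware(image: bytes, expected_sha256: str) -> bool:
    """Check that the downloaded image matches the digest published for it."""
    return hashlib.sha256(image).hexdigest() == expected_sha256.lower()


class DualSlotDevice:
    """Hypothetical device with two firmware slots (A/B) for safe updates."""

    def __init__(self, firmware: bytes) -> None:
        self.slots = [firmware, b""]
        self.active = 0

    def running(self) -> bytes:
        return self.slots[self.active]

    def apply_update(self, image: bytes, expected_sha256: str) -> bool:
        if not verify_firmware(image, expected_sha256):
            return False  # reject corrupted or tampered image; keep current firmware
        standby = 1 - self.active
        self.slots[standby] = image  # write to the slot that is not running
        self.active = standby        # switch over only after a verified write
        return True

    def rollback(self) -> bool:
        # Revert to the previous slot, e.g. if a post-update health check fails.
        previous = 1 - self.active
        if not self.slots[previous]:
            return False
        self.active = previous
        return True


if __name__ == "__main__":
    device = DualSlotDevice(b"firmware-v1")
    good = b"firmware-v2"
    print(device.apply_update(good, hashlib.sha256(good).hexdigest()))
    print(device.apply_update(b"firmware-v3", "0" * 64))
    print(device.running())
```

Keeping the previous image in the inactive slot means a bad update can be undone locally, without a second download over a possibly unreliable link.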

Scalability and Performance

As edge networks expand, management systems must scale to very large numbers of devices without performance degradation. A common strategy is a hierarchical architecture in which local controllers handle routine operations and escalate only exceptional situations to central systems.
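
The hierarchical pattern can be sketched in a few lines of Python, assuming each event carries a numeric severity and each site's controller escalates only events at or above a configurable level. The class names, the severity scale, and the threshold are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Event:
    device_id: str
    kind: str
    severity: int  # hypothetical scale: 0 = info, 1 = warning, 2 = error, 3 = critical


@dataclass
class LocalController:
    site: str
    escalate_at: int = 2  # events at or above this severity go to the central system
    handled: List[Event] = field(default_factory=list)
    escalated: List[Event] = field(default_factory=list)

    def handle(self, event: Event) -> str:
        if event.severity >= self.escalate_at:
            self.escalated.append(event)
            return "escalated"
        self.handled.append(event)  # routine event resolved at the site
        return "local"


def central_queue(controllers: List[LocalController]) -> List[Event]:
    """The central system sees only what each site chose to escalate."""
    return [e for c in controllers for e in c.escalated]


if __name__ == "__main__":
    plant = LocalController("plant-north")
    depot = LocalController("depot-east")
    plant.handle(Event("press-3", "temp_drift", 1))
    plant.handle(Event("press-3", "overheat", 3))
    depot.handle(Event("door-1", "heartbeat_late", 0))
    for e in central_queue([plant, depot]):
        print(e.device_id, e.kind)
```

Because routine events never leave the site, the load on the central system grows with the number of exceptional situations rather than with the total number of devices.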

Latency also becomes critical: management commands must reach edge devices quickly, even when thousands of nodes are spread across continents and network distance alone adds delay.

Data Management and Analytics

The data deluge from edge sensors threatens to overwhelm traditional analysis methods. Edge analytics solutions now pre-process information locally, sending only distilled insights to central systems. This approach preserves bandwidth while ensuring decision-makers receive the most relevant information.
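
A minimal sketch of such local pre-processing, assuming readings arrive as (timestamp, value) pairs: the edge node replaces every raw reading in a fixed time window with a single summary record (count, minimum, maximum, mean). The 60-second window and the field names are illustrative choices.

```python
from statistics import mean
from typing import Dict, Iterable, List, Tuple

Reading = Tuple[float, float]  # (timestamp_s, value)


def summarize(readings: Iterable[Reading], window_s: float) -> List[Dict[str, float]]:
    """Collapse raw readings into one summary record per fixed time window."""
    buckets: Dict[int, List[float]] = {}
    for ts, value in readings:
        # Readings with the same window index share a summary record.
        buckets.setdefault(int(ts // window_s), []).append(value)
    return [
        {
            "window_start": index * window_s,
            "count": len(values),
            "min": min(values),
            "max": max(values),
            "mean": mean(values),
        }
        for index, values in sorted(buckets.items())
    ]


if __name__ == "__main__":
    raw = [(0, 1.0), (10, 3.0), (59, 2.0), (60, 10.0), (90, 20.0)]
    for record in summarize(raw, window_s=60):
        print(record)
```

Five raw readings in the sample become two summary records; in general, the reduction depends on how many readings fall into each window.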

Machine learning models deployed at the edge can identify patterns in real-time, triggering immediate responses without waiting for human analysis.
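
Full machine learning models are beyond a short example, but the simplified sketch below shows the same real-time pattern with a lightweight statistical stand-in: each new reading is compared against a rolling baseline and flagged if it lies more than a set number of standard deviations from the recent mean. The window size and threshold are assumptions to tune per signal.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Deque


class RollingZScoreDetector:
    """Flag values that deviate sharply from a trailing window of recent values."""

    def __init__(self, window: int = 20, threshold: float = 3.0) -> None:
        self.history: Deque[float] = deque(maxlen=window)
        self.threshold = threshold

    def update(self, value: float) -> bool:
        anomalous = False
        if len(self.history) >= 2:
            recent = list(self.history)
            mu = mean(recent)
            sigma = pstdev(recent)
            # Skip the test when the window is perfectly flat (sigma == 0).
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                anomalous = True
        self.history.append(value)  # the newest value joins the baseline
        return anomalous


if __name__ == "__main__":
    detector = RollingZScoreDetector(window=8, threshold=3.0)
    for reading in [10, 12, 9, 11, 10, 12, 9, 11, 40]:
        if detector.update(reading):
            print(f"anomaly: {reading}")  # a local response would be triggered here
```

In this sketch an anomalous value is added to the baseline like any other; a real deployment might exclude flagged values so that a single spike does not inflate the baseline.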

Remote Management and Maintenance

Physical access to edge devices is often impractical, so robust remote management, from diagnostics to configuration changes, becomes essential. Secure remote-access tunnels (for example, VPN or SSH connections) let technicians troubleshoot devices halfway around the world as if they were on-site.

Predictive maintenance algorithms analyze device telemetry to schedule service when it is actually needed, avoiding both premature replacements and unexpected failures.

Interoperability and Integration

The heterogeneous nature of edge ecosystems—with devices from multiple vendors using various protocols—creates integration headaches. Standardization efforts like OpenFog and industrial IoT frameworks help, but practical solutions often require custom middleware.

APIs become the glue binding disparate systems, with well-documented interfaces allowing different components to exchange data seamlessly.
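
Much of that custom middleware amounts to adapters that translate each vendor's payload into one common schema, which the rest of the system then consumes through a single interface. The sketch below assumes two hypothetical vendor formats (`vendor_a` reports Celsius, `vendor_b` reports Fahrenheit under nested keys); all field names are invented for illustration.

```python
from typing import Any, Callable, Dict

Message = Dict[str, Any]
# Common schema used downstream: device_id, metric, value, unit.


def from_vendor_a(msg: Message) -> Message:
    return {"device_id": msg["id"], "metric": "temperature",
            "value": msg["temp_c"], "unit": "C"}


def from_vendor_b(msg: Message) -> Message:
    fahrenheit = msg["data"]["tempF"]
    return {"device_id": msg["serial"], "metric": "temperature",
            "value": round((fahrenheit - 32) * 5 / 9, 2), "unit": "C"}


ADAPTERS: Dict[str, Callable[[Message], Message]] = {
    "vendor_a": from_vendor_a,
    "vendor_b": from_vendor_b,
}


def normalize(source: str, msg: Message) -> Message:
    """Translate a vendor-specific payload into the common schema."""
    adapter = ADAPTERS.get(source)
    if adapter is None:
        raise ValueError(f"no adapter registered for {source!r}")
    return adapter(msg)


if __name__ == "__main__":
    print(normalize("vendor_a", {"id": "A-17", "temp_c": 21.5}))
    print(normalize("vendor_b", {"serial": "B-9", "data": {"tempF": 212}}))
```

Supporting a new vendor then means registering one more adapter rather than changing every consumer.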

Resource Allocation and Optimization

Edge devices frequently operate with constrained resources—limited power, memory, or processing capacity. Intelligent resource management ensures critical functions always have what they need, dynamically adjusting allocations based on real-time demands.

Techniques like containerization allow multiple applications to share edge hardware efficiently, isolating processes while maximizing utilization.
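
The idea of always giving critical functions what they need first can be sketched as a greedy, priority-ordered allocation of a fixed resource budget. This is a simplified illustration, assuming memory is the constrained resource and each workload declares a priority and a demand; the workload names and numbers are hypothetical. Re-running the allocation whenever demands change gives the dynamic adjustment described above.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Workload:
    name: str
    priority: int   # higher value = more critical
    demand_mb: int  # memory the workload currently asks for


def allocate(workloads: List[Workload], capacity_mb: int) -> Dict[str, int]:
    """Grant memory in priority order; lower-priority work gets what is left."""
    remaining = capacity_mb
    grants: Dict[str, int] = {}
    for w in sorted(workloads, key=lambda w: w.priority, reverse=True):
        grant = min(w.demand_mb, remaining)
        grants[w.name] = grant
        remaining -= grant
    return grants


if __name__ == "__main__":
    jobs = [
        Workload("safety-interlock", priority=10, demand_mb=128),
        Workload("telemetry", priority=5, demand_mb=256),
        Workload("dashboard-ui", priority=1, demand_mb=256),
    ]
    print(allocate(jobs, capacity_mb=512))
    jobs[1].demand_mb = 384  # telemetry burst: re-run the allocation
    print(allocate(jobs, capacity_mb=512))
```

On a containerized node, grants like these could be applied as per-container memory limits.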

Orchestration Strategies for Effective Edge Management


Mastering edge environments requires symphony-like coordination. Successful orchestration blends automated workflows with human oversight, creating systems that adapt fluidly to changing conditions. The payoff? Networks that keep performing well as their complexity grows.

Defining Clear Roles and Responsibilities

In distributed edge systems, ambiguity breeds chaos. Each component—whether hardware or software—requires explicit parameters defining its functions and boundaries. This clarity prevents resource conflicts and ensures seamless interoperability across the network.

Implementing Robust Communication Protocols

Edge ecosystems demand communication frameworks that are both lightweight and resilient. Message queues and publish-subscribe models enable efficient data exchange between devices, while cryptographic techniques such as message authentication codes and digital signatures verify message integrity across untrusted networks; some designs also borrow blockchain-inspired techniques for this purpose.
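
As a minimal sketch of integrity checking in a publish-subscribe setting, the example below uses a toy in-process broker and HMAC-SHA256 tags from Python's standard library, assuming publisher and subscriber share a secret key provisioned out of band. The `Broker` class and the key are hypothetical. An HMAC shows that a message came from some holder of the key and was not altered; per-device digital signatures would be needed to tell individual senders apart.

```python
import hashlib
import hmac
import json
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List, Optional

Handler = Callable[[Dict[str, str]], None]


def sign(payload: dict, key: bytes) -> Dict[str, str]:
    body = json.dumps(payload, sort_keys=True)
    tag = hmac.new(key, body.encode(), hashlib.sha256).hexdigest()
    return {"body": body, "hmac": tag}


def verify(message: Dict[str, str], key: bytes) -> Optional[dict]:
    """Return the decoded payload, or None if the tag does not match."""
    expected = hmac.new(key, message["body"].encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, message["hmac"]):
        return None
    return json.loads(message["body"])


class Broker:
    """Toy in-process broker: delivers each message to every topic subscriber."""

    def __init__(self) -> None:
        self.subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self.subscribers[topic].append(handler)

    def publish(self, topic: str, message: Dict[str, str]) -> None:
        for handler in self.subscribers[topic]:
            handler(message)


if __name__ == "__main__":
    key = b"demo-key-provisioned-out-of-band"  # hypothetical shared secret
    accepted: List[dict] = []
    rejected: List[Dict[str, str]] = []

    def on_message(message: Dict[str, str]) -> None:
        payload = verify(message, key)
        if payload is None:
            rejected.append(message)  # drop messages that fail the integrity check
        else:
            accepted.append(payload)

    broker = Broker()
    broker.subscribe("sensors/temp", on_message)
    broker.publish("sensors/temp", sign({"device": "t-1", "celsius": 21.5}, key))
    tampered = sign({"device": "t-1", "celsius": 21.5}, key)
    tampered["body"] = tampered["body"].replace("21.5", "99.9")
    broker.publish("sensors/temp", tampered)
    print(accepted, len(rejected))
```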

Utilizing Agile Methodologies for Flexibility

The rapid evolution of edge technologies necessitates iterative development approaches. Modular architectures allow components to upgrade independently, preventing system-wide disruptions during updates. Continuous integration pipelines ensure new capabilities deploy smoothly across diverse edge environments.

Employing Orchestration Platforms

Specialized orchestration platforms provide the central nervous system for edge networks. These solutions offer real-time visibility into thousands of nodes, with dashboards highlighting anomalies and performance trends. Automation rules handle routine adjustments while flagging situations requiring human judgment.

Proactive Risk Management and Contingency Planning

In distributed systems, failures are inevitable, so the goal shifts to graceful degradation. Intelligent orchestration includes failover mechanisms in which backup nodes activate automatically and traffic is rerouted around problem areas. Chaos engineering techniques deliberately inject failures to test these contingencies, revealing weaknesses before real crises occur.
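
The failover logic and a tiny chaos-style experiment can be sketched together, assuming nodes are listed in priority order and a separate health-check process marks them up or down. `FailoverRouter`, `chaos_experiment`, and the node names are hypothetical; real chaos engineering tools inject faults into live or staging systems rather than into an in-memory model.

```python
import random
from typing import Dict, List, Optional


class FailoverRouter:
    """Send traffic to the highest-priority node that is currently healthy."""

    def __init__(self, nodes_in_priority_order: List[str]) -> None:
        self.nodes = list(nodes_in_priority_order)
        self.healthy: Dict[str, bool] = {n: True for n in self.nodes}

    def mark(self, node: str, healthy: bool) -> None:
        # In practice this would be driven by health checks or missed heartbeats.
        self.healthy[node] = healthy

    def route(self) -> Optional[str]:
        for node in self.nodes:
            if self.healthy[node]:
                return node
        return None  # total outage: nothing left to fail over to


def chaos_experiment(router: FailoverRouter, rng: random.Random) -> bool:
    """Fail one random node and check that traffic still has somewhere to go."""
    victim = rng.choice(router.nodes)
    was_healthy = router.healthy[victim]
    router.mark(victim, False)
    try:
        target = router.route()
        return target is not None and target != victim
    finally:
        router.mark(victim, was_healthy)  # restore the node's previous state


if __name__ == "__main__":
    router = FailoverRouter(["edge-a", "edge-b", "edge-c"])
    print(router.route())
    router.mark("edge-a", False)
    print(router.route())
    print(chaos_experiment(router, random.Random(7)))
```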

Leveraging Automation and AI for Enhanced Efficiency

Automating Network Management Tasks

Modern networks achieve reliability through intelligent automation. Routine tasks like configuration management and performance tuning now run autonomously, freeing engineers for strategic work. Over time, these systems can refine their behavior based on the operational data they collect.
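
Automated configuration management often follows a reconciliation loop: compare each device's actual settings with a declared desired state and correct any drift. The sketch below shows one pass of such a loop in Python, assuming configurations are flat key-value maps; the setting names are hypothetical, and a real controller would run this periodically and push changes over the device's management interface.

```python
from typing import Any, Dict, Tuple

Config = Dict[str, Any]
_MISSING = object()  # marks a key that is absent on one side


def diff(desired: Config, actual: Config) -> Dict[str, Tuple[Any, Any]]:
    """Return {key: (actual_value, desired_value)} for every drifted setting."""
    changes: Dict[str, Tuple[Any, Any]] = {}
    for key in sorted(set(desired) | set(actual)):
        want = desired.get(key, _MISSING)
        have = actual.get(key, _MISSING)
        if want != have:
            changes[key] = (have, want)
    return changes


def reconcile(desired: Config, actual: Config) -> Dict[str, Tuple[Any, Any]]:
    """Bring `actual` in line with `desired` in place; return what was changed."""
    changes = diff(desired, actual)
    for key, (_, want) in changes.items():
        if want is _MISSING:
            del actual[key]  # setting not declared in the desired state
        else:
            actual[key] = want
    return changes


if __name__ == "__main__":
    desired = {"mtu": 1500, "ntp_server": "time.example.net", "log_level": "info"}
    actual = {"mtu": 9000, "log_level": "info", "debug_shell": True}
    for key in reconcile(desired, actual):
        print("corrected", key)
```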

AI-Powered Predictive Maintenance

Machine learning models analyze equipment telemetry to forecast maintenance needs. Vibration patterns, thermal signatures, and power consumption trends can be combined to flag developing failures well ahead of time, in some cases weeks in advance. The result? Maintenance transitions from reactive to predictive, with interventions scheduled during planned downtime.
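
A much simpler relative of those models is linear trend extrapolation on a single signal: fit a least-squares line to recent readings and estimate when it will cross an alarm threshold. The sketch below assumes daily vibration readings and a hypothetical threshold; it illustrates the forecasting idea, not the multi-signal machine learning models described above.

```python
from typing import Optional, Sequence


def days_until_threshold(days: Sequence[float], values: Sequence[float],
                         threshold: float) -> Optional[float]:
    """Estimate days remaining until a rising trend reaches `threshold`.

    `days` must be in ascending order. Returns None if the fitted trend is
    flat or falling.
    """
    n = len(days)
    if n < 2 or n != len(values):
        raise ValueError("days and values must be equal-length with at least two points")
    mean_x = sum(days) / n
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in days)
    if sxx == 0:
        raise ValueError("readings must span more than one day")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(days, values))
    slope = sxy / sxx  # least-squares slope
    intercept = mean_y - slope * mean_x
    if slope <= 0:
        return None
    crossing_day = (threshold - intercept) / slope
    # 0.0 means the fitted trend has already reached the threshold.
    return max(0.0, crossing_day - days[-1])


if __name__ == "__main__":
    vibration_mm_s = [2.0, 2.1, 2.2, 2.3, 2.4]  # hypothetical daily RMS readings
    print(days_until_threshold([0, 1, 2, 3, 4], vibration_mm_s, threshold=4.5))
```

For the sample readings the fitted line rises by 0.1 per day from 2.0, so it reaches 4.5 on day 25, about 21 days after the last reading.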

Enhanced Security Through Machine Learning

AI security systems establish behavioral baselines for networks, then flag anomalies in real time. These systems can detect novel attack patterns that rule-based solutions miss, adapting as threats evolve. Cryptographic techniques, such as signing and verifying model files, help protect the AI models themselves against tampering.

Optimizing Resource Allocation with AI

Reinforcement learning algorithms dynamically adjust network resources, learning optimal configurations through continuous experimentation. These systems balance competing demands—streaming video versus VoIP calls, for instance—allocating bandwidth where it creates maximum value.
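
Full reinforcement learning is beyond a short example, but its core explore-versus-exploit loop can be shown with an epsilon-greedy multi-armed bandit, a simplified, stateless form of the idea. The sketch assumes a handful of hypothetical bandwidth-split configurations and a reward signal (for instance, a measured quality-of-experience score) observed after each trial; both are illustrative, and the simulated rewards stand in for real measurements.

```python
import random
from typing import List, Optional, Sequence


class EpsilonGreedyAllocator:
    """Learn which configuration earns the highest average reward."""

    def __init__(self, configs: Sequence[str], epsilon: float = 0.1,
                 rng: Optional[random.Random] = None) -> None:
        self.configs = list(configs)
        self.epsilon = epsilon
        self.rng = rng or random.Random()
        self.counts: List[int] = [0] * len(self.configs)
        self.values: List[float] = [0.0] * len(self.configs)  # running mean reward

    def choose(self) -> int:
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.configs))  # explore
        return max(range(len(self.configs)), key=lambda i: self.values[i])  # exploit

    def update(self, index: int, reward: float) -> None:
        self.counts[index] += 1
        # Incremental mean: avoids storing every past reward.
        self.values[index] += (reward - self.values[index]) / self.counts[index]


if __name__ == "__main__":
    configs = ["video-heavy", "balanced", "voip-heavy"]
    # Hypothetical average reward for each split; unknown to the learner.
    true_reward = {"video-heavy": 0.6, "balanced": 0.9, "voip-heavy": 0.5}
    env = random.Random(1)
    agent = EpsilonGreedyAllocator(configs, epsilon=0.1, rng=random.Random(42))
    for _ in range(2000):
        i = agent.choose()
        agent.update(i, true_reward[configs[i]] + env.gauss(0, 0.05))
    best = max(range(len(configs)), key=lambda i: agent.values[i])
    print("preferred configuration:", configs[best])
```

The `epsilon` parameter sets how often the allocator keeps experimenting instead of using the best configuration found so far, which lets it notice if conditions change.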

Improved User Experience through Intelligent Routing

AI-driven routing weighs many variables, from network congestion to application requirements, to choose paths that minimize latency and packet loss. During outages, these systems compute alternative routes on the fly, often maintaining service despite multiple points of failure.
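
The sketch below shows the path-selection half of that idea with a classic algorithm rather than AI: Dijkstra's shortest-path search over a composite link cost that adds a penalty for packet loss to measured latency, then recomputes the route when links fail. The topology, the metrics, and the `LOSS_PENALTY_MS` weight are hypothetical; in an AI-driven system, a learned model might supply the link costs instead.

```python
import heapq
from typing import Dict, FrozenSet, List, Optional, Tuple

# node -> neighbor -> (latency_ms, loss_rate between 0 and 1)
Graph = Dict[str, Dict[str, Tuple[float, float]]]
LOSS_PENALTY_MS = 1000.0  # hypothetical: each 1% of loss "costs" 10 ms


def add_link(graph: Graph, a: str, b: str, latency_ms: float, loss_rate: float) -> None:
    graph.setdefault(a, {})[b] = (latency_ms, loss_rate)
    graph.setdefault(b, {})[a] = (latency_ms, loss_rate)


def best_path(graph: Graph, src: str, dst: str,
              failed: FrozenSet[FrozenSet[str]] = frozenset()) -> Optional[List[str]]:
    """Dijkstra over latency + loss penalty, skipping links listed in `failed`."""
    dist = {src: 0.0}
    prev: Dict[str, str] = {}
    heap = [(0.0, src)]
    done = set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in done:
            continue
        done.add(node)
        if node == dst:
            break
        for nbr, (latency, loss) in graph.get(node, {}).items():
            if frozenset((node, nbr)) in failed:
                continue  # route around links reported as down
            nd = d + latency + LOSS_PENALTY_MS * loss
            if nd < dist.get(nbr, float("inf")):
                dist[nbr] = nd
                prev[nbr] = node
                heapq.heappush(heap, (nd, nbr))
    if dst not in dist:
        return None
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    return path[::-1]


if __name__ == "__main__":
    net: Graph = {}
    add_link(net, "A", "B", 10, 0.0)
    add_link(net, "B", "D", 10, 0.0)
    add_link(net, "A", "C", 15, 0.0)
    add_link(net, "C", "D", 15, 0.0)
    add_link(net, "A", "D", 5, 0.05)  # short but lossy direct link
    print(best_path(net, "A", "D"))
    print(best_path(net, "A", "D", failed=frozenset({frozenset(("B", "D"))})))
```

Here the direct A-D link has the lowest latency but costs 55 once its 5% loss is penalized, so traffic goes A-B-D (cost 20) and falls back to A-C-D (cost 30) when the B-D link fails.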

Scalability and Flexibility of Automated Networks

Self-configuring networks represent the next evolution. New devices authenticate and integrate automatically, and the network adjusts its topology and policies to accommodate them. This plug-and-play capability makes scaling far simpler, whether adding single nodes or entire data centers.

Real-time Monitoring and Troubleshooting

AI correlation engines can process millions of events per second, distinguishing meaningful patterns from noise. When issues arise, these systems can often resolve them autonomously (restarting services, adjusting configurations, or failing over to backup systems) before users notice any disruption.
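
A toy version of event correlation and automated remediation is sketched below, assuming each event carries a timestamp, a device ID, and an event type. It groups bursts of events from the same device into a single incident and looks up a remediation for the first event in each burst, treating it as the probable cause; unknown causes fall back to escalating to an operator. The time window, the event types, and the `RUNBOOK` actions are hypothetical, and real correlation engines use far richer signals than time proximity.

```python
from collections import defaultdict
from typing import DefaultDict, Dict, List, Tuple

Event = Tuple[float, str, str]  # (timestamp_s, device_id, event_type)
Incident = Tuple[str, List[Event]]

# Hypothetical mapping from probable cause to an automated action.
RUNBOOK: Dict[str, str] = {
    "link_down": "fail_over",
    "service_crash": "restart_service",
    "config_mismatch": "reapply_config",
}


def correlate(events: List[Event], window_s: float) -> List[Incident]:
    """Group each device's events into incidents separated by quiet gaps > window_s."""
    by_device: DefaultDict[str, List[Event]] = defaultdict(list)
    for event in sorted(events):  # chronological order
        by_device[event[1]].append(event)
    incidents: List[Incident] = []
    for device, evs in sorted(by_device.items()):
        current = [evs[0]]
        for event in evs[1:]:
            if event[0] - current[-1][0] <= window_s:
                current.append(event)  # part of the same burst
            else:
                incidents.append((device, current))
                current = [event]
        incidents.append((device, current))
    return incidents


def remediation(incident: Incident) -> str:
    _, events = incident
    probable_cause = events[0][2]  # simplistic: earliest event in the burst
    return RUNBOOK.get(probable_cause, "escalate_to_operator")


if __name__ == "__main__":
    events = [(0, "gw-1", "link_down"), (2, "gw-1", "service_crash"),
              (3, "gw-1", "service_crash"), (100, "gw-1", "config_mismatch"),
              (1, "cam-4", "disk_full")]
    for inc in correlate(events, window_s=10):
        print(inc[0], len(inc[1]), "event(s) ->", remediation(inc))
```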