Data Collection and Feature Engineering for AI Models

Data Acquisition Strategies

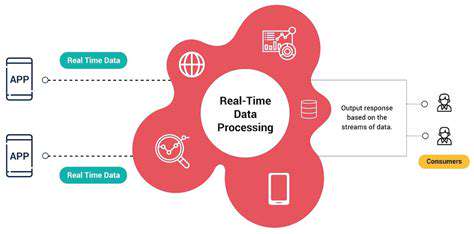

Effective data collection underpins any successful machine learning project. The acquisition strategy determines the quality and relevance of the data used for training and evaluation, so it calls for careful consideration of the data source, the data format, and the data volume. Data quality directly affects the accuracy and reliability of the resulting model: noisy, incomplete, or unrepresentative data leads to inaccurate predictions, however well the model itself is designed.

Different data sources, such as databases, APIs, web scraping, and sensor readings, each have their own strengths and weaknesses. Understanding these differences is essential for selecting the appropriate method for extracting the required data. The chosen strategy should also account for potential biases in the data (for example, a sample that over-represents one group of users), as these can significantly affect model performance.

Feature Engineering Techniques

Feature engineering is the process of transforming raw data into features that better represent the underlying problem and improve the performance of machine learning models. It involves selecting, creating, and modifying features so that the model can more easily learn patterns and relationships within the data. Doing this well usually requires domain expertise.

Common feature engineering techniques include scaling, normalization, one-hot encoding, polynomial expansion, and feature selection. Which techniques are appropriate depends on the nature of the data and the desired outcome. For example, scaling numerical features can prevent features with larger numeric ranges from dominating the learning process, which matters most for models that rely on distances or gradient-based optimization.
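As a rough illustration of two of the techniques listed above, the following sketch rescales a numeric column to the range [0, 1] (min-max scaling) and one-hot encodes a categorical column. It uses only the Python standard library; the function names and the toy `incomes` and `colors` columns are assumptions made up for this example, not part of any particular library.

```python
def min_max_scale(values):
    """Rescale a list of numbers to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant column has no spread to rescale; map it to zeros.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def one_hot_encode(labels):
    """Return (categories, rows) with one 0/1 indicator per sorted category."""
    categories = sorted(set(labels))
    rows = [[1 if label == c else 0 for c in categories] for label in labels]
    return categories, rows


# Hypothetical toy columns, invented for illustration only.
incomes = [20_000, 50_000, 80_000]
colors = ["red", "green", "red"]

scaled_incomes = min_max_scale(incomes)              # [0.0, 0.5, 1.0]
categories, encoded_colors = one_hot_encode(colors)  # ['green', 'red'], [[0, 1], [1, 0], [0, 1]]
```

In a real pipeline the scaling bounds and category list would typically be computed on the training data only and then reused on new data, so that evaluation data does not leak into the transformation.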

Data Preprocessing

Data preprocessing is a critical step in the machine learning pipeline, responsible for preparing the data for model training. This involves handling missing values, outliers, and inconsistencies in the data. Proper preprocessing gives the model cleaner and more reliable input, which generally leads to more accurate and robust predictions.

Techniques like imputation for missing values, outlier detection and removal, and data transformation (e.g., log transformation) are commonly used in data preprocessing. These steps help to ensure that the data is in a suitable format for the chosen machine learning algorithms.

Handling Missing Values

Missing values are a common problem in datasets, and how they are handled affects how accurately a model can be trained. Simply deleting the rows or columns that contain missing values discards information and can bias the dataset if the values are not missing at random. Imputation, which fills in each gap with an estimated value, is often a more effective solution.

Imputation techniques include mean or median imputation, k-nearest neighbors imputation, and more advanced model-based methods. Choosing the right imputation method depends on why the data is missing and on the characteristics of the dataset; for instance, the median is less sensitive to extreme values than the mean.
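A minimal sketch of mean and median imputation is shown below, assuming missing entries are represented as `None`. The `impute` helper and the toy `ages` column are hypothetical names introduced for illustration.

```python
from statistics import mean, median


def impute(values, strategy="median"):
    """Replace None entries with the mean or median of the observed values."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute a column with no observed values")
    if strategy == "mean":
        fill = mean(observed)
    elif strategy == "median":
        fill = median(observed)
    else:
        raise ValueError(f"unknown strategy: {strategy!r}")
    return [fill if v is None else v for v in values]


# Hypothetical column with two missing entries and one extreme value (100).
ages = [22, None, 30, 100, None]

mean_filled = impute(ages, strategy="mean")      # gaps filled with about 50.67
median_filled = impute(ages, strategy="median")  # gaps filled with 30
```

The example shows why the choice matters: the single large value pulls the mean well above the typical age, while the median stays at 30.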

Outlier Detection and Treatment

Outliers can significantly skew the results of machine learning models, so identifying and addressing them is an important part of building robust and reliable models. Outliers can arise from measurement errors, data entry mistakes, or genuinely rare but valid observations, and the right treatment depends on which of these applies.

Detection methods range from simple visual inspection to statistical rules such as the interquartile range (IQR) method, which commonly flags values more than 1.5 × IQR below the first quartile or above the third quartile, and the Z-score method, which flags values that lie many standard deviations from the mean. Once detected, outliers can be capped or winsorized (clipped to a chosen limit) or removed, depending on the context and the characteristics of the data.
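The IQR rule and capping can be sketched as follows. This is one possible implementation under stated assumptions: quartiles are computed with `statistics.quantiles` using the `"inclusive"` method, the multiplier `k=1.5` is the conventional default, and the `readings` data is invented for the example. Other quartile conventions give slightly different fences.

```python
from statistics import quantiles


def iqr_bounds(values, k=1.5):
    """Return the (lower, upper) fences Q1 - k*IQR and Q3 + k*IQR."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def cap_outliers(values, k=1.5):
    """Clip values outside the IQR fences to the nearest fence."""
    lower, upper = iqr_bounds(values, k)
    return [min(max(v, lower), upper) for v in values]


# Hypothetical sensor readings with one suspicious value.
readings = [10, 12, 11, 13, 12, 11, 95]

lower, upper = iqr_bounds(readings)  # (8.75, 14.75)
outliers = [v for v in readings if v < lower or v > upper]  # [95]
capped = cap_outliers(readings)      # 95 is replaced by 14.75
```

Capping keeps the row in the dataset while limiting its influence; removal would instead drop the flagged reading entirely.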

Feature Selection

Feature selection reduces the dimensionality of the data and can improve model performance. Keeping only the most relevant features lowers the risk of overfitting and makes the model cheaper to train and run. Choosing which features to keep is often a trade-off between model performance and computational cost.

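Feature selection techniques fall into three broad families: filter methods, which score each feature independently of any model; wrapper methods, which evaluate candidate feature subsets by training a model on them; and embedded methods, which perform selection as part of model training. The choice of method depends on the specific problem and the characteristics of the data. These methods can significantly reduce the number of features, leading to faster training times and potentially better generalization.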
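To make the filter approach concrete, here is a small sketch that ranks features by the absolute Pearson correlation with the target and keeps the top `k`. Correlation is only one of many possible filter scores; the helper names, the toy `features` dictionary, and the `price` target are assumptions for illustration.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        # A constant column has no linear relationship to measure.
        return 0.0
    return cov / sqrt(var_x * var_y)


def select_top_k(features, target, k):
    """Rank features by |correlation with target| and keep the k best names."""
    scores = {name: abs(pearson(col, target)) for name, col in features.items()}
    return sorted(scores, key=scores.get, reverse=True)[:k]


# Hypothetical toy dataset: three candidate features and a target.
features = {
    "size": [1, 2, 3, 4, 5],
    "noise": [3, 1, 4, 1, 5],
    "constant": [7, 7, 7, 7, 7],
}
price = [2, 4, 6, 8, 10]

selected = select_top_k(features, price, k=2)  # ['size', 'noise']
```

Because a filter like this scores each feature on its own, it is cheap to compute but cannot detect features that are only useful in combination, which is where wrapper and embedded methods come in.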

Data Transformation

Data transformation techniques convert data into a form that is more suitable for machine learning algorithms. This can involve scaling, normalization, or other transformations that make patterns easier for the model to learn. Appropriate transformations can improve both model performance and training stability.

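Examples include log transformations, which compress long right tails in skewed distributions; standardization, which rescales a feature to zero mean and unit variance; and normalization, which rescales a feature to a fixed range such as [0, 1]. These transformations address skewed distributions and differing feature scales, both of which can hurt model performance. Which transformation to apply depends on the distribution of each feature and on the assumptions of the chosen algorithm.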
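The sketch below applies a log transformation followed by standardization to a heavily skewed column. It assumes non-negative inputs (so that `log1p` is well defined) and uses the population standard deviation; the function names and the `skewed` data are illustrative only.

```python
from math import log1p
from statistics import mean, pstdev


def log_transform(values):
    """Apply log(1 + x) to compress a long right tail; assumes x >= 0."""
    return [log1p(v) for v in values]


def standardize(values):
    """Shift to zero mean and scale to unit (population) standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        # A constant column cannot be scaled; map it to zeros.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


# Hypothetical right-skewed column spanning four orders of magnitude.
skewed = [1, 10, 100, 1000, 10000]

logged = log_transform(skewed)   # values now span roughly 0.7 to 9.2
z_scores = standardize(logged)   # zero mean, unit standard deviation
```

Applying the log first brings the values onto a comparable scale, so the subsequent standardization is not dominated by the largest observation.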