Prioritizing Fairness and Inclusivity in AI-Powered Learning Platforms

Ensuring Equitable Access to AI-Powered Learning

Educational platforms powered by artificial intelligence offer significant opportunities to make learning more accessible to diverse populations. These systems can customize instruction and provide targeted assistance to meet individual needs. Realizing this potential, however, requires attention to internet access, device availability, and language differences. Neglecting these factors risks widening educational disparities and producing a system in which socioeconomic background shapes learning opportunities more than individual potential does. Comprehensive strategies to overcome these barriers are essential if the benefits of AI are to reach all students.

Building reliable infrastructure, including affordable internet and accessible devices, forms the foundation for equal access. Platforms should also accommodate different learning preferences and language needs through multilingual options and adaptable formats. With this approach, AI learning tools can become genuine instruments of inclusive education that offer equal opportunities to every learner.

Reducing Prejudice in Algorithmic Systems

Machine learning systems are trained on large datasets that may encode societal prejudices, and the resulting models can reproduce or even amplify those biases. In educational platforms, this can lead to unfair treatment such as skewed recommendations, inappropriate or mismatched content, or unequal assessment results. Scrutinizing data sources and algorithm design is therefore critical to minimizing these risks and promoting fairness in education.

To counteract bias, developers should actively seek out varied and representative data, audit algorithms for potential bias, and establish procedures to identify and correct unfair results. Ongoing monitoring and assessment of platform performance are vital for maintaining fairness and pinpointing areas that need improvement.

Enhancing Transparency and Interpretability in AI Systems

Educators and students benefit from understanding how AI learning platforms generate recommendations and decisions. Clear explanations of algorithmic processes build confidence and facilitate better comprehension of the learning experience. Techniques that make AI decision-making more interpretable enable users to follow the system's reasoning and spot potential errors or biases. This capability allows teachers to modify learning paths when necessary, enhancing the overall educational experience.
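
For simple models, one common way to make a recommendation interpretable is to report how much each input contributed to the final score. The sketch below assumes a hypothetical linear "needs extra support" score; the feature names, weights, and intercept are illustrative placeholders, not values from any real platform.

```python
from typing import Dict, List, Tuple

# Hypothetical weights for a linear "needs extra support" score.
# A real platform would learn these from data; these are placeholders.
WEIGHTS: Dict[str, float] = {
    "quiz_average": -0.04,       # higher quiz averages lower the score
    "missed_assignments": 0.30,  # each missed assignment raises the score
    "hours_on_task": -0.10,      # more practice time lowers the score
}
INTERCEPT = 3.0


def explain_score(features: Dict[str, float]) -> Tuple[float, List[Tuple[str, float]]]:
    """Return the score and each feature's contribution (weight * value),
    ordered from most to least influential by absolute size."""
    contributions = {name: WEIGHTS[name] * value for name, value in features.items()}
    score = INTERCEPT + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


if __name__ == "__main__":
    student = {"quiz_average": 65.0, "missed_assignments": 4.0, "hours_on_task": 2.0}
    score, reasons = explain_score(student)
    print(f"score = {score:.2f}")
    for name, contribution in reasons:
        print(f"  {name}: {contribution:+.2f}")
```

For this hypothetical student the quiz average dominates the result, which gives a teacher a concrete reason to check before changing the learning path. Contributions computed this way are exact only for linear models; for nonlinear models, attribution methods such as SHAP approximate the same idea.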

Encouraging Cooperation Between Educators and AI

Artificial intelligence should complement human teachers, not replace them. Effective AI learning environments emphasize collaboration between educators and technology, freeing teachers to focus on personalized guidance, developing students' analytical skills, and offering emotional support. Combining human expertise with AI capabilities produces a richer and more effective educational approach, ensuring that students receive strong support from both human mentors and technological tools.

Creating Ethical Frameworks for Educational AI

The creation and deployment of AI learning platforms require clear ethical guidelines addressing data privacy, algorithmic fairness, transparency, and accountability. A comprehensive ethical framework helps ensure that educational AI technologies are used responsibly, protecting the rights of both learners and educators.

Implementing Accountability and Oversight Processes

Accountability measures and oversight procedures help ensure the ethical and responsible use of AI learning platforms. Independent evaluations of algorithms, datasets, and overall platform performance can reveal and address potential biases or weaknesses. Defining clear responsibilities for platform development and operation promotes transparency and sustains trust in the technology.

Ongoing Assessment of AI Learning Platforms

Continuous evaluation of AI learning systems helps identify and resolve emerging concerns. Regular analysis of platform performance, user feedback, and educational outcomes maintains fairness, inclusivity, and effectiveness. This persistent feedback cycle ensures platforms stay relevant, responsive, and aligned with evolving educational requirements and standards.
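
Part of this regular analysis can be automated. A minimal sketch, assuming each outcome metric (here, hypothetical monthly course-completion rates) is tracked separately per learner group, flags any period that falls noticeably below its recent baseline. The window length and tolerance are arbitrary placeholders a team would tune.

```python
from statistics import mean
from typing import Dict, List, Sequence


def flag_regressions(values: Sequence[float], window: int = 3, tolerance: float = 0.05) -> List[int]:
    """Return the indices of periods whose value falls more than `tolerance`
    below the average of the preceding `window` periods."""
    flagged = []
    for i in range(window, len(values)):
        baseline = mean(values[i - window:i])
        if values[i] < baseline - tolerance:
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    # Hypothetical monthly course-completion rates, tracked per learner group.
    completion_by_group: Dict[str, List[float]] = {
        "group_a": [0.72, 0.74, 0.73, 0.71, 0.64, 0.70],
        "group_b": [0.70, 0.71, 0.69, 0.70, 0.71, 0.70],
    }
    for group, series in completion_by_group.items():
        print(group, flag_regressions(series))
```

Tracking each group separately matters: a decline confined to one group (month 4 for `group_a` here) can be invisible in a platform-wide average.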

Addressing Bias in AI-Driven Assessment and Feedback

Identifying the Origins of Bias

Machine learning systems, especially those trained on massive datasets, can unintentionally absorb and magnify societal prejudices present in the data. Bias can originate from several sources, including historical data that reflects social inequities and limitations or design choices in the algorithms themselves. This is a serious concern because such biases can lead to unjust outcomes in high-stakes areas such as employment screening, loan approvals, and legal proceedings. For instance, if training data consists mainly of images of people with lighter skin tones, the resulting system may process images of people with darker skin less accurately, producing skewed results. Recognizing the possibility of data bias is a crucial step in developing ethical AI systems.

How data is collected strongly influences whether bias is introduced or amplified. When datasets fail to represent the populations a system serves, the resulting AI will likely mirror those gaps. For example, a medical diagnostic model trained primarily on data from one demographic group may be less accurate for patients from other backgrounds, and this lack of diversity in the training data can lead to misdiagnoses and unequal access to appropriate treatment.
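
A first, simple check is to compare how often each group appears in a dataset with its share of the population the system is meant to serve. The sketch below assumes each training record carries a group label and that reference population shares are available (for example from enrollment or census figures); the labels, shares, and 10-percentage-point threshold are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, List


def representation_gaps(samples: Iterable[str], population_shares: Dict[str, float]) -> Dict[str, float]:
    """For each group, return (share of the dataset) minus (share of the population).
    Negative values mean the group is under-represented in the data."""
    counts = Counter(samples)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("dataset is empty")
    return {group: counts[group] / total - expected for group, expected in population_shares.items()}


def under_represented(gaps: Dict[str, float], threshold: float = 0.10) -> List[str]:
    """Groups whose dataset share falls short of their population share by more than `threshold`."""
    return [group for group, gap in gaps.items() if gap < -threshold]


if __name__ == "__main__":
    # Hypothetical group labels for 100 training records and reference population shares.
    samples = ["A"] * 70 + ["B"] * 25 + ["C"] * 5
    population = {"A": 0.50, "B": 0.30, "C": 0.20}
    gaps = representation_gaps(samples, population)
    print({group: round(gap, 2) for group, gap in gaps.items()})
    print(under_represented(gaps))
```

Group C makes up 20% of the hypothetical population but only 5% of the data, so it is flagged. A balanced count is necessary but not sufficient: the records for each group also need to be of comparable quality and labeled consistently.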

Counteracting Bias in AI Development

Combating bias in artificial intelligence requires a comprehensive strategy involving careful data selection, thoughtful algorithm design, and continuous evaluation. A critical first step is to examine the training data thoroughly to identify potential sources of bias, which includes ensuring that datasets adequately represent the populations the system will serve. Algorithms should also be designed to recognize and compensate for biases in the data, for example through fairness constraints during training or techniques that detect and correct biased outputs.
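
Fairness constraints are usually built into a model's training procedure and depend on the learning library used. A simpler data-level correction that illustrates compensating for biased data is reweighing, a pre-processing technique described by Kamiran and Calders: each training example receives a weight so that, in the weighted data, group membership and the outcome label are statistically independent. The groups and outcomes below are hypothetical.

```python
from collections import Counter
from typing import List, Sequence


def reweigh(groups: Sequence[str], labels: Sequence[int]) -> List[float]:
    """Per-example weights w(g, y) = P(g) * P(y) / P(g, y).

    Group/label combinations that are rarer than independence would predict
    get weights above 1; over-represented combinations get weights below 1.
    """
    if len(groups) != len(labels) or not groups:
        raise ValueError("groups and labels must be non-empty and equal in length")
    n = len(groups)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    # P(g) * P(y) / P(g, y) simplifies to count(g) * count(y) / (n * count(g, y)).
    return [
        group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


if __name__ == "__main__":
    # Hypothetical historical data: label 1 = student was placed in an advanced course.
    groups = ["A"] * 6 + ["B"] * 4
    labels = [1, 1, 1, 1, 0, 0] + [1, 0, 0, 0]
    weights = reweigh(groups, labels)
    for group in ("A", "B"):
        idx = [i for i, g in enumerate(groups) if g == group]
        positive = sum(weights[i] for i in idx if labels[i] == 1)
        total = sum(weights[i] for i in idx)
        print(group, "weighted placement rate:", round(positive / total, 2))
```

Unweighted, the placement rates are 0.67 for group A and 0.25 for group B; after reweighing, both are 0.5. The weights are then passed to a learner that supports per-example weights (many scikit-learn estimators accept a `sample_weight` argument in `fit`). Reweighing balances the training labels only, so the trained model's outputs should still be checked across groups.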

Consistent, rigorous testing is essential for discovering and correcting biases as they emerge. This involves evaluating system performance across different demographic groups and situations to confirm that results are accurate and equitable for all users. Monitoring outputs over time also helps identify biases that were not apparent during initial training. The ultimate objective is AI systems that serve everyone fairly and equitably.
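
A minimal sketch of evaluating performance across groups, assuming an evaluation set in which each example has a group label, a reference outcome, and the system's prediction (all hypothetical here): compute accuracy separately for each group and report the largest gap.

```python
from collections import defaultdict
from typing import Dict, Sequence


def accuracy_by_group(groups: Sequence[str], y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy computed separately for each demographic group."""
    if not (len(groups) == len(y_true) == len(y_pred)):
        raise ValueError("inputs must have the same length")
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for group, truth, prediction in zip(groups, y_true, y_pred):
        total[group] += 1
        correct[group] += int(truth == prediction)
    return {group: correct[group] / total[group] for group in total}


if __name__ == "__main__":
    # Hypothetical data: y_true = a human grader's pass/fail decision,
    # y_pred = an automated grader's decision on the same work.
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    y_true = [1, 0, 1, 0, 1, 0, 1, 0]
    y_pred = [1, 0, 1, 0, 0, 0, 1, 1]
    scores = accuracy_by_group(groups, y_true, y_pred)
    print(scores)
    print("largest gap:", max(scores.values()) - min(scores.values()))
```

Overall accuracy in this example is 75%, which hides the fact that the automated grader agrees with the human grader only half the time for group B. Accuracy can also mask differences in the kinds of errors made, so in practice false-positive and false-negative rates are often compared per group in the same way.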

Methods for Identifying and Correcting Bias

Various techniques exist for detecting and addressing bias in AI systems. One approach uses fairness metrics to quantify bias in system outputs. These metrics compare results across demographic groups, for example whether groups receive favorable outcomes at similar rates (demographic parity) or experience similar error rates (equalized odds), to identify disparities and guide corrective measures. In addition, auditing the entire AI development process helps ensure that bias is considered and mitigated at each stage, from data gathering to deployment.
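
Two widely used fairness metrics can be computed directly from a system's decisions and each person's group label: the demographic parity difference (highest minus lowest rate of favorable outcomes across groups) and the disparate impact ratio (lowest rate divided by highest). The sketch below uses hypothetical course-recommendation data.

```python
from collections import defaultdict
from typing import Dict, Sequence


def selection_rates(groups: Sequence[str], decisions: Sequence[int]) -> Dict[str, float]:
    """Share of favorable decisions (1s) within each group."""
    if len(groups) != len(decisions):
        raise ValueError("groups and decisions must have the same length")
    favorable: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for group, decision in zip(groups, decisions):
        total[group] += 1
        favorable[group] += decision
    return {group: favorable[group] / total[group] for group in total}


def demographic_parity_difference(rates: Dict[str, float]) -> float:
    """Largest minus smallest selection rate; 0 means equal rates."""
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(rates: Dict[str, float]) -> float:
    """Smallest divided by largest selection rate; 1 means equal rates."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group receives favorable decisions, so the rates are equal
    return min(rates.values()) / highest


if __name__ == "__main__":
    # Hypothetical recommendations for an advanced course (1 = recommended).
    groups = ["A"] * 10 + ["B"] * 10
    decisions = [1] * 6 + [0] * 4 + [1] * 3 + [0] * 7
    rates = selection_rates(groups, decisions)
    print(rates)
    print("parity difference:", round(demographic_parity_difference(rates), 2))
    print("impact ratio:", round(disparate_impact_ratio(rates), 2))
```

Here the rates are 0.6 and 0.3, giving a parity difference of 0.3 and an impact ratio of 0.5. A ratio below 0.8 is often treated as a warning sign, a heuristic borrowed from the "four-fifths rule" in US employment guidelines. Demographic parity ignores whether decisions are correct, which is why it is usually reported alongside error-rate comparisons.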

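Another strategy draws on adversarial methods. In adversarial debiasing, the main model is trained alongside a second model, the adversary, which tries to predict a protected attribute such as gender or ethnicity from the main model's outputs; the main model is penalized whenever the adversary succeeds, pushing its decisions to carry less information about that attribute. Related approaches train or test the system on examples specifically constructed to reveal bias, so that it learns to adjust its decision-making. Combined with continuous monitoring, these methods are essential for developing AI systems that are both accurate and fair.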
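
Full adversarial training requires a training loop in a machine learning framework, so it is not reproduced here. The sketch below illustrates the simpler related idea of probing a model with inputs designed to expose bias: for each record it builds a counterfactual copy that differs only in a protected attribute and counts how often the decision changes. `toy_model` is a hypothetical, deliberately biased stand-in, and the records are invented.

```python
from typing import Any, Callable, Dict, List, Tuple

Record = Dict[str, Any]


def counterfactual_flip_rate(
    model: Callable[[Record], int],
    records: List[Record],
    attribute: str,
    values: Tuple[str, str],
) -> float:
    """Fraction of records whose decision changes when `attribute` is swapped
    between the two given values while every other field is held fixed.
    Each record is assumed to hold one of the two values."""
    if not records:
        raise ValueError("no records to test")
    first, second = values
    changed = 0
    for record in records:
        counterpart = dict(record)
        counterpart[attribute] = second if record[attribute] == first else first
        if model(counterpart) != model(record):
            changed += 1
    return changed / len(records)


def toy_model(record: Record) -> int:
    """Deliberately biased stand-in model: it applies a stricter cutoff to group B."""
    cutoff = 70 if record["group"] == "A" else 75
    return int(record["score"] >= cutoff)


if __name__ == "__main__":
    # Hypothetical learner records.
    records: List[Record] = [
        {"group": "A", "score": 72},
        {"group": "B", "score": 72},
        {"group": "A", "score": 80},
        {"group": "B", "score": 60},
    ]
    print(counterfactual_flip_rate(toy_model, records, "group", ("A", "B")))
```

Half of these decisions change when only the group label changes, which exposes the model's direct dependence on the attribute. A flip rate of zero is not proof of fairness, however: a model can still discriminate through proxy features, such as a postal code, that correlate with the protected attribute.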

Developing and applying these strategies is vital to ensuring that AI systems operate fairly and do not reinforce or exaggerate existing societal prejudices. This is an ongoing process that demands constant attention and adaptation as new information and insights emerge.

Promoting Human-Centered Design in AI-Enhanced Learning Environments

Focusing on User Requirements

Human-centered design emphasizes understanding and addressing end users' needs, preferences, and constraints. It involves users actively throughout the design process, from initial research to final testing. By focusing on the people who will use a product or service, designers can develop solutions that genuinely meet their needs and deliver effective results.

A deep understanding of user requirements is the foundation of successful design. This goes beyond collecting basic demographic information and stated preferences; it requires thorough qualitative research, including interviews and usability testing, to uncover the motivations, challenges, and goals that shape user behavior.

Understanding Diverse User Perspectives

A crucial element of human-centered design involves recognizing and valuing user diversity. This includes considering various backgrounds, abilities, cultures, and experiences. Designers should create inclusive solutions that serve everyone's needs, not just a specific subset of users.

Acknowledging and appreciating diverse viewpoints is fundamental for developing equitable and accessible products. Including individuals from different backgrounds in the design process helps avoid biases and ensures the final product appeals to a wider audience.

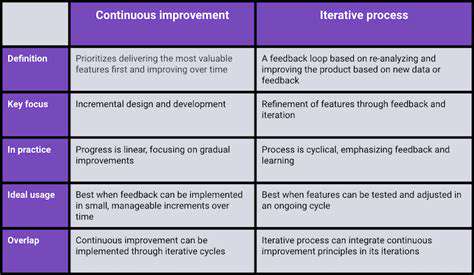

Cyclical Design and Evaluation

Human-centered design follows an iterative approach. It relies on continuous feedback cycles in which designers test their concepts with users, gather responses, and refine the design accordingly. With each cycle, the design more closely meets user needs.

This cycle of testing and refinement is essential for identifying potential problems and making necessary improvements. Incorporating user feedback at each stage enables designers to create solutions that are not only well conceived but also highly usable.

Teamwork and Information Sharing

Successful human-centered design depends heavily on collaboration and clear communication. Designers must work closely with all stakeholders, including engineers, marketing professionals, and project managers, to keep everyone aligned on shared objectives. Open communication channels are crucial for keeping all participants informed and coordinated.

Clear and consistent communication promotes understanding and commitment across the project team. This cooperative approach helps maintain project focus and ensures the final product aligns with overall goals and vision.

Ethical Aspects of Design

Human-centered design must always consider the ethical consequences of design choices. Designers should remain aware of potential biases, unintended effects, and the broader societal impact of their work. They should prioritize solutions that enhance well-being and avoid causing harm.

Ethical considerations form an essential component of the design process. This includes protecting user data privacy and security, encouraging inclusivity, and avoiding products that reinforce existing inequalities. Designers bear responsibility for considering the wider social and environmental implications of their creations.