Challenges and Considerations in Robotic RL

Data Processing Complexities

Large datasets strain both memory and processing time. When data volume exceeds what a system can hold or process efficiently, performance degrades and jobs may fail outright. Choosing data structures that match the access pattern, and using packages optimized for data manipulation, can substantially improve processing speed and resource utilization, while sound structural choices help preserve data integrity throughout the analytical workflow.

Preprocessing is another critical phase, because data quality directly determines the validity of any subsequent analysis. Incomplete records need handling that suits the characteristics of the data and the goals of the analysis; there is no single correct treatment. Quality checks should identify and resolve inconsistencies, anomalies, and formatting issues that could compromise the reliability of results. These preparatory steps form the foundation for accurate, meaningful outcomes.

Model Selection and Analytical Rigor

Choosing an appropriate analytical model demands careful consideration of data properties and research objectives. The available statistical tools differ in capability, and each rests on its own assumptions and theoretical foundations. A poorly chosen model can produce misleading outputs and may invalidate the entire analysis. Understanding the underlying assumptions is therefore essential for aligning methodological choices with the data and the goals of the study.

Interpreting analytical outputs requires both statistical expertise and contextual knowledge. Effective analysis examines several dimensions of a model's results, including significance measures, uncertainty ranges, and practical implications. Superficial interpretation risks erroneous conclusions that could misguide later decisions or research directions. A structured evaluation framework helps ensure that findings accurately reflect the underlying phenomena.

Package Management Complexities

R's extensive package ecosystem offers powerful specialized functionality but introduces dependency management challenges. Version conflicts and compatibility issues can emerge, particularly in complex projects utilizing multiple interdependent packages. Strategic package selection and version control practices help maintain system stability and reproducibility across different computing environments.

Documentation quality and community support significantly influence package usability and implementation success. Clear usage guidelines and active developer communities facilitate effective troubleshooting and optimal implementation. Developing proficiency in diagnosing and resolving package-related issues represents a valuable skill set for maintaining productive analytical workflows.

Computational Optimization Strategies

Computational demands vary substantially based on analytical complexity and dataset scale. System resource awareness enables realistic planning and prevents performance bottlenecks. For particularly intensive analyses, leveraging distributed computing resources or cloud-based solutions may become necessary to achieve practical processing times.

Code optimization techniques offer substantial performance improvements through strategic implementation choices. Vectorization, algorithmic efficiency, and memory management practices can dramatically reduce execution times while maintaining analytical rigor. These optimization approaches become increasingly valuable as analysis complexity and data volumes grow.
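As one illustration of the memory-management point, the sketch below computes a mean and variance in a single pass using Welford's online algorithm, so the full dataset never has to be held in memory. The function name `streaming_mean_variance` and the sample readings are hypothetical, chosen only for illustration.

```python
from typing import Iterable, Tuple


def streaming_mean_variance(values: Iterable[float]) -> Tuple[int, float, float]:
    """Return (count, mean, sample variance) in one pass using Welford's algorithm."""
    count = 0
    mean = 0.0
    m2 = 0.0  # running sum of squared deviations from the current mean
    for x in values:
        count += 1
        delta = x - mean
        mean += delta / count
        m2 += delta * (x - mean)
    variance = m2 / (count - 1) if count > 1 else 0.0
    return count, mean, variance


# A generator yields one value at a time, so memory use stays constant
# regardless of how many readings are processed.
readings = (float(i) for i in range(1, 6))  # illustrative data: 1.0 .. 5.0
count, mean, variance = streaming_mean_variance(readings)
print(count, mean, variance)
```

The same pattern applies when the values come from a file or sensor stream rather than an in-memory range: only the three running totals are kept.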

Real-World Applications and Future Directions

Industrial and Commercial Implementations

Reinforcement learning (RL) is changing industrial operations by enabling adaptive automation. Practical applications span multiple sectors, addressing challenges in manufacturing, distribution, and service delivery. In logistics, RL-enabled systems dynamically optimize routing, inventory management, and material handling, responding to real-time operational variables that conventionally programmed systems struggle to accommodate. These adaptive capabilities are particularly valuable in environments with frequent variability and unexpected disruptions.

Human-robot collaboration represents another area of significant advancement. Through RL, collaborative robotic systems develop nuanced interaction protocols that enhance workplace safety while maintaining operational efficiency. This proves especially beneficial in complex assembly processes where human dexterity combines with robotic precision to achieve superior manufacturing outcomes.

Advanced Mobility and Dexterity

Navigation and manipulation capabilities benefit substantially from RL's adaptive learning approach. Systems can develop movement strategies that account for dynamic environmental factors and unexpected obstacles. Beyond basic mobility, RL supports refined object interaction skills: grasping irregular shapes, applying appropriate force levels, and performing delicate assembly operations with a precision that approaches human dexterity.

This adaptive capability proves invaluable in unstructured environments where pre-programmed solutions lack the flexibility to handle real-world variability effectively.
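To make the idea of a learned movement strategy concrete, here is a minimal sketch of tabular Q-learning on a toy one-dimensional corridor. The environment, reward values, and hyperparameters are all assumptions made for illustration; real robotic navigation involves continuous states and far richer dynamics.

```python
import random

N_STATES = 5          # corridor cells 0..4; cell 4 is the goal (assumed layout)
ACTIONS = (-1, +1)    # index 0 moves left, index 1 moves right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.1  # illustrative hyperparameters


def step(state, action_index):
    """Deterministic toy dynamics: move one cell, clipped to the corridor."""
    next_state = min(max(state + ACTIONS[action_index], 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else -0.01  # small step cost encourages short paths
    return next_state, reward, done


def train(episodes=500, max_steps=50, seed=0):
    """Run epsilon-greedy Q-learning and return the learned Q-table."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            if rng.random() < EPSILON:
                action = rng.randrange(2)  # explore with a random action
            else:
                action = max((0, 1), key=lambda a: q[state][a])  # exploit
            next_state, reward, done = step(state, action)
            # No bootstrapping from the terminal state.
            target = reward if done else reward + GAMMA * max(q[next_state])
            q[state][action] += ALPHA * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


q_table = train()
# Greedy action per non-terminal cell (1 means "move right", toward the goal).
greedy_policy = [max((0, 1), key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
print(greedy_policy)
```

Nothing in the code tells the agent which direction the goal lies in; the preference for moving right emerges from the reward signal alone, which is the property that makes the approach attractive when the environment cannot be specified in advance.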

Environmental Exploration and Analysis

Autonomous exploration systems leverage RL to efficiently map and analyze uncharted territories. These systems develop intelligent survey strategies that maximize information gain while minimizing resource expenditure. Applications range from planetary exploration to hazardous environment inspection, where autonomous adaptability significantly enhances mission success probabilities.
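The trade-off between information gain and resource expenditure can be sketched as a simple scoring rule. The example below is a hand-written greedy heuristic, not a learned policy; it only illustrates the kind of objective such a system balances. The `Candidate` type, the viewpoint names, and all numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    expected_gain: float  # e.g. unknown map cells expected to be revealed
    travel_cost: float    # e.g. energy or time needed to reach the viewpoint


def pick_next_viewpoint(candidates, cost_weight=1.0):
    """Greedy choice: maximize expected gain minus weighted travel cost."""
    return max(candidates, key=lambda c: c.expected_gain - cost_weight * c.travel_cost)


candidates = [
    Candidate("ridge", expected_gain=40.0, travel_cost=30.0),
    Candidate("crater_rim", expected_gain=25.0, travel_cost=5.0),
    Candidate("plain", expected_gain=10.0, travel_cost=2.0),
]
best = pick_next_viewpoint(candidates)
print(best.name)
```

Setting `cost_weight` to zero makes the rule ignore travel cost and chase the largest gain, which shows how strongly the weighting shapes the resulting survey strategy. In an RL formulation, a similar trade-off would typically be encoded in the reward rather than fixed by hand.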

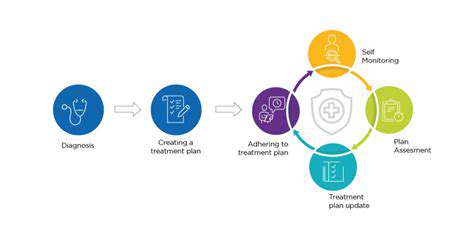

Healthcare Innovations

The medical field stands to benefit substantially from RL-enabled robotic systems. Surgical assistance applications demonstrate particular promise, where adaptive systems can compensate for biological variability and enhance procedural precision. Rehabilitation robotics represents another impactful application area, with systems tailoring therapeutic interventions to individual patient responses and recovery trajectories.

Consider an adaptive therapy system that continuously adjusts treatment parameters based on real-time patient feedback and progress metrics. Such a personalized approach could improve rehabilitation outcomes while reducing discomfort and shortening recovery timelines.

Adaptive System Capabilities

RL's fundamental strength lies in its capacity to accommodate changing operational conditions. Systems can modify behaviors in response to environmental shifts, equipment variations, or task requirement changes. This dynamic adaptability proves essential for maintaining performance in real-world settings where static programmed solutions often fail.

This flexibility lets systems handle operational anomalies, from sudden equipment failures to unexpected environmental changes, with minimal disruption to overall functionality.
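One standard mechanism behind this kind of adaptation is to weight recent experience more heavily than old experience when estimating an action's value. The sketch below compares a plain sample average with a constant step-size update on a reward stream that changes halfway through. The scenario and the step size of 0.1 are assumptions for illustration.

```python
def sample_average(rewards):
    """Estimate that weights every past reward equally."""
    estimate = 0.0
    for n, r in enumerate(rewards, start=1):
        estimate += (r - estimate) / n
    return estimate


def constant_step(rewards, alpha=0.1):
    """Estimate that weights recent rewards more heavily (exponential recency)."""
    estimate = 0.0
    for r in rewards:
        estimate += alpha * (r - estimate)
    return estimate


# Hypothetical scenario: an action pays 1.0 for 100 steps, then conditions
# change (say, a worn gripper) and it pays 0.0 for the next 100 steps.
rewards = [1.0] * 100 + [0.0] * 100
avg_estimate = sample_average(rewards)
recent_estimate = constant_step(rewards)
print(round(avg_estimate, 3), round(recent_estimate, 3))
```

The sample average still reflects the old conditions long after they have ended, whereas the constant step-size estimate tracks the new reward level. The cost is that the latter never fully settles, so the step size trades responsiveness against noise sensitivity.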

Safety-Centric Design

Operational safety represents a critical consideration in RL system development. Through careful reward structuring and constraint integration, systems learn to prioritize safe operation while pursuing performance objectives. This balance proves particularly important in applications involving close human interaction or operation in sensitive environments.

Safety protocols embedded within the learning framework help systems develop behavior that is not only effective but also responsible and appropriate for the operational context.
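Reward structuring and constraint integration can be sketched as two separate pieces: a soft penalty folded into the reward, and a hard limit applied to whatever action the policy proposes. The thresholds, penalty weight, and function names below are assumptions chosen for illustration and are not drawn from any safety standard.

```python
MIN_SAFE_DISTANCE_M = 0.5   # assumed safety threshold, not from any standard
MAX_SPEED_NEAR_HUMAN = 0.1  # assumed speed cap (m/s) inside the safety zone
SAFETY_PENALTY = 10.0       # assumed penalty weight


def shaped_reward(task_progress, human_distance_m):
    """Task reward minus a penalty that grows with intrusion into the safety zone."""
    intrusion = max(0.0, MIN_SAFE_DISTANCE_M - human_distance_m)
    return task_progress - SAFETY_PENALTY * intrusion


def shield_speed(commanded_speed, human_distance_m):
    """Hard constraint applied after the policy: cap speed near a person."""
    if human_distance_m < MIN_SAFE_DISTANCE_M:
        return min(commanded_speed, MAX_SPEED_NEAR_HUMAN)
    return commanded_speed


print(shaped_reward(1.0, 2.0), shaped_reward(1.0, 0.2))
print(shield_speed(0.8, 2.0), shield_speed(0.8, 0.2))
```

The penalty only discourages unsafe behavior during learning, so a policy may still violate it while exploring. The separate speed cap is there because a hard limit holds regardless of what the policy has learned so far.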

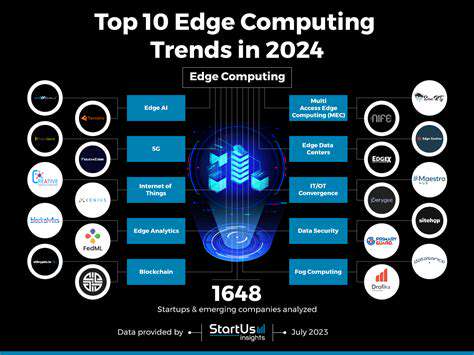

Emerging Opportunities and Open Challenges

The RL field continues to evolve rapidly, with ongoing research addressing key limitations and expanding application possibilities. Improving sample efficiency and generalization capabilities remains a priority for enhancing practical usability. Integration with complementary AI disciplines like computer vision and natural language processing opens new avenues for sophisticated multimodal systems. Ethical and regulatory considerations are gaining prominence as autonomous systems become more capable and widely deployed.

Current investigations focus on developing more data-efficient learning paradigms and improving cross-domain adaptability. These advancements aim to make RL solutions more accessible and practical for diverse real-world applications while ensuring responsible development and deployment practices.