Developing Fair and Equitable AI Algorithms

Defining Fairness in AI

Fairness in artificial intelligence (AI) is a multifaceted concept that goes beyond simply avoiding explicit bias. It covers a broad range of considerations, including ensuring that AI systems treat all individuals equitably, regardless of characteristics such as race, gender, age, or socioeconomic background. This involves understanding and mitigating biases that can enter through the data used to train AI models and through the design of the algorithms themselves. A crucial component of fairness is ensuring that the outcomes of AI systems are just and do not perpetuate existing societal inequalities.

A truly fair AI system must consider the diverse needs and experiences of all individuals. This requires a nuanced understanding of the potential impacts of AI on different groups and a commitment to ongoing evaluation and adaptation to ensure that systems remain equitable over time.

Data Bias and its Impact

AI systems learn from data, and if that data reflects existing societal biases, the resulting system is likely to perpetuate them. For example, if a dataset used to train a facial recognition system consists predominantly of images of people of a particular race or gender, the system may be markedly less accurate for individuals from other groups. Biases of this kind can have serious consequences when AI is used for high-stakes decisions, leading to discriminatory outcomes in areas such as loan approvals, hiring, and criminal justice.

Addressing data bias requires careful data collection and curation practices. It also necessitates the development of techniques to identify and mitigate biases in existing datasets. This is a critical step in creating truly fair and equitable AI systems.
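As a rough illustration of what identifying and mitigating dataset bias can look like in practice, the Python sketch below does two things. `per_group_accuracy` measures a model's accuracy separately for each group, which is how a gap like the facial recognition example would surface. `reweighing_weights` implements reweighing (Kamiran and Calders), one established pre-processing technique that weights each training example by P(group) × P(label) / P(group, label) so that group membership and label become statistically independent in the weighted data. The function names and toy data are hypothetical, and reweighing is only one of several possible mitigation strategies.

```python
from collections import Counter, defaultdict


def per_group_accuracy(groups, y_true, y_pred):
    """Return accuracy computed separately for each group label."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for g, t, p in zip(groups, y_true, y_pred):
        total[g] += 1
        correct[g] += int(t == p)
    return {g: correct[g] / total[g] for g in total}


def reweighing_weights(groups, labels):
    """Reweighing: weight each example by P(group) * P(label) / P(group, label).

    Combinations of group and label that are rarer than independence would
    predict get weights above 1; over-represented combinations get weights below 1.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    # Algebraically equal to (n_g / n) * (n_y / n) / (n_gy / n).
    return [
        group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


# Toy, hypothetical data: group labels, true labels, and model predictions.
groups = ["a", "a", "a", "a", "b", "b"]
y_true = [1, 0, 1, 1, 0, 1]
y_pred = [1, 0, 1, 1, 1, 0]
print(per_group_accuracy(groups, y_true, y_pred))  # {'a': 1.0, 'b': 0.0}
print(reweighing_weights(groups, y_true))
```

In the toy data, group `b` is both underrepresented and misclassified. The resulting weights could be passed to any training routine that accepts per-sample weights, so that the model sees a more balanced picture without discarding data.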

Algorithmic Transparency and Explainability

Understanding how AI systems arrive at their decisions is crucial for ensuring fairness. Opaque algorithms, whose decision-making processes are not readily understandable, can lead to mistrust and a lack of accountability. If users cannot understand why a specific decision was made, it is difficult to identify and correct biases or errors. A lack of transparency can also create a perception of unfairness, even when the algorithm is not actually biased.

Making AI algorithms more transparent and explainable is essential for building trust and ensuring that decisions are made in a fair and equitable manner.
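For the simplest case, a linear scoring model, a decision can be explained exactly: the difference between an applicant's score and the score of a reference (baseline) applicant splits into one term per feature, w_i × (x_i - b_i). The sketch below assumes a hypothetical loan-scoring model with made-up feature names, weights, and baseline values; it is an illustration for linear models only, not a general explainability method. For non-linear models, post-hoc attribution tools such as SHAP or LIME pursue a similar goal.

```python
def explain_linear_decision(weights, bias, features, baseline):
    """Split a linear score into per-feature contributions relative to a baseline.

    For score = bias + sum(w_i * x_i), the score difference between `features`
    and `baseline` equals sum(w_i * (x_i - baseline_i)), so each term shows how
    much that feature pushed this particular decision up or down.
    """
    contributions = {
        name: weights[name] * (features[name] - baseline[name]) for name in weights
    }
    score = bias + sum(weights[name] * features[name] for name in weights)
    # Rank features by the size of their effect, largest first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


# Hypothetical loan-scoring model and applicant (illustrative values only).
weights = {"income": 0.5, "debt_ratio": -2.0, "years_employed": 0.1}
baseline = {"income": 4.0, "debt_ratio": 0.3, "years_employed": 5.0}
applicant = {"income": 3.0, "debt_ratio": 0.6, "years_employed": 2.0}

score, ranked = explain_linear_decision(weights, -1.0, applicant, baseline)
for name, contribution in ranked:
    print(f"{name}: {contribution:+.2f}")
```

Here the explanation would tell the applicant that their debt ratio lowered the score more than any other factor, which is the kind of reason a user or auditor can check and contest.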

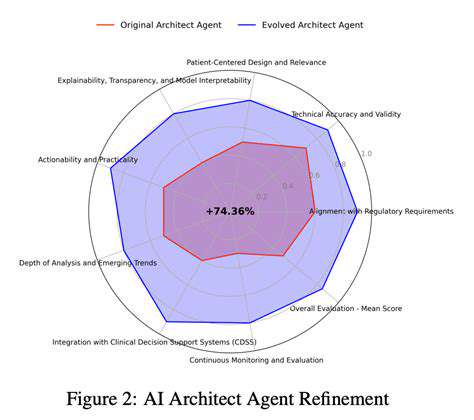

Evaluating and Monitoring AI Systems

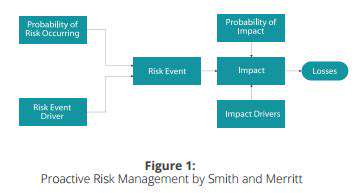

Fairness in AI is not a one-time fix. It requires ongoing evaluation and monitoring to ensure that AI systems continue to operate fairly over time. As societal contexts and demographics evolve, a system that was fair when deployed can drift, so it must be updated and recalibrated to keep bias from re-emerging. This capacity to adapt is critical to the long-term effectiveness of AI systems.

Regular audits and evaluations can help identify potential issues and areas where adjustments are needed. These evaluations should involve diverse stakeholders and consider the potential impacts on various groups.
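One way to make regular audits concrete is to recompute a simple group fairness metric on each new batch of decisions. The sketch below, assuming binary favorable/unfavorable decisions and known group labels, computes each group's selection rate and the disparate impact ratio (lowest rate divided by highest), flagging batches that fall below 0.8. That threshold mirrors the "four-fifths rule" from US employment selection guidelines; it is a heuristic rather than a definitive test, and the weekly batches here are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AuditResult:
    selection_rates: dict  # group label -> share of favorable decisions
    disparate_impact: float  # lowest selection rate / highest selection rate
    flagged: bool  # True when the ratio falls below the threshold


def audit_batch(groups, decisions, threshold=0.8):
    """Compare favorable-decision rates across groups for one batch.

    `decisions` holds 1 for a favorable outcome (e.g. approved) and 0 otherwise.
    """
    totals, positives = {}, {}
    for g, d in zip(groups, decisions):
        totals[g] = totals.get(g, 0) + 1
        positives[g] = positives.get(g, 0) + d
    rates = {g: positives[g] / totals[g] for g in totals}
    highest = max(rates.values())
    # If no group received any favorable decisions, there is no disparity to measure.
    ratio = min(rates.values()) / highest if highest > 0 else 1.0
    return AuditResult(rates, ratio, ratio < threshold)


# Hypothetical weekly batches of (group labels, decisions) from a deployed model.
weekly_batches = [
    (["a", "a", "b", "b"], [1, 1, 1, 1]),
    (["a", "a", "b", "b"], [1, 1, 1, 0]),
]
for week, (batch_groups, batch_decisions) in enumerate(weekly_batches, start=1):
    result = audit_batch(batch_groups, batch_decisions)
    print(week, result.selection_rates, result.disparate_impact, result.flagged)
```

A single metric cannot capture every notion of fairness, so a flagged batch is best treated as a prompt for review by the diverse stakeholders mentioned above rather than as a verdict.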

Ethical Frameworks for AI Development

The development of ethical frameworks and guidelines is critical for ensuring that AI systems are designed and deployed with fairness and equity in mind. These frameworks should incorporate principles of fairness, transparency, accountability, and privacy. They should also address the potential societal impacts of AI, aiming to mitigate harm and maximize benefits for all.

Establishing clear ethical standards and guidelines can significantly enhance the trustworthiness and reliability of AI systems and help create a more just and equitable future.

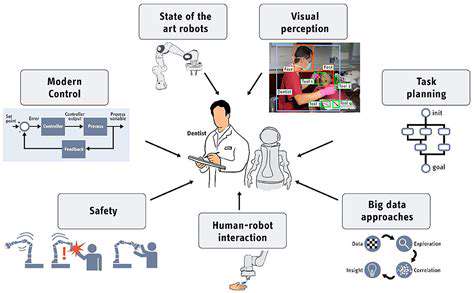

The Role of Human Oversight

While AI systems have the potential to automate many tasks and improve efficiency, human oversight remains crucial in ensuring fairness. Human intervention can be vital in identifying and rectifying biases that may arise in AI systems, as well as in addressing situations where the AI system makes decisions with undesirable outcomes. Ultimately, humans are responsible for the ethical and equitable implementation of AI.

Human expertise and judgment are essential for interpreting the outcomes of AI systems and ensuring that they are aligned with human values and societal goals. This partnership between humans and AI is critical for achieving a fair and equitable future.
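Human oversight is often put into practice by letting a model decide only when it is confident and deferring borderline cases to a person. The sketch below assumes a model that outputs a probability of a favorable outcome; the `OversightQueue` class, its thresholds (0.35 and 0.65), and the case IDs are hypothetical choices for illustration, and real thresholds would likely need to be tuned and revisited for each application.

```python
from dataclasses import dataclass, field


@dataclass
class OversightQueue:
    """Hypothetical human-in-the-loop router: confident scores are automated,
    borderline scores are queued for a human reviewer."""

    lower: float = 0.35  # at or below this probability, deny automatically
    upper: float = 0.65  # at or above this probability, approve automatically
    pending: list = field(default_factory=list)  # (case_id, probability) awaiting review
    decisions: dict = field(default_factory=dict)  # case_id -> human decision

    def route(self, case_id, probability):
        if probability >= self.upper:
            return "approve"
        if probability <= self.lower:
            return "deny"
        self.pending.append((case_id, probability))
        return "human_review"

    def resolve(self, case_id, human_decision):
        """Record a reviewer's decision and remove the case from the queue."""
        self.pending = [(cid, p) for cid, p in self.pending if cid != case_id]
        self.decisions[case_id] = human_decision


queue = OversightQueue()
print(queue.route("case-1", 0.90))  # approve
print(queue.route("case-2", 0.10))  # deny
print(queue.route("case-3", 0.50))  # human_review
queue.resolve("case-3", "approve")
```

Keeping a record of human decisions also makes it possible to audit where reviewers and the model disagree, which can reveal biases the model has not yet been corrected for.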