Deep learning has changed how data science projects approach feature engineering. Rather than relying on time-consuming manual feature selection, deep learning models can learn useful representations directly from raw, often unstructured data. This shift matters most for datasets where hand-crafted features are hard to design and traditional analytical approaches break down.

Automated feature extraction uses deep learning architectures to uncover relationships that human analysts may miss. This is especially useful for high-dimensional data, where conventional techniques frequently underperform. Being able to process raw inputs without extensive preprocessing gives researchers considerable analytical flexibility.

Data Representation and Preprocessing

Effective data preparation forms the foundation for successful feature extraction. Before data is fed into a neural network, it must be formatted and cleaned with care. Standard techniques include categorical encoding (for example, one-hot encoding) for discrete variables and scaling methods such as standardization or min-max normalization for continuous measurements. These steps help mitigate common problems, such as features with large numeric ranges dominating the others or heavily skewed distributions, either of which can degrade model performance.

The choice of normalization often has a large effect on the quality of the features learned afterward. Handling missing values appropriately (for example, by imputation or by dropping incomplete records) and selecting a suitable scaling method can substantially improve learned representations. Data scientists must balance computational efficiency against information preservation during these preliminary stages.
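The preparation steps described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a recommended pipeline: the helper names (`impute_mean`, `standardize`, `one_hot`) and the sample values are hypothetical, and mean imputation with z-score scaling is only one of several reasonable choices.

```python
from statistics import mean, pstdev

def impute_mean(values):
    """Replace None entries with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    fill = mean(observed)
    return [fill if v is None else v for v in values]

def standardize(values):
    """Rescale to zero mean and unit (population) standard deviation."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        # A constant column carries no scale information; map it to zeros.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]

def one_hot(labels):
    """Encode discrete labels as 0/1 indicator rows; columns follow sorted category order."""
    categories = sorted(set(labels))
    rows = [[1 if label == c else 0 for c in categories] for label in labels]
    return rows, categories

# Hypothetical sample data: one continuous column with a gap, one categorical column.
ages = [20.0, None, 40.0]
colors = ["red", "blue", "red"]

ages_imputed = impute_mean(ages)
ages_scaled = standardize(ages_imputed)
color_rows, color_names = one_hot(colors)
```

Standardizing after imputation keeps the filled-in value at exactly zero, which is one reason mean imputation and z-scoring are often paired. Other imputation strategies would shift the scaled values differently.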

Neural Network Architectures for Feature Learning

The machine learning community has developed specialized network architectures tailored to different data types. Image processing applications frequently employ convolutional neural networks (CNNs), which excel at detecting spatial patterns. Sequential data analysis benefits from recurrent neural networks (RNNs), which capture temporal dependencies. The choice of architecture should align with both the data characteristics and the specific analytical objectives.

Multilayer neural networks demonstrate particular effectiveness for hierarchical feature discovery. Their capacity to identify and combine features at multiple abstraction levels enables sophisticated pattern recognition that simpler models cannot achieve. These deep architectures can automatically construct increasingly complex representations as data flows through successive network layers.
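To make the idea of successive layers concrete, the following sketch runs a forward pass through a tiny fully connected network and records the activation of every layer. The weights are hand-picked for illustration rather than learned, and the function names (`relu`, `dense`, `forward`) are assumptions made for this example.

```python
def relu(vector):
    """Element-wise rectified linear unit."""
    return [max(0.0, x) for x in vector]

def dense(inputs, weights, biases):
    """Affine layer: `weights` holds one row of coefficients per output unit."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]

def forward(inputs, layers):
    """Run the input through each (weights, biases) pair and keep every activation."""
    activations = []
    current = inputs
    for weights, biases in layers:
        current = relu(dense(current, weights, biases))
        activations.append(current)
    return activations

# Hypothetical two-layer network: 2 inputs -> 2 hidden units -> 1 output unit.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    ([[1.0, 1.0]], [-1.0]),
]
activations = forward([2.0, 1.0], layers)
# activations[0] is the hidden representation, activations[1] the final output.
```

Each entry in `activations` is a re-expression of the input in terms of the previous layer's outputs, which is the sense in which deeper layers build on simpler features.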

Feature Extraction and Selection

After training, the knowledge encoded in a network's activations can be harvested. Intermediate layer outputs often contain distilled representations that serve as powerful features for downstream tasks. Pruning these features afterward helps eliminate redundancy and reduce computational overhead, and it can improve generalization without sacrificing predictive accuracy.
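One simple way to prune redundant features is to drop any column that is almost perfectly correlated with a column already kept. The sketch below assumes the extracted features are available as a list of columns. The greedy strategy, the `prune_redundant` name, and the 0.95 threshold are illustrative choices, not something the text above prescribes.

```python
from math import sqrt

def pearson(a, b):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0
    return cov / sqrt(var_a * var_b)

def prune_redundant(columns, threshold=0.95):
    """Greedily keep a column only if it is not strongly correlated with any kept column."""
    kept = []
    for index, column in enumerate(columns):
        if all(abs(pearson(column, columns[j])) < threshold for j in kept):
            kept.append(index)
    return kept

# Hypothetical activations: three features observed over four samples.
features = [
    [1.0, 2.0, 3.0, 4.0],
    [2.0, 4.0, 6.0, 8.0],  # a rescaled copy of the first feature
    [1.0, 0.0, 1.0, 0.0],
]
kept_indices = prune_redundant(features)
```

Because the procedure is greedy, the result depends on column order: the first member of each correlated group survives. Constant columns are never flagged as redundant by this check and would need a separate variance filter.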

Feature curation is a critical phase in the machine learning pipeline. Selecting the most informative subset of learned features can improve both model performance and interpretability. A smaller feature set also reduces memory and compute requirements throughout the model lifecycle.

Evaluation Metrics and Model Tuning

Assessing feature engineering effectiveness requires appropriate performance indicators. Classification tasks typically rely on metrics such as precision, recall, and the precision-recall curve, while regression tasks use error measures such as mean squared error or mean absolute error. These quantitative assessments provide objective benchmarks for comparing different feature extraction approaches.
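As an illustration of these metrics, the sketch below computes points on a precision-recall curve from classifier scores and a mean squared error for a regression output. The function names and the sample labels and scores are hypothetical, and a score at or above the threshold is assumed to mean a positive prediction.

```python
def precision_recall(y_true, y_pred):
    """Precision and recall for binary labels (1 = positive, 0 = negative)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

def pr_curve(y_true, scores, thresholds):
    """One (threshold, precision, recall) point per threshold."""
    points = []
    for threshold in thresholds:
        y_pred = [1 if s >= threshold else 0 for s in scores]
        precision, recall = precision_recall(y_true, y_pred)
        points.append((threshold, precision, recall))
    return points

def mean_squared_error(y_true, y_pred):
    """Average squared difference between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

# Hypothetical classifier scores and regression outputs.
labels = [1, 0, 1, 0]
scores = [0.9, 0.6, 0.4, 0.2]
curve = pr_curve(labels, scores, thresholds=[0.5, 0.3])
mse = mean_squared_error([3.0, 5.0], [2.0, 7.0])
```

Lowering the threshold from 0.5 to 0.3 raises recall here because the second positive example is now captured. That trade-off between the two quantities is what the curve is meant to expose when comparing feature sets.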

Hyperparameter optimization remains essential for getting the most out of feature learning. Systematic experimentation with network configurations helps balance model complexity against generalization capability. Proper tuning guards against two common pitfalls: overfitting, where the model specializes too closely to the training data, and underfitting, where it fails to capture meaningful patterns.
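The tuning loop itself can be sketched independently of any particular network. The example below uses a deliberately tiny stand-in model, a one-parameter ridge regression through the origin, so that it stays runnable without a deep learning library. The model, the data, and the penalty grid are all assumptions chosen for illustration. The same pattern of fitting on training data and scoring on held-out data applies to network hyperparameters such as depth or learning rate.

```python
def fit_ridge_1d(xs, ys, penalty):
    """Closed-form weight for y ~ w * x with an L2 penalty on w."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + penalty)

def validation_mse(weight, xs, ys):
    """Mean squared error of the fitted weight on held-out data."""
    return sum((weight * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def grid_search(train, valid, penalties):
    """Try every penalty and return (best validation error, best penalty)."""
    results = []
    for penalty in penalties:
        weight = fit_ridge_1d(train[0], train[1], penalty)
        results.append((validation_mse(weight, valid[0], valid[1]), penalty))
    return min(results)

# Hypothetical data: the validation target has a slightly smaller slope than
# the training targets, so a small amount of regularization helps.
train = ([1.0, 2.0, 3.0], [2.0, 4.0, 6.0])
valid = ([4.0], [7.6])
best_mse, best_penalty = grid_search(train, valid, penalties=[0.0, 1.0, 10.0])
```

With these numbers, no penalty fits the training data exactly but overshoots on validation, and the largest penalty shrinks the weight too far. The middle value wins, which mirrors the overfitting and underfitting trade-off described above.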

Applications and Use Cases

Automated feature engineering finds applications across numerous technical domains. Natural language processing systems leverage these techniques to derive semantic representations from text corpora. Medical imaging platforms utilize learned features for diagnostic pattern recognition. The financial sector applies similar methods to detect subtle market indicators in complex datasets.

The growing range of applications demonstrates the versatility of deep learning approaches. From industrial quality control to climate modeling, automated feature extraction continues to enable new solutions to challenging analytical problems. This adaptability keeps the technique relevant across diverse scientific and commercial contexts.

Limitations and Future Directions

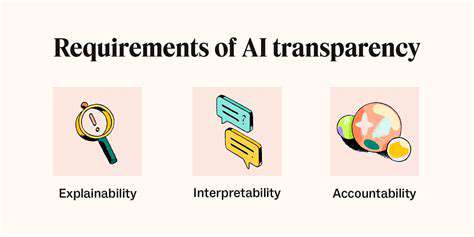

Despite significant advantages, current feature learning systems face several practical constraints. Computational resource requirements can prove prohibitive for some organizations, while model interpretability challenges persist. The black-box nature of many deep learning implementations sometimes hinders adoption in regulated industries.

Emerging research focuses on developing more transparent feature learning methodologies. Future advancements may yield hybrid approaches that combine deep learning's power with traditional methods' explainability. Efficiency improvements through novel architectures and training algorithms will likely address current computational limitations.
