Data Collection: Ensuring Representation and Avoiding Bias

Data collection is the first step in any AI project, and it is where many biases enter. A dataset should reflect the diversity of the population the system is intended to serve, so gathering a large volume of data is not enough; the samples must also be representative. This means examining each data source for inherent biases, such as historical skews in demographic or socioeconomic records. Left unaddressed, these biases can be perpetuated and even amplified by a model trained on the data. Planning the collection method and choosing sources deliberately reduces that risk before any modeling begins.

The methods used to collect data also shape the quality of the resulting dataset. A key risk is sampling bias, where certain groups are overrepresented, underrepresented, or excluded altogether. Stratified sampling, which divides the population into subgroups and samples from each one, can help make the dataset more representative. Combining different collection methods, from surveys to observational studies, can also broaden the range of perspectives captured.
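
As a rough illustration of stratified sampling, the sketch below draws the same fraction from every subgroup so that small groups are not lost by chance. The function name `stratified_sample`, the `group` field, and the toy population are assumptions made for this example, not part of any particular library.

```python
import random
from collections import defaultdict

def stratified_sample(records, key, fraction, seed=0):
    """Draw roughly the same fraction of records from each stratum defined by `key`."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for rec in records:
        strata[rec[key]].append(rec)

    sample = []
    for members in strata.values():
        # Keep at least one record per stratum so small groups are not dropped,
        # and never ask for more records than the stratum contains.
        k = min(len(members), max(1, round(len(members) * fraction)))
        sample.extend(rng.sample(members, k))
    return sample

# Hypothetical population: 90 records from group "A" and 10 from group "B".
population = [{"id": i, "group": "A" if i < 90 else "B"} for i in range(100)]
sample = stratified_sample(population, key="group", fraction=0.2)

counts = defaultdict(int)
for rec in sample:
    counts[rec["group"]] += 1
print(dict(counts))
```

With a simple random sample of 20 records, group "B" could end up with very few members or none at all; sampling per stratum keeps its share of the sample equal to its share of the population.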

Data Cleaning: Removing Errors and Identifying Outliers

Data cleaning is an often-overlooked but critical part of bias mitigation. Inaccurate or incomplete data can skew results and perpetuate harmful biases. A first step is identifying and correcting errors such as typos or inconsistent data entry. Cleaning also involves handling missing values, a common challenge in many datasets. Strategies such as imputation or removal need to be chosen carefully and justified: if values are missing more often for some groups than for others, dropping incomplete rows can shrink those groups' representation, while naive imputation can distort their distributions.
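
One way to make an imputation decision easier to justify is to check whether missingness differs by group before filling anything in, and to record which values were imputed. The following is a minimal sketch under those assumptions; the helper names, the `income` column, and the sample rows are hypothetical, and median imputation stands in for whatever strategy suits the data.

```python
from statistics import median

def missing_rate_by_group(rows, column, group_key):
    """Return the share of missing (None) values in `column` for each group."""
    totals, missing = {}, {}
    for r in rows:
        g = r[group_key]
        totals[g] = totals.get(g, 0) + 1
        missing[g] = missing.get(g, 0) + (1 if r[column] is None else 0)
    return {g: missing[g] / totals[g] for g in totals}

def impute_median(rows, column):
    """Fill missing values with the median of observed values and flag imputed rows."""
    observed = [r[column] for r in rows if r[column] is not None]
    fill = median(observed)
    cleaned = []
    for r in rows:
        was_missing = r[column] is None
        new_row = dict(r)  # copy so the original rows stay untouched
        new_row[column] = fill if was_missing else r[column]
        new_row[column + "_was_missing"] = was_missing
        cleaned.append(new_row)
    return cleaned

# Hypothetical rows: the value is missing only for group "B".
rows = [
    {"group": "A", "income": 40.0},
    {"group": "A", "income": 50.0},
    {"group": "B", "income": None},
    {"group": "B", "income": 90.0},
]
rates = missing_rate_by_group(rows, "income", "group")
cleaned = impute_median(rows, "income")
```

Here the missing rate is much higher for group "B", which is a signal that both row removal and a single global fill value deserve extra scrutiny.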

Identifying and addressing outliers is another important part of data cleaning. Outliers can strongly influence statistical models and skew results. Some outliers are data entry errors, but others are genuine observations that carry valuable information, for example values from a small or underrepresented group. Analyzing each outlier in context before deciding whether to remove, adjust, or keep it is essential for maintaining data integrity and reducing bias in the final model.
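
A common starting point for finding outliers is the interquartile range (IQR) rule, sketched below. The function flags values for review rather than deleting them, in line with the point that outliers may be legitimate. The function name, the multiplier `k=1.5` (a conventional default), and the sample readings are assumptions for illustration.

```python
from statistics import quantiles

def iqr_outliers(values, k=1.5):
    """Return values lying more than k * IQR outside the first or third quartile."""
    q1, _, q3 = quantiles(values, n=4)  # three cut points: Q1, median, Q3
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]

# Hypothetical readings with one suspicious value.
readings = [10, 12, 11, 13, 12, 11, 10, 95]
flagged = iqr_outliers(readings)
print(flagged)
```

Each flagged value still needs a human decision: a typo can be corrected, while a genuine extreme value may need to stay in the dataset.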

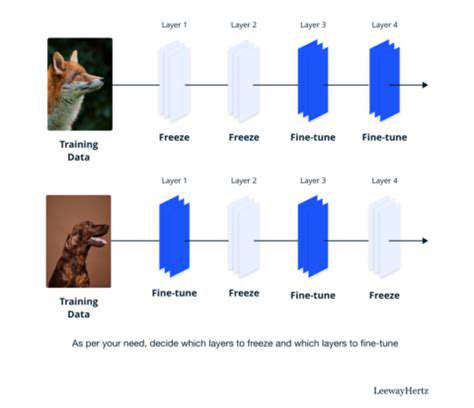

Feature Engineering: Transforming Data for Effective Modeling

Feature engineering transforms raw data into features better suited to machine learning models. These choices affect both the model's performance and its risk of bias. Feature selection, choosing the most relevant and informative variables, is a central part of this. Excluding protected attributes such as gender or race is not sufficient on its own, because other features can act as proxies for them; checking candidate features for correlation with protected attributes helps identify such proxies. Careful consideration should also be given to how features interact, and how these interactions might contribute to bias.
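
The correlation check described above can be sketched as follows. This is a simplified example that uses Pearson correlation against a binary-encoded protected attribute; the feature names, the data, and the `0.5` threshold are hypothetical, and a linear correlation will not catch non-linear or combined proxies.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient; returns 0.0 if either input is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return 0.0
    return cov / (sx * sy)

def proxy_candidates(features, protected, threshold=0.5):
    """Return features whose absolute correlation with `protected` meets the threshold."""
    flagged = {}
    for name, values in features.items():
        r = pearson(values, protected)
        if abs(r) >= threshold:
            flagged[name] = r
    return flagged

# Hypothetical data: protected attribute encoded as 0/1 for two groups.
protected = [0, 0, 0, 0, 1, 1, 1, 1]
features = {
    "neighborhood_income": [30, 32, 31, 29, 60, 62, 61, 59],  # tracks group membership
    "years_experience": [1, 5, 3, 7, 1, 5, 3, 7],             # same distribution in both groups
}
flagged = proxy_candidates(features, protected)
print(sorted(flagged))
```

A flagged feature is not automatically one to drop; it is a prompt to ask whether the feature is needed and how it affects outcomes across groups.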

Additionally, creating new features from existing ones can improve the model's ability to detect patterns and relationships. This can involve combining existing features or applying transformations, such as logarithmic or polynomial transformations. These transformations help capture non-linear relationships between variables that a simple linear model would otherwise fit poorly, and a poor fit may affect some groups more than others.
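
The two transformations mentioned here can be sketched in a few lines. The function names and sample values are illustrative assumptions; `math.log1p` is used so that zero values are handled without error.

```python
import math

def log_transform(values):
    """Compress a right-skewed, non-negative feature using log(1 + x)."""
    return [math.log1p(v) for v in values]

def polynomial_features(x, degree=2):
    """Expand a single value into its powers: [x, x^2, ..., x^degree]."""
    return [x ** d for d in range(1, degree + 1)]

# Hypothetical skewed feature: one value is 1000 times larger than the smallest.
incomes = [0, 20_000, 40_000, 20_000_000]
logged = log_transform(incomes)

# Powers of a single feature value let a linear model fit a curve.
powers = polynomial_features(3, degree=3)
print(powers)
```

After the log transform, the largest value no longer dwarfs the others by orders of magnitude, which makes the feature easier for many models to use.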

Data Preprocessing Techniques: Standardization and Normalization

Standardization and normalization are common preprocessing techniques that can significantly affect model performance. Standardization rescales a feature to have a mean of zero and a standard deviation of one. Normalization, often called min-max scaling, rescales a feature to a fixed range, typically between 0 and 1. Both help prevent features with larger numeric ranges from dominating the model, and skewing its results, simply because of their scale.
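
Both rescaling methods follow directly from their definitions: standardization computes $(x - \mu) / \sigma$ and min-max normalization computes $(x - \min) / (\max - \min)$. The sketch below implements them with the standard library; the function names and sample values are assumptions for illustration, and the population standard deviation is used.

```python
from statistics import mean, pstdev

def standardize(values):
    """Rescale to mean 0 and standard deviation 1 (z-scores)."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]  # constant feature: nothing to scale
    return [(v - mu) / sigma for v in values]

def min_max(values):
    """Rescale linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant feature: nothing to scale
    return [(v - lo) / (hi - lo) for v in values]

# Hypothetical feature values.
ages = [10.0, 20.0, 30.0]
z_scores = standardize(ages)
scaled = min_max(ages)
print(scaled)
```

In practice the mean, standard deviation, minimum, and maximum are usually computed on the training set only and then reused for the test set, so that information from the test data does not leak into preprocessing.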

These techniques matter most when features have vastly different scales, for example age in years alongside income in dollars. Scale-sensitive models, such as k-nearest neighbors or models trained with gradient descent, would otherwise weight the larger-valued feature more heavily. The appropriate technique depends on the characteristics of the data and the model used.

Evaluating Bias in Preprocessed Data: Measurement and Mitigation

Evaluating the preprocessed data for residual bias is critical to ensuring fairness in AI systems. This evaluation involves examining how different groups are distributed within the data to identify disparities. Comparing the representation of each group in the training and testing sets can reveal imbalances. Metrics such as demographic parity, which compares the rate of favorable outcomes across groups, and disparate impact, which takes the ratio of those rates, help quantify the extent of bias and show whether the preprocessing steps have been effective in mitigating it.
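
As a rough sketch of how these two metrics can be computed, the example below derives per-group selection rates from binary outcomes and then reports the demographic parity difference and the disparate impact ratio. The function names and the data are hypothetical; the 0.8 cutoff mentioned afterwards is the commonly cited "four-fifths" rule of thumb, not a universal standard.

```python
def selection_rates(outcomes, groups):
    """Share of favorable outcomes (1) within each group."""
    totals, positives = {}, {}
    for y, g in zip(outcomes, groups):
        totals[g] = totals.get(g, 0) + 1
        positives[g] = positives.get(g, 0) + (1 if y == 1 else 0)
    return {g: positives[g] / totals[g] for g in totals}

def demographic_parity_difference(rates):
    """Gap between the highest and lowest group selection rates (0 means parity)."""
    return max(rates.values()) - min(rates.values())

def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return float("nan")  # no favorable outcomes in any group
    return min(rates.values()) / highest

# Hypothetical labels: 6 of 10 favorable in group "A", 3 of 10 in group "B".
groups = ["A"] * 10 + ["B"] * 10
outcomes = [1] * 6 + [0] * 4 + [1] * 3 + [0] * 7

rates = selection_rates(outcomes, groups)
dp_diff = demographic_parity_difference(rates)
di_ratio = disparate_impact_ratio(rates)
print(rates, round(dp_diff, 2), round(di_ratio, 2))
```

In this toy data the ratio falls well below 0.8, which would typically prompt a closer look at how the labels were produced and whether earlier preprocessing steps need revisiting.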

Addressing any biases found in this evaluation requires iterative refinement of the preprocessing steps and potentially adjustments to the model itself, so that the model performs equitably across different groups. This ongoing evaluation is fundamental to building responsible AI systems.