Consider how human learning works: we don't start from zero with every new subject. Similarly, an AI model can reuse what it learned on one task to master a related task more efficiently. This knowledge transfer can substantially reduce both computational cost and development time, making advanced AI applications more accessible.

Key Concepts in Transfer Learning

At the foundation of this approach lie two critical concepts. The source domain represents the original problem space where the model first gained its expertise. In contrast, the target domain denotes the new challenge where we want to apply this learned knowledge. The relationship between these domains determines the potential for successful knowledge transfer.

Not all knowledge transfers equally well. When domains share fundamental characteristics - like similar data structures or underlying patterns - the transfer tends to be more effective. However, significant mismatches can lead to poor performance, highlighting the need for careful domain analysis before implementation.
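One rough way to make this kind of domain analysis concrete is to compare summary statistics of the same features in both domains. The sketch below is only an illustration under simple assumptions: the function name `feature_shift`, the toy data, and the choice of a standardized mean difference as the score are all hypothetical, and real projects often use richer measures.

```python
from statistics import mean, stdev

def feature_shift(source, target):
    """Per-feature standardized mean difference between two samples.

    source, target: lists of feature rows with the same number of columns.
    Returns one non-negative score per feature; larger means more shift.
    """
    scores = []
    for src_col, tgt_col in zip(zip(*source), zip(*target)):
        # Average the spread of both samples; fall back to 1.0 if it is zero.
        pooled = (stdev(src_col) + stdev(tgt_col)) / 2 or 1.0
        scores.append(abs(mean(src_col) - mean(tgt_col)) / pooled)
    return scores

# Toy data (made up): feature 0 is distributed alike, feature 1 is shifted.
source = [[1.0, 10.0], [2.0, 11.0], [3.0, 12.0], [4.0, 13.0]]
target = [[1.5, 20.0], [2.5, 21.0], [3.5, 22.0], [4.5, 23.0]]

shift = feature_shift(source, target)
```

A small score suggests the feature looks similar in both domains, while a large score flags a mismatch worth investigating before transfer. The score is a heuristic, not a guarantee of how well the transfer will work.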

Pre-trained Models and their Importance

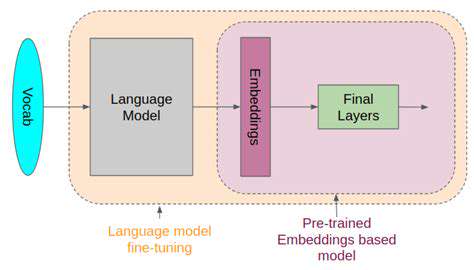

The real workhorses of transfer learning are pre-trained models. These models have already been trained on large amounts of data for general tasks, and in the process they have extracted patterns that remain useful elsewhere. For instance, models trained on millions of images learn general visual features, such as edges, textures, and shapes, that prove valuable across many computer vision applications.

These models serve as powerful starting points for specialized applications. Instead of requiring massive new datasets, developers can leverage these pre-built foundations and adapt them to specific needs. This approach has democratized AI development, making advanced capabilities available to organizations without massive data resources.

Fine-tuning and Adaptation

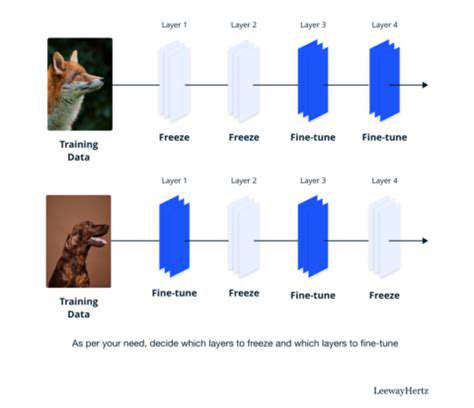

The adaptation process typically involves fine-tuning, where we carefully adjust the pre-trained model's parameters. Strategic decisions must be made about which layers to freeze (keeping their learned parameters unchanged) and which to retrain with new data. A common pattern is to freeze the early layers, which tend to capture general features, and retrain the later layers, which are more task-specific. This balancing act preserves valuable existing knowledge while allowing the model to adapt to its new task.

Effective fine-tuning requires understanding both the model's architecture and the target problem's requirements. The goal is to find the sweet spot between preserving useful pre-learned features and developing task-specific capabilities.
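The freeze-and-retrain idea can be sketched in a few lines of plain Python. This is a toy model under stated assumptions, not how a deep learning framework does it: `frozen_w` stands in for pre-trained weights, `fine_tune_head` is a hypothetical helper, and the data is invented. Only the head weights receive gradient updates.

```python
def features(x, frozen_w):
    # Frozen "pre-trained" layer: a fixed linear map followed by ReLU.
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in frozen_w]

def fine_tune_head(data, frozen_w, head_w, lr=0.05, epochs=200):
    """Train only the head weights with SGD on squared error."""
    head_w = list(head_w)
    for _ in range(epochs):
        for x, y in data:
            h = features(x, frozen_w)
            pred = sum(w * hi for w, hi in zip(head_w, h))
            err = pred - y
            # Gradient step on the head only; frozen_w is never written to.
            head_w = [w - lr * err * hi for w, hi in zip(head_w, h)]
    return head_w

frozen_w = [[1.0, 0.0], [0.0, 1.0]]  # hypothetical pre-trained weights
frozen_before = [row[:] for row in frozen_w]

# Toy target task (made up): y = 2*x0 + 3*x1 on non-negative inputs.
data = [([1.0, 0.0], 2.0), ([0.0, 1.0], 3.0), ([1.0, 1.0], 5.0)]
head = fine_tune_head(data, frozen_w, head_w=[0.0, 0.0])
```

After training, `head` should sit close to `[2.0, 3.0]` while `frozen_w` is unchanged. In a real framework the same effect is usually achieved by marking the frozen layers as non-trainable rather than by writing the update loop by hand.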

Applications of Transfer Learning

The practical applications of this technology span numerous industries. In healthcare, it can support medical image analysis when labeled patient data is limited. For language technologies, it powers more nuanced translation systems and sentiment analyzers. Computer vision systems benefit through improved object recognition across diverse environments.

What makes transfer learning truly remarkable is its versatility. From improving agricultural yield predictions to enhancing financial fraud detection, the potential applications continue to grow as the technology matures.

Challenges and Considerations

While powerful, the approach isn't without challenges. Negative transfer occurs when source domain knowledge actually hinders target task performance, so that the transferred model does worse than one trained on the target data alone. This risk underscores the importance of thoroughly evaluating domain compatibility before attempting knowledge transfer.
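A simple guard against negative transfer is to compare the transferred model with a baseline trained only on target data, using the same held-out target examples. The sketch below assumes classification accuracy as the metric; the names `is_negative_transfer`, `baseline`, and `transferred`, and the threshold rules standing in for real models, are hypothetical.

```python
def accuracy(predict, examples):
    """Fraction of (x, label) examples that predict() labels correctly."""
    return sum(predict(x) == y for x, y in examples) / len(examples)

def is_negative_transfer(transferred, baseline, validation, margin=0.0):
    """True if the transferred model trails the baseline by more than margin."""
    gap = accuracy(baseline, validation) - accuracy(transferred, validation)
    return gap > margin

# Hypothetical stand-ins for real models: plain threshold rules.
def baseline(x):
    return x > 0.5   # trained on target data only

def transferred(x):
    return x > 2.0   # carries a threshold from a mismatched source domain

# Held-out target examples (made up).
validation = [(0.2, False), (0.7, True), (1.5, True), (2.5, True), (0.1, False)]
flag = is_negative_transfer(transferred, baseline, validation)
```

Here the transferred rule misclassifies two of the five examples while the baseline gets all of them right, so the check flags negative transfer. The `margin` parameter lets small, noisy differences be ignored.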

Other considerations include determining the optimal amount of fine-tuning and managing computational resources during adaptation. Successful implementation requires both technical expertise and thoughtful planning to navigate these potential pitfalls.
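One common heuristic for deciding how much fine-tuning is enough is early stopping: track the loss on held-out target data after each epoch and stop once it no longer improves. The sketch below is a minimal illustration; the function `early_stopping_epoch`, the `patience` default, and the loss values are assumptions made for the example.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the index of the epoch whose weights should be kept.

    Scans per-epoch validation losses and stops once the loss has failed
    to improve for `patience` consecutive epochs.
    """
    best_epoch, best_loss, waited = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss, waited = epoch, loss, 0
        else:
            waited += 1
            if waited >= patience:
                break
    return best_epoch

# Hypothetical validation losses recorded after each fine-tuning epoch.
val_losses = [0.90, 0.62, 0.51, 0.48, 0.50, 0.55, 0.47]
keep = early_stopping_epoch(val_losses)
```

With `patience=2`, the scan stops after two epochs without improvement and never reaches the final value in the list. A larger `patience` trades extra compute for a chance to find a later improvement.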

Future Trends and Developments

As the field progresses, researchers are exploring increasingly sophisticated transfer mechanisms. Emerging techniques aim to handle more complex domain relationships and automate parts of the adaptation process. There's growing interest in multi-source transfer learning, where knowledge from several domains combines to tackle new challenges.
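As a very simplified picture of multi-source transfer, the outputs of several source models can be blended with weights that reflect how useful each one is for the target task. This is an illustrative sketch only, not a description of any specific published method; `combine_sources`, the two toy models, and the weights are all hypothetical.

```python
def combine_sources(models, weights):
    """Build a predictor that averages source-model outputs by weight."""
    total = sum(weights)

    def predict(x):
        return sum(w * m(x) for m, w in zip(models, weights)) / total

    return predict

# Hypothetical source models, each a scoring function from a different domain.
def model_a(x):
    return 2.0 * x

def model_b(x):
    return x + 1.0

# Assumption: weights come from each model's score on target validation data.
ensemble = combine_sources([model_a, model_b], weights=[3.0, 1.0])
score = ensemble(2.0)
```

The source that fits the target better gets more say in the final prediction. Research systems typically combine learned representations rather than final outputs, so this should be read as an analogy.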

The next generation of transfer learning may fundamentally change how we develop AI systems, potentially enabling continuous learning across increasingly diverse applications. This evolution promises to make AI development both more efficient and more accessible in the coming years.