Accuracy and Reliability of AI-Generated Medical Content

Evaluating AI's Accuracy in Medical Content

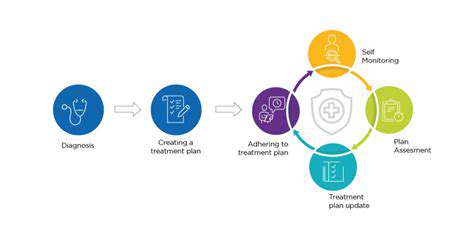

Assessing the accuracy of AI-generated medical content starts with rigorous validation: the output must align with established medical knowledge and best practices. In practice, this means comparing the AI's output against recognized medical databases, expert consensus, and clinical trial evidence. These verification steps help prevent the dissemination of harmful or misleading information, which could have serious consequences for patient care.
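As a rough illustration of comparing AI output against a reference source, the sketch below checks structured claims extracted from generated text against a lookup table. Everything here is an assumption made for illustration: the `Claim` type, the `validate_claims` helper, the drug names, and the dose values are hypothetical placeholders, not clinical data or a real database API.

```python
from dataclasses import dataclass

# Hypothetical reference table. In practice this would come from a curated
# medical database; the names and numbers are placeholders, not clinical data.
REFERENCE_MAX_DAILY_DOSE_MG = {
    "drug_a": 100.0,
    "drug_b": 40.0,
}


@dataclass
class Claim:
    """A single checkable statement extracted from AI-generated text."""
    drug: str
    stated_max_daily_dose_mg: float


def validate_claims(claims, reference, tolerance=0.0):
    """Return (claim, status) pairs with status 'ok', 'mismatch', or 'unverified'."""
    results = []
    for claim in claims:
        expected = reference.get(claim.drug)
        if expected is None:
            # No reference entry exists, so the claim cannot be confirmed automatically.
            status = "unverified"
        elif abs(claim.stated_max_daily_dose_mg - expected) <= tolerance:
            status = "ok"
        else:
            status = "mismatch"
        results.append((claim, status))
    return results


claims = [Claim("drug_a", 100.0), Claim("drug_b", 400.0), Claim("drug_c", 10.0)]
statuses = [status for _, status in validate_claims(claims, REFERENCE_MAX_DAILY_DOSE_MG)]
print(statuses)
```

A check like this only covers claims that can be reduced to a lookup. Anything marked `mismatch` or `unverified` would still need review against expert consensus or trial evidence.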

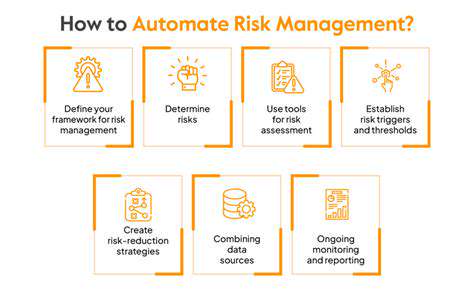

Furthermore, AI models need ongoing monitoring and refinement to keep pace with new medical research. This iterative process helps keep the AI's output up to date and reliable, reflecting the current understanding of medical conditions and treatments.

Sources and Data Quality for AI Models

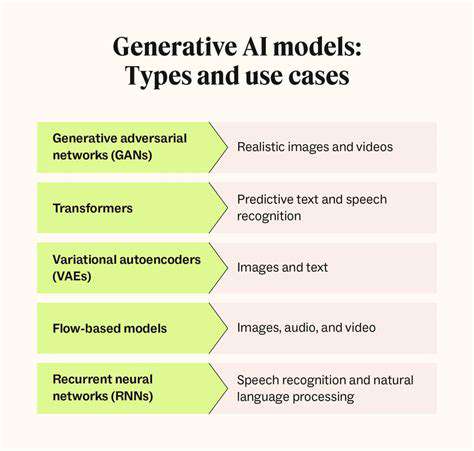

The reliability of AI-generated medical content depends heavily on the quality and comprehensiveness of the data used to train the AI models. Biased or incomplete datasets can lead to inaccurate or misleading conclusions. AI systems must be trained on diverse and representative datasets to minimize bias and help the model generalize across patient populations.

Careful curation and validation of these data sources are essential. This includes scrutinizing the origin of the data, verifying its accuracy, and identifying any potential limitations or biases that might affect the model's output.
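One simple way to look for limitations of this kind is to compare how often each patient group appears in the training data with its share of a reference population. The sketch below assumes records are plain dictionaries and that reference shares are known. The `representation_gaps` helper, the `age_band` field, the shares, and the 0.8 threshold are all hypothetical choices for illustration.

```python
from collections import Counter


def representation_gaps(records, group_key, reference_shares, min_ratio=0.8):
    """Flag groups whose share of the dataset falls below
    min_ratio times their share of the reference population."""
    counts = Counter(record[group_key] for record in records)
    total = sum(counts.values())
    gaps = {}
    for group, expected_share in reference_shares.items():
        observed_share = counts.get(group, 0) / total if total else 0.0
        if observed_share < min_ratio * expected_share:
            gaps[group] = (observed_share, expected_share)
    return gaps


# Synthetic example data: 10 records split across three age bands.
records = (
    [{"age_band": "18-39"}] * 6
    + [{"age_band": "40-64"}] * 3
    + [{"age_band": "65+"}] * 1
)
# Hypothetical reference population shares.
reference_shares = {"18-39": 0.40, "40-64": 0.35, "65+": 0.25}

gaps = representation_gaps(records, "age_band", reference_shares)
print(gaps)
```

With this synthetic data, only the `65+` band is flagged, since it makes up 10% of the records against a 25% reference share. A check like this covers representation only; it says nothing about whether the records themselves are accurate.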

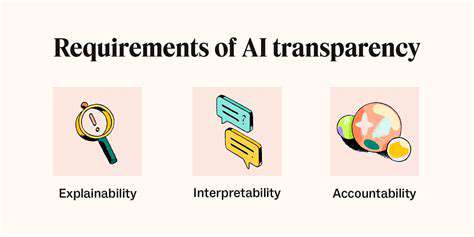

Transparency and Explainability of AI Outputs

Understanding how AI arrives at its conclusions is critical for medical applications. The more transparent the AI's decision-making process, the easier it is to identify potential errors or biases. Explainable AI (XAI) techniques are crucial for building trust and facilitating critical evaluation of the results.

Patients and clinicians need to understand the rationale behind the AI's recommendations. This transparency allows for a more informed discussion of proposed treatment plans and ultimately improves the quality of patient care.

Bias Mitigation in AI Medical Content Generation

AI models, like any system that learns from data, can inherit biases present in their training data. These biases can surface in the AI's output, potentially leading to disparities in care for different patient populations. Potential biases therefore need to be considered throughout the development process.

Techniques for identifying and mitigating bias in training data and model architecture need to be developed and implemented. This proactive approach is crucial for ensuring equitable and unbiased medical recommendations generated by AI.
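As one possible starting point for identifying bias, a model's accuracy can be computed separately for each patient group and the spread between groups inspected. The sketch below is a minimal illustration, not a complete fairness audit. The helper names, group labels, and data are hypothetical, and accuracy is only one of several metrics that could be compared.

```python
from collections import defaultdict


def accuracy_by_group(examples):
    """Compute accuracy per group from (group, prediction, label) triples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, prediction, label in examples:
        total[group] += 1
        correct[group] += int(prediction == label)
    return {group: correct[group] / total[group] for group in total}


def max_accuracy_gap(per_group):
    """Difference between the best and worst per-group accuracy."""
    return max(per_group.values()) - min(per_group.values())


# Synthetic evaluation results: (group, model prediction, true label).
examples = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 1),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 0), ("group_b", 0, 1),
]

per_group = accuracy_by_group(examples)
gap = max_accuracy_gap(per_group)
print(per_group, gap)
```

A large gap does not by itself show where the bias comes from, but it indicates which groups deserve closer review of both the training data and the model's behavior.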

Human Oversight and Validation in AI Systems

While AI can significantly augment medical content creation, human oversight and validation remain indispensable. Experts in the field should review and critically assess the AI's output to identify and correct any inaccuracies or potential errors.

Human oversight helps ensure that the AI's recommendations align with established medical guidelines, ethical considerations, and clinical best practices. This step is what safeguards the safety and reliability of the information the AI presents.

Future Directions and Ethical Considerations

The future of AI in medical content generation holds immense potential, but its ethical implications need careful consideration. Responsible development and deployment are key to minimizing potential risks and maximizing benefits for patients and healthcare providers.

Continuous monitoring and evaluation of the AI systems' performance, along with transparent communication about their limitations, are essential for building public trust and fostering responsible innovation in this rapidly evolving field.