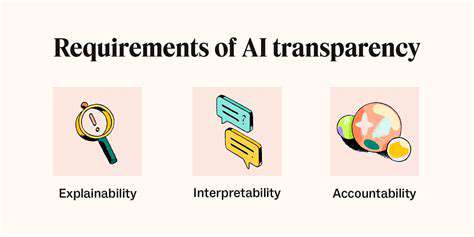

Ensuring Transparency and Explainability in AI Decisions

Establishing Clear Communication Channels

Transparency depends on communication infrastructure that stakeholders can actually use: comprehensive documentation, routine updates, and accessible contact points. Publishing information is only part of the work; genuine transparency also means actively engaging with the community's concerns and questions.

Structured dialogue platforms serve as critical tools for maintaining open communication channels. These forums should facilitate meaningful exchange rather than one-way information transfer, ensuring all perspectives receive proper consideration.

Defining and Implementing Standards

Documenting data protocols systematically keeps methods consistent across operations. These standards should be accessible and comprehensible to diverse stakeholders so that processes and outcomes can be verified independently. Regular audits keep the standards relevant and effective over time by identifying potential biases and areas that need refinement.
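One way to make a recurring bias audit concrete is to compare how often each group receives a favorable decision. The sketch below is illustrative only: the article does not name a metric, so the selection-rate gap, the `(group, decision)` record shape, and the `max_gap` threshold of 0.1 are all assumptions chosen for the example.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favorable decisions per group.

    `records` is an iterable of (group, decision) pairs, where `decision`
    is True for a favorable outcome. This record shape is hypothetical.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in records:
        totals[group] += 1
        if decision:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(records):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(records)
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())


def audit(records, max_gap=0.1):
    """Flag the system for review when the gap exceeds `max_gap`.

    The 0.1 default is an assumed threshold, not a recommended standard.
    """
    records = list(records)
    gap = parity_gap(records)
    return {
        "rates": selection_rates(records),
        "gap": gap,
        "needs_review": gap > max_gap,
    }
```

A single number like this cannot establish that a system is fair. It only gives auditors a repeatable signal that a closer look is warranted.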

Actively Seeking Independent Verification

External validation through third-party review significantly enhances system credibility. Objective assessment by impartial experts strengthens public confidence in algorithmic processes while identifying potential improvement areas. This practice demonstrates commitment to accountability beyond minimum requirements.

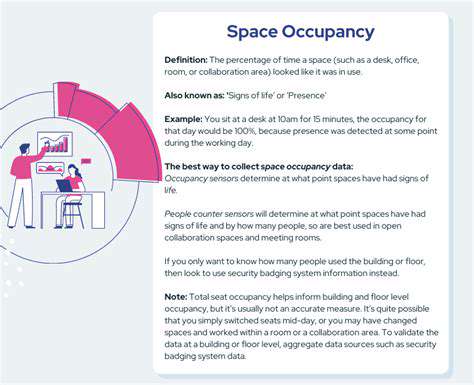

Promoting Data Accessibility and Usability

Effective transparency requires presenting data in multiple formats suited to different audiences. Clear visualizations and explanatory materials help non-technical stakeholders understand complex information, while detailed datasets remain available for expert analysis. Interpretation resources help every user group read the data correctly.

Addressing Concerns and Feedback Promptly

Responsive feedback mechanisms demonstrate genuine commitment to transparency. Documented resolution processes and timely responses to inquiries build lasting trust in organizational practices. Willingness to reconsider assumptions based on valid criticism strengthens institutional integrity over time.

Promoting Human Oversight and Control in AI-Driven Systems

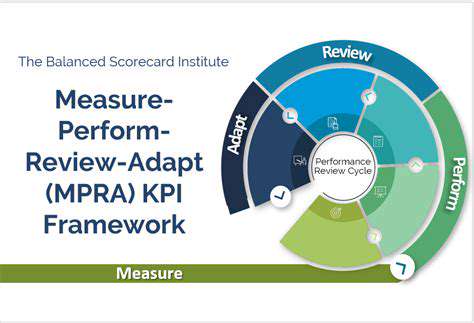

Ensuring Accountability in AI Systems

Human supervision remains essential for maintaining ethical AI implementation and preventing unintended consequences. This requires defined responsibility structures, human decision points in critical processes, and remediation pathways for system errors. Recognizing algorithmic limitations helps prevent over-reliance on automated systems.

Defining Clear Lines of Responsibility

Explicitly assigning roles and responsibilities creates the accountability structure a system needs. Every system outcome, positive or negative, should have an identifiable responsible party, and that responsibility should extend beyond technical development to the ethical considerations that arise throughout the AI lifecycle.

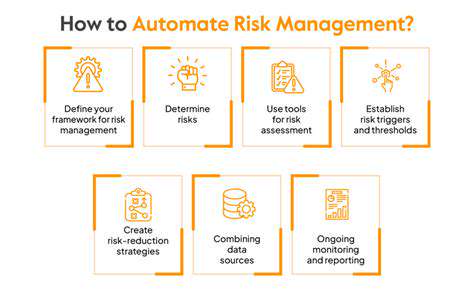

Establishing Human Intervention Points

Building human judgment into critical decision paths prevents those decisions from being fully automated. These intervention points keep human values embedded in system operations, and they need clear protocols for when and how human oversight occurs.
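A protocol for "when" human oversight occurs can be as simple as a routing rule. The following is a minimal sketch under stated assumptions: the `Decision` fields, the `route` function, and the 0.9 confidence threshold are hypothetical names and values introduced for illustration, not part of any particular framework.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    outcome: str        # what the system proposes, e.g. "approve"
    confidence: float   # model confidence in [0, 1] (assumed to be available)
    high_stakes: bool   # whether the decision is classed as critical


def route(decision, min_confidence=0.9):
    """Return "human_review" or "auto" for a proposed decision.

    High-stakes decisions always go to a person. Other decisions are
    escalated only when confidence falls below the assumed threshold.
    """
    if decision.high_stakes or decision.confidence < min_confidence:
        return "human_review"
    return "auto"
```

For example, `route(Decision("approve", 0.95, high_stakes=True))` returns `"human_review"` regardless of confidence, which reflects the idea that critical decisions should not be completed without human judgment.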

Developing Ethical Frameworks and Guidelines

Comprehensive ethical standards must address emerging challenges in AI deployment. Regular framework updates ensure alignment with evolving societal expectations and technological capabilities, while accessible documentation promotes understanding across diverse stakeholder groups.

Promoting Transparency in AI Systems

Explainable AI processes enable meaningful human oversight. Comprehensive documentation of data sources, algorithms, and decision logic allows proper evaluation of system outputs, ensuring alignment with established ethical principles and organizational values.
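Documentation of data sources, algorithms, and decision logic is easier to evaluate when each decision leaves a structured record. The sketch below shows one possible shape for such a record. Every field name here is an assumption made for illustration, and a real system would choose fields to match its own review and retention requirements.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


def _utc_now():
    """Current UTC time as an ISO 8601 string."""
    return datetime.now(timezone.utc).isoformat()


@dataclass
class DecisionRecord:
    decision_id: str      # hypothetical identifier for the decision
    model_version: str    # which algorithm or model version produced it
    data_sources: list    # names of the datasets the model relied on
    inputs: dict          # the inputs considered for this decision
    output: str           # what the system decided
    rationale: str        # plain-language summary of the decision logic
    recorded_at: str = field(default_factory=_utc_now)


def to_json(record):
    """Serialize a record so reviewers and auditors can read it later."""
    return json.dumps(asdict(record), sort_keys=True)
```

Keeping the rationale in plain language alongside the machine-readable fields lets the same record serve both technical reviewers and non-technical stakeholders.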

Fostering Public Dialogue and Engagement

Inclusive community participation shapes responsible AI development. Diverse perspectives inform system design so that it better serves entire populations, and educational initiatives promote broader understanding of AI's societal implications.

Facilitating Mechanisms for Redress

Accessible grievance procedures demonstrate commitment to accountability. Effective resolution systems build public confidence in AI applications by providing fair and timely responses to legitimate concerns about system impacts.
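"Timely" is easier to uphold when it is measurable. As a rough sketch, a grievance log could flag cases that have gone unresolved past a response window. The `Grievance` fields and the 14-day window below are assumptions for the example; the article does not specify a deadline.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Grievance:
    grievance_id: str
    received_at: datetime
    resolved_at: Optional[datetime] = None  # None while the case is open


def is_overdue(grievance, now, response_window=timedelta(days=14)):
    """True when an open grievance has waited longer than the window."""
    if grievance.resolved_at is not None:
        return False
    return now - grievance.received_at > response_window


def overdue_ids(grievances, now, response_window=timedelta(days=14)):
    """Return the IDs of all open grievances past the response window."""
    return [
        g.grievance_id
        for g in grievances
        if is_overdue(g, now, response_window)
    ]
```

A report built from `overdue_ids` could feed the documented resolution process, so that slow responses are visible to whoever is accountable for them.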