Introduction to Transfer Learning

What is Transfer Learning?

Transfer learning is a machine learning technique that reuses knowledge gained from solving one problem to improve a model's performance on a different but related problem. Instead of training a model from scratch, it starts from a pre-trained model that has already learned useful features from a large dataset and adapts it to the task at hand. This reduces the amount of data required for training and often yields faster training and better accuracy, particularly when labeled data for the target task is limited. That matters especially in computer vision, where acquiring large labeled datasets is expensive and time-consuming.

Imagine you want to train a model to identify different types of birds. Rather than collecting millions of labeled bird images and training from scratch, you can start from a pre-trained model that has already learned to recognize everyday objects such as cars, cats, and dogs from a large image dataset. That model already encodes general image features, such as edges, textures, and shapes, which can be adapted to birds by fine-tuning some of its layers. This reduces the amount of labeled bird data needed and lets you reach good accuracy in less training time.

Transfer Learning in Computer Vision

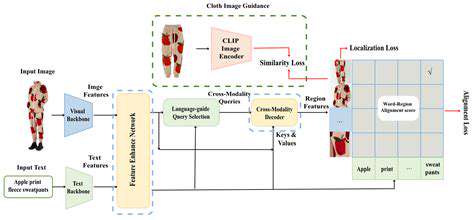

Computer vision applications, particularly object detection, often require very large training datasets that are costly to acquire and label. Transfer learning mitigates this by starting from models pre-trained on large datasets such as ImageNet. These models, typically convolutional neural networks (CNNs), have already learned general image features, so adapting them to a specific object detection task is often more efficient and more accurate than starting from scratch. This lets researchers and developers focus on the nuances of the detection task rather than on learning basic image feature extraction.

The transfer learning approach is widely adopted in object detection tasks due to its ability to leverage the learned knowledge from general image recognition models. This allows developers to achieve high accuracy with substantially less training data, which is particularly advantageous in scenarios where collecting labeled data is difficult or expensive. By fine-tuning the pre-trained model on a smaller, specific dataset of object detection images, the model learns to identify the desired objects with greater accuracy and precision.

Applications of Transfer Learning in Object Detection

Transfer learning has found widespread use in various computer vision applications, especially in object detection tasks. One significant area of application is in medical imaging, where it enables the development of accurate and efficient models for detecting diseases like cancer from medical scans. By leveraging pre-trained models on large image datasets, researchers can create models that can rapidly and accurately analyze medical images, potentially leading to earlier disease diagnosis and improved patient outcomes. This is crucial because medical image analysis often relies on limited datasets of labeled images, making transfer learning a valuable tool.

Another application is in autonomous driving. Transfer learning enables the development of robust object detection models that can accurately identify pedestrians, vehicles, and other objects on the road. These models can be trained on large datasets of images and videos collected from various environments, allowing them to adapt and perform reliably in diverse situations. This significantly aids in the development of safe and reliable autonomous driving systems, which heavily rely on accurate object detection in real-time.

Challenges and Future Directions

Data Augmentation Strategies

Data augmentation is crucial for improving the robustness and generalizability of transfer learning models in computer vision. Limited training data can hinder performance, especially when fine-tuning pre-trained models. Techniques such as random cropping, flipping, and color jittering can significantly expand the dataset, effectively mitigating the impact of limited data. This process artificially creates variations in the training data, exposing the model to a broader range of object appearances and orientations, which leads to improved accuracy and generalization capabilities. Furthermore, advanced techniques like generative adversarial networks (GANs) can be employed to generate synthetic data, further boosting the model's ability to recognize objects in diverse scenarios.

Careful consideration of the specific characteristics of the target dataset is paramount. For instance, if the dataset predominantly features objects in specific lighting conditions, augmenting the data to include images with different lighting conditions can significantly enhance the model's adaptability.
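For object detection, geometric augmentations have to transform the bounding boxes along with the pixels. The following is a minimal, framework-free sketch of a random horizontal flip combined with a brightness change. It assumes a grayscale image stored as a list of pixel rows and boxes given as `(x_min, y_min, x_max, y_max)` with `x_max` exclusive; the function names and default ranges are illustrative choices, not part of any library.

```python
import random


def hflip(image, boxes):
    """Mirror an image left-to-right and remap its bounding boxes."""
    width = len(image[0])
    flipped = [row[::-1] for row in image]
    # A box spanning columns [x_min, x_max) spans [width - x_max, width - x_min) after the flip.
    new_boxes = [(width - x_max, y_min, width - x_min, y_max)
                 for (x_min, y_min, x_max, y_max) in boxes]
    return flipped, new_boxes


def adjust_brightness(image, factor):
    """Scale every pixel by `factor` and clamp the result to 0..255."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in image]


def augment(image, boxes, rng, flip_prob=0.5, brightness_range=(0.6, 1.4)):
    """Apply a random flip and a random brightness change to one training sample."""
    if rng.random() < flip_prob:
        image, boxes = hflip(image, boxes)
    factor = rng.uniform(*brightness_range)
    return adjust_brightness(image, factor), boxes


# Usage: a tiny 2x4 image with one box covering the two left-most columns of the top row.
image = [[10, 20, 30, 40],
         [50, 60, 70, 80]]
boxes = [(0, 0, 2, 1)]
aug_image, aug_boxes = augment(image, boxes, random.Random(0))
```

Brightness changes leave the boxes untouched, while the flip must update them; forgetting that second step is a common source of silently corrupted detection labels.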

Fine-tuning Strategies

Fine-tuning a pre-trained model is a critical step in transfer learning, and choosing which layers to fine-tune is essential for good performance. The lower layers of a network typically learn generic features such as edges and textures that carry over well between tasks, while the higher layers encode features specific to the source task. Freezing the lower layers and fine-tuning only the higher layers therefore preserves the knowledge learned from the source domain while adapting the model to the nuances of the target domain. This strategy, often called layer freezing or partial fine-tuning, trains faster than training a model from scratch and usually performs better when target data is limited.
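The idea of keeping pre-trained layers fixed while training only a new task-specific layer can be illustrated with a small, framework-free toy. In this sketch the "backbone" is a single linear layer with ReLU whose weights are made up for illustration and stand in for pre-trained parameters, and the "head" is a logistic-regression layer trained on a handful of hypothetical target examples. In practice one would do the equivalent in a deep learning framework by excluding the frozen layers from the optimizer.

```python
import math

# Hypothetical "pre-trained" backbone weights (assumed for illustration only).
BACKBONE_WEIGHTS = [[1.0, -1.0],
                    [0.5, 0.5]]


def backbone(x, weights):
    """Frozen feature extractor: one linear layer followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in weights]


def predict(x, backbone_w, head_w, head_b):
    """Return the predicted probability and the backbone features for input x."""
    feats = backbone(x, backbone_w)
    z = sum(w * f for w, f in zip(head_w, feats)) + head_b
    return 1.0 / (1.0 + math.exp(-z)), feats


def fine_tune_head(data, backbone_w, epochs=200, lr=0.5):
    """Train only the head with stochastic gradient descent on the log loss."""
    head_w, head_b = [0.0] * len(backbone_w), 0.0
    for _ in range(epochs):
        for x, y in data:
            p, feats = predict(x, backbone_w, head_w, head_b)
            err = p - y  # gradient of the log loss with respect to the logit
            head_w = [w - lr * err * f for w, f in zip(head_w, feats)]
            head_b -= lr * err
            # backbone_w is deliberately never updated: it is "frozen".
    return head_w, head_b


# Usage: a tiny made-up target task (label 1 when the first input exceeds the second).
target_data = [([2.0, 0.0], 1), ([3.0, 1.0], 1), ([0.0, 2.0], 0), ([1.0, 3.0], 0)]
head_w, head_b = fine_tune_head(target_data, BACKBONE_WEIGHTS)
```

Only `head_w` and `head_b` change during training, so the number of trainable parameters, and with it the amount of target data needed, stays small.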

Experimentation with different learning rates and optimization algorithms is essential for achieving good results. A smaller learning rate during fine-tuning limits how far each update moves the weights away from their pre-trained values, which helps preserve the knowledge learned from the source domain and reduces the risk of overfitting to a small target dataset.
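The effect of the learning rate on how far fine-tuning moves a model from its starting point can be seen in a one-parameter toy. The sketch below assumes a model `y = w * x`, a made-up pre-trained weight, and a tiny made-up target dataset; it runs the same number of gradient descent steps with two learning rates and compares how far the weight drifts.

```python
def fine_tune(w_pretrained, data, lr, steps):
    """Plain gradient descent on mean squared error for the model y = w * x."""
    w = w_pretrained
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


w_pretrained = 2.0                       # hypothetical pre-trained weight
target_data = [(1.0, 3.1), (2.0, 5.8)]   # small made-up target dataset

drift = {}
for lr in (0.01, 0.1):
    w = fine_tune(w_pretrained, target_data, lr, steps=5)
    drift[lr] = abs(w - w_pretrained)    # distance moved from the pre-trained value

print(drift)
```

With the same number of steps, the smaller learning rate leaves the weight closer to its pre-trained value. Real networks are far from this simple, so the appropriate rate still has to be found experimentally.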

Computational Resources

Although transfer learning is usually cheaper than training from scratch, it can still require substantial computational resources. Fine-tuning a large pre-trained model, particularly when many layers are unfrozen, can be computationally intensive and demand high-performance GPUs with large amounts of memory. Efficient implementation and optimization strategies are crucial for reducing this burden and shortening training times.

Cloud computing platforms and specialized hardware accelerators are becoming increasingly important for addressing the computational demands of transfer learning projects in computer vision. Utilizing such resources can help researchers and practitioners overcome the limitations imposed by local computing infrastructure.

Transfer Learning for Specialized Tasks

Transfer learning is not limited to general object detection; it can be effectively applied to specialized computer vision tasks. For instance, in medical imaging, pre-trained models can be fine-tuned for tasks such as identifying specific pathologies or assessing tissue damage. Similarly, in autonomous driving, transfer learning can be utilized to enhance the accuracy of object detection and recognition in various driving scenarios.

Furthermore, transfer learning can also be used for tasks like semantic segmentation, where the goal is to assign labels to individual pixels within an image, thereby enabling a more granular understanding of the scene.

Evaluating Model Performance

Evaluation metrics are critical for assessing the performance of transfer learning models in computer vision. Precision, recall, and F1-score are commonly used for object detection, where a predicted box typically counts as correct only if its intersection over union (IoU) with a ground-truth box exceeds a chosen threshold; mean average precision (mAP) summarizes precision across recall levels and classes. Together these metrics quantify the model's ability to both identify and locate objects within images. Choosing metrics that match the application, for example favoring recall where missed detections are costly, enables a thorough analysis of the model's strengths and weaknesses.
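As a rough illustration of how these metrics are computed for detection, the sketch below scores the predictions for a single image and a single class. It assumes boxes in `(x_min, y_min, x_max, y_max)` form, predictions given as `(confidence, box)` pairs, a greedy matching in order of confidence, and an IoU threshold of 0.5; the function names are illustrative, and established benchmarks define their own, more detailed protocols.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def detection_scores(predictions, ground_truth, iou_threshold=0.5):
    """Return (precision, recall, f1) for one image and one object class."""
    unmatched = list(ground_truth)
    true_pos = 0
    # Match the most confident predictions first; each ground-truth box is used at most once.
    for _, box in sorted(predictions, key=lambda p: p[0], reverse=True):
        best = max(unmatched, key=lambda gt: iou(box, gt), default=None)
        if best is not None and iou(box, best) >= iou_threshold:
            unmatched.remove(best)
            true_pos += 1
    false_pos = len(predictions) - true_pos
    false_neg = len(unmatched)
    precision = true_pos / (true_pos + false_pos) if true_pos + false_pos else 0.0
    recall = true_pos / (true_pos + false_neg) if true_pos + false_neg else 0.0
    f1 = (2 * precision * recall / (precision + recall)) if precision + recall else 0.0
    return precision, recall, f1


# Usage: two ground-truth objects and three predictions (one exact, one duplicate, one stray).
ground_truth = [(0, 0, 10, 10), (20, 20, 30, 30)]
predictions = [(0.9, (0, 0, 10, 10)), (0.8, (1, 1, 11, 11)), (0.3, (50, 50, 60, 60))]
precision, recall, f1 = detection_scores(predictions, ground_truth)
```

Here the duplicate detection of the first object counts as a false positive because its ground-truth box has already been matched, which is why precision and recall for detection cannot be computed from class labels alone.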

Ethical Considerations

Transfer learning models, particularly those trained on large datasets, can inherit biases present in the source data. These biases can manifest in the model's predictions, potentially leading to unfair or discriminatory outcomes. Careful consideration of the potential biases embedded in the source data is crucial to mitigate these risks. Thorough analysis and mitigation strategies are essential to ensure fair and equitable model performance in real-world applications, particularly in areas like facial recognition or medical diagnosis. Addressing these ethical considerations is critical for responsible development and deployment of transfer learning models.

Generalization and Robustness

Generalization and robustness are paramount for the practical deployment of transfer learning models in computer vision. A model's ability to generalize to unseen data is crucial for ensuring reliable performance in diverse and real-world scenarios. Regularization techniques and careful selection of hyperparameters can help to improve the generalization capability of the model. Robustness to variations in lighting, occlusion, and viewpoint is equally vital. A model that consistently performs well across different variations of data will likely be more reliable and suitable for practical applications.