Introduction to AI in Neuro-oncology

What is AI?

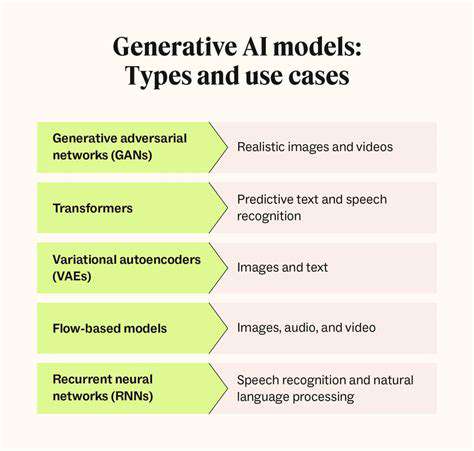

Artificial intelligence (AI) refers to technologies that allow machines to perform tasks that typically require human intelligence, such as learning, reasoning, and decision-making. In neuro-oncology, AI applications range from diagnostic support to personalized treatment strategies.

AI systems can process and analyze data far faster than humans and, for some narrowly defined tasks, with comparable or greater accuracy. By detecting subtle patterns in medical images and patient records that might escape human observation, AI can contribute to more accurate diagnoses and better treatment outcomes.

AI in Neuro-oncology Diagnosis

Machine learning models trained on large collections of brain scans (MRI and CT) can identify and classify various brain tumor types. This can enable faster, more accurate diagnoses and help clinicians choose appropriate treatments.

AI-powered image analysis can highlight subtle tissue abnormalities that are difficult to detect by eye. These tools may make earlier tumor detection more achievable, particularly for small lesions or those in anatomically difficult locations.

AI in Treatment Planning

AI can support treatment customization by analyzing comprehensive patient profiles, including tumor characteristics, genetic markers (in gliomas, for example, IDH mutation and MGMT promoter methylation status), and medical history. This analysis can help oncologists select the most appropriate interventions.

Models that predict treatment response can inform clinical decisions and potentially improve patient outcomes. Personalized therapy may reduce adverse effects while improving efficacy.

AI in Drug Discovery

AI can accelerate neuro-oncology drug development by sifting through massive biological datasets to identify potential therapeutic compounds more efficiently, shortening a traditionally lengthy discovery process.

AI in Predicting Patient Outcomes

Prognostic models analyze patient information to forecast disease progression and treatment response. These forecasts can help clinicians adjust therapy throughout a patient's course of care.
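Forecasts of disease progression are usually framed as survival analysis. As a minimal sketch, the code below implements the Kaplan-Meier estimator, a standard method for estimating the probability of remaining event-free over time when some patients are still event-free at their last follow-up (censored). The function name and the six-patient follow-up data are hypothetical. The estimator only summarizes a cohort; prognostic models such as Cox proportional hazards regression or machine learning survival models go further by relating the forecast to individual patient features.

```python
from collections import Counter


def kaplan_meier(times, events):
    """Kaplan-Meier survival estimate.

    times:  follow-up time per patient (e.g. months)
    events: 1 if the event (e.g. death or progression) was observed, 0 if censored
    Returns a list of (time, estimated survival probability) at each event time.
    """
    if len(times) != len(events):
        raise ValueError("times and events must have the same length")
    events_at = Counter(t for t, e in zip(times, events) if e == 1)
    leaving_at = Counter(times)  # events plus censorings at each time
    at_risk = len(times)
    survival = 1.0
    curve = []
    for t in sorted(leaving_at):
        d = events_at[t]  # Counter returns 0 for times with no events
        if d > 0:
            # Multiply by the conditional probability of surviving past time t
            survival *= 1.0 - d / at_risk
            curve.append((t, survival))
        # Patients censored at t count as at risk at t, then leave the risk set
        at_risk -= leaving_at[t]
    return curve


# Hypothetical follow-up (months) for six patients; 0 = still event-free at last visit
for t, s in kaplan_meier([6, 7, 10, 15, 19, 25], [1, 0, 1, 1, 0, 1]):
    print(f"month {t}: S = {s:.3f}")
# month 6: S = 0.833
# month 10: S = 0.625
# month 15: S = 0.417
# month 25: S = 0.000
```

The censored patients (months 7 and 19) never lower the curve themselves, but they shrink the number at risk, so each later event removes a larger share of the remaining probability.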

Treatment strategies tailored using predictive analytics may improve both survival and quality of life, which would be a significant advance in precision medicine for patients with brain tumors.

Ethical Considerations

Implementing AI in neuro-oncology practice introduces complex ethical questions regarding data security, potential algorithmic discrimination, and clinician accountability. Responsible development and deployment are essential to prevent harm or inequity.

Rigorous validation of AI diagnostic tools remains critical to avoid incorrect diagnoses or suboptimal treatment recommendations. Maintaining patient safety demands thorough testing and continuous monitoring of these systems.
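Validation results for a diagnostic tool are commonly reported as sensitivity (the share of true tumors the tool detects) and specificity (the share of tumor-free cases it correctly clears), ideally with confidence intervals, because small test sets give imprecise estimates. The sketch below computes both from binary labels and adds a Wilson score interval; the function names and the ten-case test set are hypothetical, and the default z = 1.96 assumes an approximate 95% interval.

```python
import math


def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a binomial proportion (z=1.96 gives ~95%)."""
    if n == 0:
        return (float("nan"), float("nan"))
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half = (z / denom) * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2))
    return (center - half, center + half)


def diagnostic_metrics(y_true, y_pred):
    """Sensitivity and specificity for binary labels (1 = tumor present)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    return {
        "sensitivity": tp / (tp + fn) if tp + fn else float("nan"),
        "sensitivity_95ci": wilson_interval(tp, tp + fn),
        "specificity": tn / (tn + fp) if tn + fp else float("nan"),
        "specificity_95ci": wilson_interval(tn, tn + fp),
    }


# Hypothetical test set: 4 tumor cases, 6 tumor-free cases
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0]
metrics = diagnostic_metrics(y_true, y_pred)
print(metrics["sensitivity"], metrics["sensitivity_95ci"])
```

Here sensitivity is 0.75 (3 of 4 tumors detected), but its interval spans roughly 0.30 to 0.95, which shows why a handful of cases cannot establish that a tool is safe to use.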

Future Directions

Ongoing research aims to improve diagnostic accuracy, optimize treatment protocols, and accelerate the development of new therapies. Combining AI with complementary technologies such as robotic surgery and nanomedicine may lead to further advances.

Continued progress in AI-driven personalized medicine will likely yield more targeted and effective neuro-oncology treatments. These advances should translate into better clinical results and improved patient well-being.

Ethical Considerations and Future Directions

Transparency and Accountability

Clear documentation of data sources and analytical methods is the foundation of an ethical AI system. Stakeholders should be able to understand how a model was trained, what data it uses as input, and how it reaches its decisions. Even complex algorithms should provide interpretable explanations of their outputs to support oversight.

Accountability frameworks must set out procedures for addressing system errors, biases, and adverse outcomes. Establishing who is responsible for AI-informed decisions supports ethical implementation and provides recourse when problems occur. Responsibilities should be defined for both the developers who build a system and the organizations that deploy it.

Bias Mitigation and Fairness

Historical biases in training data can lead to discriminatory AI outputs. Mitigating them requires proactive measures during data collection, model development, and post-deployment monitoring. Continuous evaluation, for example comparing a model's performance across patient subgroups, helps keep performance equitable.
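One simple form of continuous evaluation is to compare a model's sensitivity across patient subgroups, such as imaging sites, scanner vendors, or demographic groups, and flag any group that lags behind. The sketch below assumes binary labels and a hypothetical group label per case; the function names and the 0.1 gap threshold are illustrative choices, and equal sensitivity across groups is only one of several fairness criteria (the one often called equal opportunity).

```python
from collections import defaultdict


def sensitivity_by_group(y_true, y_pred, groups):
    """Sensitivity (true positive rate) per subgroup; labels are 1 = tumor, 0 = no tumor."""
    positives = defaultdict(int)
    detected = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            positives[group] += 1
            if pred == 1:
                detected[group] += 1
    # Groups with no positive cases are omitted: their sensitivity is undefined
    return {g: detected[g] / positives[g] for g in positives}


def lagging_groups(rates, max_gap=0.1):
    """Groups whose rate trails the best-performing group by more than max_gap."""
    best = max(rates.values())
    return sorted(g for g, r in rates.items() if best - r > max_gap)


# Hypothetical monitoring batch from two imaging sites
y_true = [1, 1, 1, 1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 1, 0, 0, 0, 1]
groups = ["site_A", "site_A", "site_A", "site_B", "site_B", "site_B", "site_A", "site_B"]

rates = sensitivity_by_group(y_true, y_pred, groups)
print(rates)                  # {'site_A': 1.0, 'site_B': 0.3333333333333333}
print(lagging_groups(rates))  # ['site_B']
```

Run on each new batch of cases after deployment, a flagged group is a prompt for investigation (for example, of a changed scanner protocol) rather than proof of bias, especially when a group contributes only a few positive cases.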

Bias reduction extends beyond technical solutions to encompass broader societal considerations. Multidisciplinary collaboration involving technical experts, ethicists, and community representatives helps create fairer, more inclusive AI applications.

Data Privacy and Security

Safeguarding sensitive health information is paramount in AI-driven medicine. Robust cybersecurity measures help prevent unauthorized data access and protect patient confidentiality. Privacy protection is both a legal requirement and an ethical imperative in medical AI.

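Compliance with data protection regulations, such as HIPAA in the United States or the GDPR in the European Union, must be built into every stage of the AI development lifecycle. This includes obtaining appropriate consent, collecting only the data that is needed (data minimization), and using it only for the purposes patients agreed to.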

Explainability and Interpretability

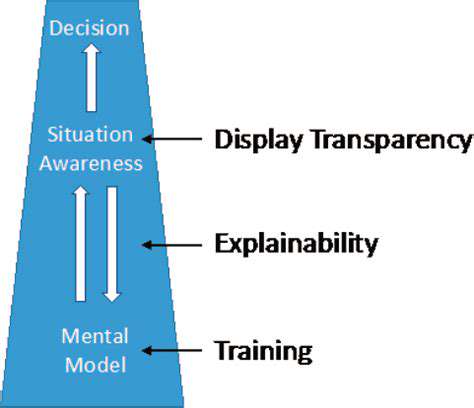

Complex AI models, such as deep neural networks, are often opaque, which makes their decision processes difficult to understand. Improving interpretability helps identify potential flaws and builds user confidence in AI-assisted decisions. This transparency is particularly important in high-stakes medical applications.

Ongoing research into explainable AI methods remains crucial for responsible implementation. Clinicians and patients alike benefit from understanding the reasoning behind AI-generated recommendations.
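As a minimal illustration of one post-hoc explanation technique, the sketch below implements feature ablation: each input is replaced by a reference value (here the cohort mean) and the average change in the model's output is recorded. The risk score, its weights, and the two features are hypothetical and do not represent a clinical model. Imaging models are more often explained with saliency maps or attribution methods such as SHAP, but the underlying question, how much each input drives a prediction, is the same.

```python
def ablation_importance(predict, rows):
    """Mean absolute change in predict() when each feature is set to its cohort mean.

    predict: function mapping a dict of features to a numeric score
    rows:    list of feature dicts, one per patient, all with the same keys
    """
    features = list(rows[0])
    means = {f: sum(r[f] for r in rows) / len(rows) for f in features}
    importance = {}
    for f in features:
        total = 0.0
        for row in rows:
            ablated = {**row, f: means[f]}  # copy of the row with one feature replaced
            total += abs(predict(row) - predict(ablated))
        importance[f] = total / len(rows)
    return importance


def toy_risk_score(x):
    """Hypothetical linear risk score, for illustration only."""
    return 0.5 * x["age_decades"] + 1.5 * x["contrast_enhancing"]


patients = [
    {"age_decades": 4, "contrast_enhancing": 1},
    {"age_decades": 6, "contrast_enhancing": 0},
    {"age_decades": 5, "contrast_enhancing": 1},
]
print(ablation_importance(toy_risk_score, patients))
```

For a linear score like this one, each feature's importance is just its weight times the average distance of that feature from its mean, so contrast enhancement (about 0.67) outranks age (about 0.33). The procedure becomes more informative for nonlinear models, where importance cannot be read directly off the weights.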

Societal Impact and Responsibility

Widespread AI adoption has significant social implications across many domains. Considering these effects proactively helps maximize benefits while minimizing potential harms, and continuous dialogue among stakeholders is needed to navigate these complex issues.

Coordinated efforts among technologists, policymakers, and the public promote responsible AI development. Potential impacts on the workforce, economic consequences, and ethical dilemmas all require careful examination before a system is deployed.

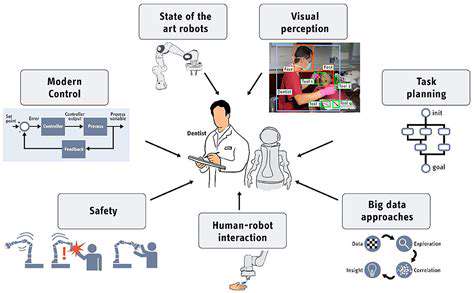

Human-Centered Design and Collaboration

AI systems should complement and enhance human capabilities rather than replace human judgment. Human-centered design, which considers user experience and the broader social context throughout development, tends to produce more ethical and beneficial technologies.

Cross-disciplinary cooperation helps ensure that AI systems align with societal values and medical ethics. Ongoing engagement with diverse stakeholders promotes responsible innovation in neuro-oncology AI applications.