Protecting Patient Data in AI-Powered Healthcare

Data privacy and security are paramount in the context of AI-powered precision medicine. Robust protocols are essential to safeguard sensitive patient information throughout the entire process, from data collection and storage to analysis and application. This includes encrypting data both in transit and at rest, implementing access controls to limit who can view specific data, and adhering to stringent regulations like HIPAA and GDPR. Failure to prioritize these measures can lead to significant breaches, compromising patient trust and potentially causing irreparable harm.

The Role of Encryption in Data Protection

Data encryption plays a crucial role in safeguarding patient information. By converting sensitive data into ciphertext that is unreadable without the corresponding key, encryption makes it impractical for unauthorized individuals to interpret the information. Symmetric and asymmetric encryption differ mainly in key management and performance rather than in a simple ranking of security: symmetric encryption uses a single shared key and suits large volumes of stored data, while asymmetric encryption uses a public/private key pair and suits key exchange and digital signatures. The choice of method should be based on the specific data being protected and how it is used. A layered approach that combines the two, such as using an asymmetric key to protect a symmetric data key, can further strengthen protection.

Ensuring Data Integrity and Confidentiality

Maintaining the integrity and confidentiality of patient data is critical. Data integrity refers to the accuracy and reliability of the information, ensuring that it is complete and free from errors or tampering. Confidentiality, on the other hand, ensures that only authorized individuals have access to the data. Data validation helps preserve integrity by catching errors when data is entered or transferred, while access logging and regular audits help detect unauthorized access or modifications so they can be investigated and prevented from recurring.
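One common way to make tampering detectable is to attach a keyed hash (HMAC) to each record and recheck it on read. The following is a minimal sketch using Python's standard `hmac`, `hashlib`, `json`, and `secrets` modules. The record fields, the in-memory key, and the function names `sign_record` and `verify_record` are hypothetical and chosen only for illustration; a real system would load the key from a managed secret store.

```python
import hashlib
import hmac
import json
import secrets


def sign_record(record: dict, key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag over a canonical JSON encoding of the record."""
    # Sorting keys gives the same bytes for the same content regardless of dict order.
    payload = json.dumps(record, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_record(record: dict, tag: str, key: bytes) -> bool:
    """Recompute the tag and compare it to the stored one in constant time."""
    return hmac.compare_digest(sign_record(record, key), tag)


# Hypothetical key and record, for illustration only.
key = secrets.token_bytes(32)
record = {"patient_id": "P-001", "hba1c": 6.9}
tag = sign_record(record, key)

# A copy of the record with one value altered after signing.
tampered = dict(record, hba1c=5.2)

print(verify_record(record, tag, key))    # check the untouched record
print(verify_record(tampered, tag, key))  # check the modified record
```

An HMAC only detects modification; it does not hide the data, so it complements encryption rather than replacing it.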

Compliance with Privacy Regulations

Adherence to relevant data privacy regulations, such as HIPAA in the US and GDPR in the European Union, is mandatory for organizations that fall within their scope when handling patient data in the context of AI. These regulations outline specific requirements for data collection, storage, processing, and sharing. Non-compliance can result in significant penalties and reputational damage. Organizations must carefully assess their data handling practices and ensure they align with these regulations to maintain legal compliance and patient trust.

Data Minimization and Purpose Limitation

Implementing data minimization and purpose limitation principles is essential to ensure that only the necessary data is collected and used for a specific, predefined purpose. Collecting excessive or unnecessary data increases the risk of breaches and compromises patient privacy. Restricting data use to its intended purpose minimizes the potential for misuse and enhances patient control over their information.
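In code, these two principles can be approximated by tying each declared purpose to an explicit allowlist of fields and dropping everything else before the data reaches a model or report. The sketch below is illustrative only: the purposes, field names, and the `minimize` helper are hypothetical, and a real allowlist would come from the organization's data governance policy.

```python
# Hypothetical mapping from a declared purpose to the fields it actually needs.
ALLOWED_FIELDS = {
    "dosage_model": {"age", "weight_kg", "genotype"},
    "billing": {"patient_id", "insurance_id"},
}


def minimize(record: dict, purpose: str) -> dict:
    """Return only the fields approved for the given purpose."""
    if purpose not in ALLOWED_FIELDS:
        # Purpose limitation: refuse any use that has not been declared.
        raise ValueError(f"no approved field list for purpose: {purpose!r}")
    allowed = ALLOWED_FIELDS[purpose]
    return {name: value for name, value in record.items() if name in allowed}


# Hypothetical full record, for illustration only.
full_record = {
    "patient_id": "P-001",
    "insurance_id": "INS-42",
    "age": 57,
    "weight_kg": 81.5,
    "genotype": "CYP2D6*4",
    "home_address": "redacted",
}

model_input = minimize(full_record, "dosage_model")
print(sorted(model_input))
```

Rejecting undeclared purposes outright, rather than returning an empty record, makes an unapproved use fail loudly instead of silently.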

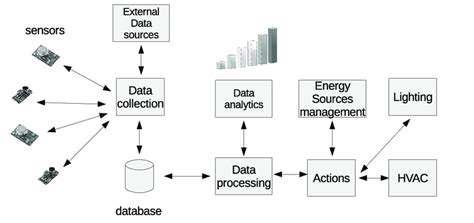

Secure Data Storage and Management Systems

Robust and secure data storage and management systems are crucial to safeguard sensitive patient information. These systems should employ security measures such as multi-factor authentication, regular security audits, and physical access controls. Timely updates and maintenance are essential to keep the systems up to date with current security protocols and to close known vulnerabilities. Routine backups and disaster recovery plans are equally important to protect against data loss due to unforeseen circumstances.

Ethical Considerations in AI-Driven Healthcare

Ethical considerations surrounding data privacy and security in AI-driven precision medicine are multifaceted. These considerations encompass issues such as informed consent, patient autonomy, data bias, and potential discrimination. Developing ethical guidelines and frameworks for data use in AI applications is crucial to ensure responsible development and implementation of these technologies, safeguarding patient rights and promoting equitable access to care. Maintaining transparency in data usage and decision-making processes is also critical.

Bias in AI Algorithms: Achieving Fairness and Equity

Understanding the Root Causes of Bias

AI algorithms, trained on vast datasets, can inadvertently inherit and amplify existing societal biases. These biases often stem from the data itself, reflecting historical inequalities and prejudices present within the world. For example, if a dataset predominantly portrays men in leadership roles, an AI trained on that data might perpetuate gender stereotypes in its decision-making. This isn't malicious intent, but rather a reflection of the flawed input. Addressing this requires careful consideration of the data sources and the potential for bias they carry. Furthermore, the algorithms themselves may contain hidden biases due to their design and implementation, further exacerbating the problem.

Another crucial factor is the lack of diversity in the development teams themselves. If the individuals building and training AI models come from a homogeneous background, their perspectives and experiences may not adequately capture the nuances of different populations. This can lead to algorithms making unfair or inaccurate predictions for underrepresented groups. Recognizing and actively addressing these systemic issues is paramount to building more just and equitable AI systems. This includes encouraging diverse representation in the field and promoting a culture of inclusivity.

Mitigating Bias in AI Algorithm Design

Developing strategies to mitigate bias is a critical step in ensuring fairness and accuracy in AI applications. One approach involves the use of diverse and representative datasets, ensuring that the training data reflects the true diversity of the population. This requires an active effort to gather data from various sources and perspectives, including underrepresented communities. This process should also include ongoing validation and monitoring to ensure that the algorithms continue to perform fairly across all groups.

Techniques like fairness-aware machine learning algorithms can be employed to directly address potential biases. These algorithms aim to explicitly consider fairness metrics during the training process, minimizing the risk of discrimination. Furthermore, rigorous testing and evaluation protocols are necessary to identify and quantify potential biases in algorithms. Continuous monitoring of AI systems in real-world deployments is equally important to detect and adapt to emerging biases over time. This iterative process of testing, evaluation, and adjustment is essential for maintaining the integrity and fairness of AI systems.
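Quantifying bias usually starts with comparing simple metrics across groups. The sketch below computes two commonly used ones for a binary classifier: the selection rate per group (whose spread is often called the demographic parity gap) and the true positive rate per group. The labels, predictions, group names, and the helper names `group_rates` and `demographic_parity_gap` are hypothetical; which metric is appropriate depends on the clinical setting.

```python
from collections import defaultdict


def group_rates(y_true, y_pred, groups):
    """Per-group selection rate and true positive rate for binary 0/1 labels."""
    counts = defaultdict(lambda: {"n": 0, "pred_pos": 0, "pos": 0, "true_pos": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        c["n"] += 1
        c["pred_pos"] += int(pred == 1)
        c["pos"] += int(truth == 1)
        c["true_pos"] += int(truth == 1 and pred == 1)

    rates = {}
    for group, c in counts.items():
        rates[group] = {
            "selection_rate": c["pred_pos"] / c["n"],
            # TPR is undefined when a group has no positive cases.
            "tpr": c["true_pos"] / c["pos"] if c["pos"] else None,
        }
    return rates


def demographic_parity_gap(rates):
    """Largest difference in selection rate between any two groups."""
    selection = [r["selection_rate"] for r in rates.values()]
    return max(selection) - min(selection)


# Hypothetical evaluation data, for illustration only.
y_true = [1, 0, 1, 1, 1, 1, 0, 0]
y_pred = [1, 0, 1, 1, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = group_rates(y_true, y_pred, groups)
gap = demographic_parity_gap(rates)
print(rates)
print(gap)
```

Running the same computation on fresh production data at regular intervals is one simple way to implement the continuous monitoring described above.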

Ensuring transparency in the design and implementation of AI algorithms is another crucial aspect. Understanding how an algorithm arrives at its decisions is essential for identifying potential biases and developing countermeasures. This transparency fosters trust and accountability, allowing stakeholders to scrutinize the decision-making process and ensure equitable outcomes.


Accountability and Responsibility: Navigating the Complexities of AI Errors

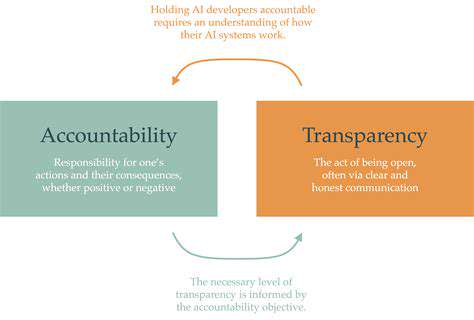

Accountability in Action

Accountability isn't just a buzzword; it's a crucial element in any successful endeavor. It's about taking ownership of your actions and their consequences, both positive and negative. This involves recognizing your role in a situation, understanding your responsibilities, and proactively working towards solutions, even when faced with challenges. It fosters trust and encourages a collaborative environment.

In professional settings, accountability translates into meeting deadlines, fulfilling commitments, and taking responsibility for mistakes. This proactive approach to error management demonstrates a commitment to continuous improvement and a willingness to learn from setbacks.

Defining Responsibility

Responsibility, often intertwined with accountability, goes beyond simply acknowledging actions. It's about understanding the wider implications of your choices and the impact they have on others. A sense of responsibility often involves anticipating potential problems and taking preventative measures. It's about considering the "what ifs" and planning accordingly to mitigate risks.

Taking responsibility also involves being reliable and consistent in your actions. It's about demonstrating integrity and upholding ethical standards in all your interactions.

The Importance of Transparency

Transparency is fundamentally linked to both accountability and responsibility. When individuals and organizations operate with transparency, they are open about their actions, decisions, and processes. This fosters trust and allows stakeholders to understand the motivations behind choices. Open communication and honesty are vital components of a transparent system.

Transparency also enables effective feedback loops, allowing for continuous improvement and adaptation to changing circumstances. It empowers individuals to understand their role within the larger picture and encourages a culture of shared responsibility.

Building a Culture of Accountability

Creating a culture of accountability requires a multi-faceted approach. Leaders play a critical role in setting the tone and expectations for responsible behavior. Clear communication of roles, responsibilities, and expectations is paramount. This includes establishing clear guidelines and procedures to ensure everyone understands their part in the overall process.

Consequences and Learning

Accountability and responsibility are not just about avoiding mistakes; they are also about learning from them. Consequences, whether positive or negative, are crucial learning opportunities. Understanding the impact of actions allows individuals and organizations to adjust strategies, improve processes, and ultimately become more effective. Constructive feedback and a willingness to adapt are essential elements of this learning cycle.

By acknowledging both successes and failures, and focusing on continuous improvement, accountability and responsibility drive progress and growth.