Defining Ethical Considerations in AI-Powered Learning Environments

Defining Ethical Frameworks

Responsible innovation in technology requires explicit ethical reasoning, not just good intentions. Philosophical approaches such as utilitarianism, with its focus on collective good, and deontology, with its rule-based morality, provide lenses for assessing our choices. Ethical decision-making is complex because these frameworks often disagree in practice, so practitioners must weigh context carefully before acting.

Educators implementing AI tools face particularly nuanced challenges. The classroom environment introduces multiple stakeholders - students, parents, and institutions - each with competing priorities. A teacher might struggle when an AI recommendation system suggests personalized learning paths that conflict with standardized curriculum requirements, creating tension between individualization and uniformity.

Addressing Potential Conflicts of Interest

In educational technology, conflicts emerge in unexpected ways. Consider a school administrator evaluating AI grading systems while their spouse works for a potential vendor. Even unconscious biases in such situations can skew decisions toward particular solutions. Many institutions now mandate annual conflict disclosures, but the most ethical organizations go further by establishing independent review committees for technology procurement.

Case studies from university adoption of proctoring software reveal how financial incentives can override pedagogical concerns. When commission structures influence sales of surveillance tools to educators, the resulting implementations often prioritize institutional convenience over student privacy. Transparency alone isn't enough - we need structural safeguards separating sales processes from educational decision-making.

Balancing Competing Values

The tension between accessibility and rigor illustrates typical value conflicts in AI education tools. An adaptive learning platform might increase engagement for struggling students while potentially reducing challenge for advanced learners. Research from blended learning environments shows that striking this balance requires continuous adjustment rather than one-time solutions.

Environmental concerns add another layer of complexity. While cloud-based AI systems enable remote learning, their energy consumption creates sustainability dilemmas. Some progressive districts now evaluate edtech proposals through dual lenses of educational impact and carbon footprint, recognizing that true ethical practice means considering both immediate learning outcomes and long-term planetary consequences.

Mitigating Algorithmic Bias in Educational AI

Understanding Algorithmic Bias in Educational AI

Bias in educational algorithms often reflects historical inequities rather than intentional discrimination. For instance, speech recognition tools trained primarily on recordings of native English speakers may misrecognize accented speech, disadvantaging multilingual learners. The most pernicious biases are those that replicate existing achievement gaps under the guise of technological neutrality.

Recent studies of college admission algorithms revealed how proxy variables - like zip codes or extracurricular participation - can inadvertently encode socioeconomic status. When these factors influence recommendations, they may systematically disadvantage first-generation applicants. What appears as meritocratic automation often hides deeply embedded structural biases.
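One simple way to screen for such proxies is to check how unevenly a sensitive characteristic is distributed across the values of a candidate feature. The sketch below is illustrative only: the field names `zip` and `first_gen`, the sample records, and the helper `group_rates` are all hypothetical and not drawn from the studies mentioned above.

```python
from collections import defaultdict


def group_rates(records, feature, attribute):
    """Return, for each value of `feature`, the share of records where `attribute` is true."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for row in records:
        totals[row[feature]] += 1
        if row[attribute]:
            positives[row[feature]] += 1
    return {value: positives[value] / totals[value] for value in totals}


# Made-up applicant records; "ZIP_A" and "ZIP_B" are placeholders, not real zip codes.
applicants = [
    {"zip": "ZIP_A", "first_gen": True},
    {"zip": "ZIP_A", "first_gen": True},
    {"zip": "ZIP_A", "first_gen": False},
    {"zip": "ZIP_B", "first_gen": False},
    {"zip": "ZIP_B", "first_gen": False},
    {"zip": "ZIP_B", "first_gen": True},
    {"zip": "ZIP_B", "first_gen": False},
]

rates = group_rates(applicants, "zip", "first_gen")
# A large spread suggests the feature carries information about the attribute.
spread = max(rates.values()) - min(rates.values())
```

A large spread does not prove that a model misuses the feature, but it is a reasonable signal that the feature deserves closer review before it is used in recommendations.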

Data Collection and Preprocessing for Fairness

Effective bias mitigation starts before model training begins. Schools collecting data for adaptive learning systems must ensure representation across gender, race, language proficiency, and learning differences. One urban district achieved this by partnering with special education teachers to include students with IEPs in their training datasets, resulting in more inclusive recommendations.
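A representation check of this kind can be sketched as a comparison between each group's share of the training data and its share of enrollment. Everything here is an assumption made for illustration: the group labels, the counts, the enrollment shares, and the 0.8 tolerance are hypothetical, and a real audit would use the district's own categories and thresholds.

```python
def underrepresented(dataset_counts, population_shares, tolerance=0.8):
    """Flag groups whose share of the dataset falls below tolerance * expected share."""
    total = sum(dataset_counts.values())
    if total == 0:
        raise ValueError("dataset_counts is empty")
    flagged = {}
    for group, expected in population_shares.items():
        observed = dataset_counts.get(group, 0) / total
        if observed < tolerance * expected:
            flagged[group] = (observed, expected)
    return flagged


# Hypothetical numbers: records per group in the training set vs. enrollment shares.
training_counts = {"IEP": 30, "English learner": 150, "other": 820}
enrollment_shares = {"IEP": 0.12, "English learner": 0.15, "other": 0.73}

gaps = underrepresented(training_counts, enrollment_shares)
for group, (observed, expected) in gaps.items():
    print(f"{group}: {observed:.1%} of training data vs. {expected:.1%} of enrollment")
```

In this made-up data, only students with IEPs fall below the tolerance, which is the kind of gap that targeted data collection would aim to close.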

Normalization techniques prove particularly important for assessment algorithms. When standardized test scores from different regions vary substantially in mean performance, direct comparisons of raw scores become misleading. Contextual normalization that accounts for regional educational resources creates fairer benchmarks while still maintaining rigorous standards.
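As a minimal sketch of the idea, the example below standardizes scores within each region (a z-score per region). This is a simplified stand-in, not the method the text describes: adjusting for regional resources would need additional inputs such as funding or staffing data. The region names and scores are hypothetical.

```python
from statistics import mean, pstdev


def normalize_within_region(scores_by_region):
    """Convert raw scores to z-scores relative to each region's own mean and spread."""
    normalized = {}
    for region, scores in scores_by_region.items():
        mu = mean(scores)
        sigma = pstdev(scores)
        # If every score in a region is identical, sigma is 0; map all scores to 0.0.
        normalized[region] = [(s - mu) / sigma if sigma else 0.0 for s in scores]
    return normalized


# Made-up scores: region_b scores 20 points higher across the board.
raw = {"region_a": [60, 70, 80], "region_b": [80, 90, 100]}
z = normalize_within_region(raw)
```

Here a raw score of 80 in `region_a` and a raw score of 100 in `region_b` receive the same normalized value, because each is the top score relative to its own region. Whether removing between-region differences entirely is appropriate is a policy decision, not a technical one.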

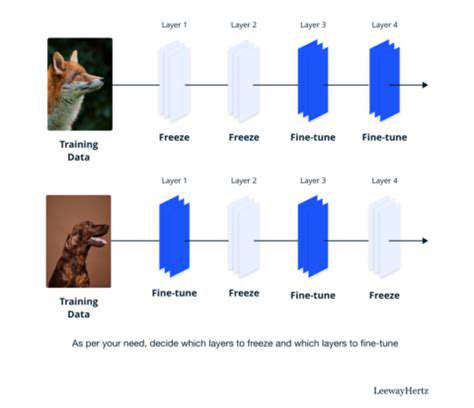

Developing Fair and Equitable AI Models

Innovative approaches like adversarial debiasing show promise in educational applications. These techniques train the model alongside an adversary that tries to infer protected attributes from the model's predictions, and penalize the model whenever the adversary succeeds. In one experiment, this method reduced gender disparities in STEM course recommendations by 40% without sacrificing prediction accuracy.
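A claim about reduced disparity depends on how disparity is measured. One common choice is the demographic parity gap: the difference in recommendation rates between groups. The sketch below shows that measurement only, not adversarial debiasing itself. The field names, helper functions, and data are hypothetical and do not reproduce the experiment mentioned above.

```python
def recommendation_rates(records, group_key="gender", outcome_key="recommended"):
    """Share of students in each group who received the recommendation."""
    counts = {}
    for row in records:
        total, positive = counts.get(row[group_key], (0, 0))
        counts[row[group_key]] = (total + 1, positive + int(row[outcome_key]))
    return {group: positive / total for group, (total, positive) in counts.items()}


def parity_gap(rates):
    """Demographic parity gap: highest group rate minus lowest group rate."""
    return max(rates.values()) - min(rates.values())


def make_records(group, n_total, n_recommended):
    """Build made-up records where the first n_recommended students are recommended."""
    return [{"gender": group, "recommended": i < n_recommended} for i in range(n_total)]


# Hypothetical outputs from a baseline model and a debiased model.
before = make_records("F", 5, 2) + make_records("M", 5, 4)
after = make_records("F", 5, 3) + make_records("M", 5, 4)

gap_before = parity_gap(recommendation_rates(before))
gap_after = parity_gap(recommendation_rates(after))
relative_reduction = (gap_before - gap_after) / gap_before
```

Reporting the gap before and after, along with accuracy on the same held-out data, makes a statement like "reduced disparities without sacrificing accuracy" checkable.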

The most successful implementations combine technical solutions with human oversight. A Midwestern university's hybrid system flags potentially biased recommendations for counselor review, creating a human-in-the-loop safeguard. This approach maintains efficiency while adding crucial ethical oversight.
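How a system decides which recommendations to flag is not specified here. One possible rule, sketched below as an assumption, is a counterfactual check: if changing only a protected attribute changes the recommendation, the case is routed to a counselor. The function names, the student fields, and the deliberately biased toy recommender are all hypothetical.

```python
def needs_review(student, recommend, protected_key, alternatives):
    """Return True if changing only the protected attribute changes the recommendation."""
    baseline = recommend(student)
    for value in alternatives:
        if value == student[protected_key]:
            continue
        variant = {**student, protected_key: value}
        if recommend(variant) != baseline:
            return True
    return False


def toy_recommender(student):
    """Deliberately biased toy model: uses a lower score threshold for one group."""
    threshold = 75 if student["gender"] == "M" else 80
    return "advanced" if student["score"] >= threshold else "standard"


students = [
    {"id": 1, "gender": "F", "score": 77},
    {"id": 2, "gender": "F", "score": 90},
    {"id": 3, "gender": "M", "score": 70},
]

review_queue = [
    s["id"] for s in students
    if needs_review(s, toy_recommender, "gender", ["F", "M"])
]
```

This check only catches direct dependence on the attribute. It will not detect bias that enters through proxy variables, so it complements rather than replaces counselor judgment and aggregate audits.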

Ensuring Data Privacy and Security in Educational AI Applications

Data Minimization and Purpose Limitation

Modern student data practices often collect more information than necessary, creating avoidable risk. A California district reduced its data collection by 60% after realizing it was storing decades-old records with no legal or educational purpose. Its revised policy automatically purges inactive records after two years unless they are specifically retained for legal requirements.
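A retention rule like this can be expressed as a small, testable function. The sketch below assumes each record carries a `last_active` date and an optional `legal_hold` flag. Those field names, the sample records, and the approximation of two years as 730 days are assumptions made for illustration.

```python
from datetime import date, timedelta

# Two years approximated as 730 days; a real policy would define this precisely.
RETENTION = timedelta(days=2 * 365)


def records_to_purge(records, today):
    """Return IDs of records inactive past the retention window and not under legal hold."""
    cutoff = today - RETENTION
    return [
        r["id"]
        for r in records
        if r["last_active"] < cutoff and not r.get("legal_hold", False)
    ]


# Made-up records for illustration.
records = [
    {"id": 1, "last_active": date(2020, 6, 1)},
    {"id": 2, "last_active": date(2020, 6, 1), "legal_hold": True},
    {"id": 3, "last_active": date(2024, 9, 1)},
]

purge_ids = records_to_purge(records, today=date(2025, 1, 1))
```

Separating the selection step from the actual deletion lets staff review the list before anything is removed.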

Purpose limitation becomes especially critical with behavioral analytics. While tracking engagement patterns might improve course design, using the same data for disciplinary purposes creates ethical concerns. Clear data use policies should specify exact purposes and prohibit secondary uses without additional consent.
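A data use policy is easier to enforce when it is written down in a form that software can check. The sketch below is one hypothetical way to do that: the dataset name, the purpose labels, and the `is_use_permitted` helper are invented for illustration and do not correspond to any particular product or regulation.

```python
# Hypothetical policy: each dataset lists the purposes it was collected for.
ALLOWED_PURPOSES = {
    "engagement_events": {"course_design"},
}


def is_use_permitted(dataset, purpose, extra_consents=frozenset()):
    """Allow a use only if it is a declared purpose or has separate, explicit consent."""
    declared = ALLOWED_PURPOSES.get(dataset, set())
    return purpose in declared or (dataset, purpose) in extra_consents


# Declared purpose: permitted.
ok_design = is_use_permitted("engagement_events", "course_design")
# Secondary use without consent: refused.
ok_discipline = is_use_permitted("engagement_events", "discipline")
# Secondary use with additional consent on record: permitted.
ok_with_consent = is_use_permitted(
    "engagement_events",
    "discipline",
    extra_consents=frozenset({("engagement_events", "discipline")}),
)
```

Datasets that are missing from the policy are denied by default, which keeps undeclared data from being used for any purpose.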

Robust Access Controls and Authentication

Role-based access control alone is often too coarse for schools, which need fine-grained permission structures. A teacher might need access to their current students' performance data but not to historical records or colleagues' classes. Attribute-based access control (ABAC) supports this by granting permissions dynamically based on multiple contextual factors, such as time of day or network location.
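An ABAC decision can be sketched as a function that evaluates attributes of the user, the record, and the request context together. The attribute names, the 07:00 to 18:00 window, and the single rule below are hypothetical. Production systems typically use a dedicated policy engine instead of hand-written conditionals.

```python
from dataclasses import dataclass
from datetime import time


@dataclass(frozen=True)
class AccessRequest:
    role: str
    teacher_id: str
    record_teacher_id: str   # teacher currently assigned to the student record
    record_is_current: bool  # False for historical records
    on_school_network: bool
    at: time


def is_allowed(req: AccessRequest) -> bool:
    """Allow teachers to read only their own current students, on site, during school hours."""
    return (
        req.role == "teacher"
        and req.teacher_id == req.record_teacher_id
        and req.record_is_current
        and req.on_school_network
        and time(7, 0) <= req.at <= time(18, 0)
    )


own_class = AccessRequest("teacher", "t1", "t1", True, True, time(10, 30))
colleague_class = AccessRequest("teacher", "t1", "t2", True, True, time(10, 30))
late_night = AccessRequest("teacher", "t1", "t1", True, True, time(23, 0))

decisions = [is_allowed(r) for r in (own_class, colleague_class, late_night)]
```

The same teacher receives different answers depending on whose class the record belongs to and when the request is made, which a role check alone cannot express.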

Multi-factor authentication presents unique challenges in K-12 environments where students may not have personal devices. One innovative solution uses physical security keys stored in classrooms, combined with biometric authentication on shared devices. This approach maintains security while accommodating young users without smartphones.

Data Breach Preparedness and Response

Educational institutions often underestimate their attractiveness as cyber targets. A 2023 study found that schools experience data breaches at twice the rate of similarly sized businesses. Regular tabletop exercises simulating ransomware attacks help IT teams identify gaps in their response plans, particularly in communication protocols with parents.

The most effective response plans designate specific spokespeople and pre-approved messaging templates. When a breach occurs, delayed or inconsistent communications often cause more reputational damage than the breach itself. Preparing these materials in advance ensures timely, accurate information reaches affected parties.