Defining Responsible AI in Educational Contexts

Responsible AI in education requires balancing technological innovation with ethical rigor. Deploying AI tools in classrooms is not enough; we must also ensure that they foster equal access, protect student privacy, and counteract systemic biases. Human oversight remains indispensable for assessing societal impacts and guiding ethical implementation.

Transparency is non-negotiable. When students and teachers comprehend how AI-driven decisions are made, they can collaboratively refine these systems. This openness cultivates trust and ensures AI serves as a supportive partner rather than an opaque authority in learning environments.

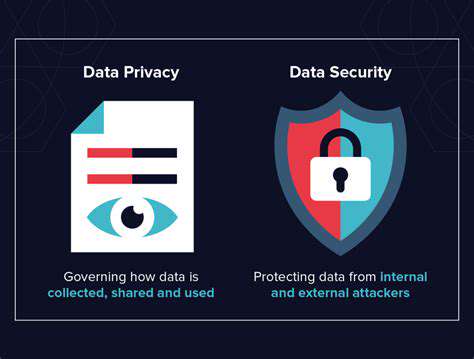

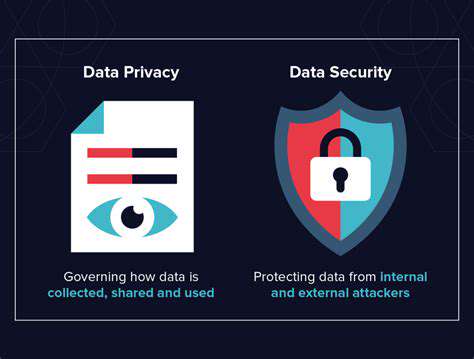

Data Privacy and Security in AI-Powered Learning

Because AI systems process sensitive student data, from grades to behavioral patterns, robust encryption and anonymization are mandatory safeguards. Institutions must establish clear data governance policies and give families the right to access, correct, or erase their information.
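As a minimal sketch of one such safeguard, the Python below pseudonymizes a student record before it reaches an analytics pipeline. It drops direct identifiers (assumed here to be `name` and `email`) and replaces the student ID with a keyed HMAC-SHA256 digest. The field names and key handling are hypothetical. Keyed hashing is pseudonymization, not full anonymization: anyone holding the key can re-link records, and quasi-identifiers such as birth date plus school can still enable re-identification. It therefore complements encryption and access controls rather than replacing them.

```python
import hashlib
import hmac

# Hypothetical field names; a real schema would differ.
DIRECT_IDENTIFIERS = {"name", "email"}


def pseudonymize_record(record: dict, key: bytes) -> dict:
    """Return a copy of `record` with direct identifiers removed and the
    student ID replaced by a keyed HMAC-SHA256 pseudonym."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    digest = hmac.new(key, str(record["student_id"]).encode("utf-8"), hashlib.sha256)
    cleaned["student_id"] = digest.hexdigest()
    return cleaned


if __name__ == "__main__":
    # Illustration only: a real key belongs in a secrets manager, not source code.
    key = b"example-key-for-illustration-only"
    raw = {"student_id": 1042, "name": "A. Student", "email": "a@example.org", "grade": 87}
    print(pseudonymize_record(raw, key))
```

The same ID always maps to the same pseudonym under one key, so records can still be joined for analysis. Rotating or destroying the key breaks that linkage.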

Consent processes should be explicit and free of jargon. Parents deserve straightforward explanations of how student data will be used, including the risks and benefits, because transparency is central to building community trust in educational technology.

Bias Mitigation and Fairness in AI Algorithms

Because AI models inherit biases from their training data, education applications risk amplifying discrimination. For instance, an algorithm trained predominantly on data from urban schools might misinterpret rural students' performance patterns. Proactive measures such as bias-aware dataset curation and algorithmic audits are essential to prevent such outcomes.
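An algorithmic audit of the urban/rural scenario can start by comparing error rates across groups. The sketch below assumes a binary "needs support" prediction. For each group, it computes the false negative rate, meaning the share of students who needed support but were not flagged. A large gap between groups is one warning sign worth investigating. The record format, group labels, and toy data are hypothetical, and a real audit would examine several metrics rather than one.

```python
from collections import defaultdict


def false_negative_rates(records):
    """records: iterable of (group, actual, predicted) with 0/1 labels.
    Returns {group: FNR}, where FNR = missed positives / actual positives.
    Groups with no actual positives are omitted."""
    positives = defaultdict(int)
    missed = defaultdict(int)
    for group, actual, predicted in records:
        if actual == 1:
            positives[group] += 1
            if predicted == 0:
                missed[group] += 1
    return {g: missed[g] / positives[g] for g in positives}


def max_gap(rates):
    """Largest difference in rate between any two groups."""
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Toy data for illustration only: (group, needed_support, model_flagged)
    data = [
        ("urban", 1, 1), ("urban", 1, 1), ("urban", 1, 0), ("urban", 0, 0),
        ("rural", 1, 0), ("rural", 1, 0), ("rural", 1, 1), ("rural", 0, 0),
    ]
    rates = false_negative_rates(data)  # urban: 1 of 3 missed, rural: 2 of 3
    print(rates, max_gap(rates))
```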

Continuous monitoring is equally critical. Regular third-party evaluations can detect emerging disparities, so that AI systems can be adjusted as student demographics and learning methodologies evolve.
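One input to such evaluations is detecting that the student population has shifted away from the data a model was trained on. The sketch below uses the population stability index (PSI), a common drift statistic, to compare the demographic mix at training time with the mix in the current term. The category names and counts are hypothetical. The 0.1 and 0.25 cut-offs are widely used rules of thumb, not formal standards.

```python
import math


def population_stability_index(expected_counts, actual_counts, eps=1e-6):
    """PSI = sum over categories of (a - e) * ln(a / e), where e and a are the
    expected (training-time) and actual (current) proportions."""
    categories = set(expected_counts) | set(actual_counts)
    e_total = sum(expected_counts.values())
    a_total = sum(actual_counts.values())
    psi = 0.0
    for c in categories:
        # eps keeps the log defined when a category is absent on one side
        e = max(expected_counts.get(c, 0) / e_total, eps)
        a = max(actual_counts.get(c, 0) / a_total, eps)
        psi += (a - e) * math.log(a / e)
    return psi


def drift_level(psi):
    # Rule-of-thumb bands, not a formal standard.
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "moderate shift"
    return "major shift"


if __name__ == "__main__":
    training = {"urban": 700, "suburban": 250, "rural": 50}
    this_term = {"urban": 500, "suburban": 250, "rural": 250}
    psi = population_stability_index(training, this_term)
    print(round(psi, 3), drift_level(psi))
```

A "major shift" does not prove the model is unfair to the new population. It signals that the fairness audits should be rerun on current data.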

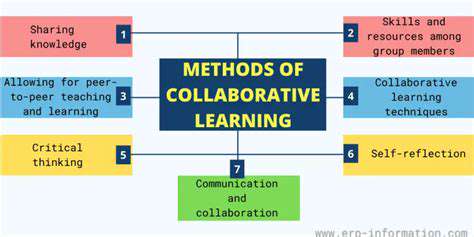

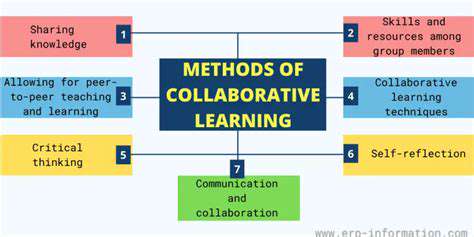

Human Oversight and Intervention in AI-Driven Education

AI should never replace educators but rather augment their expertise. Teachers must retain agency to override algorithmic recommendations when necessary. Professional development programs should equip educators with skills to critically assess AI outputs and integrate them meaningfully into pedagogy.

For example, when an AI tutoring system persistently recommends remedial content to a struggling student, a perceptive teacher might recognize the need for alternative motivational strategies rather than repetitive drills.
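In this scenario, the system can keep the teacher in the loop by escalating instead of repeating itself. The sketch below assumes a hypothetical per-student log of recommendation labels. Once the last `threshold` recommendations have all been remedial, it routes the student to teacher review, and any decision the teacher records takes precedence over the algorithm. The threshold of three and the label names are illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class StudentHistory:
    student_id: str
    # Chronological AI recommendation labels, e.g. "remedial" or "enrichment".
    recommendations: List[str] = field(default_factory=list)
    # Set by a teacher; when present it takes precedence over the AI.
    teacher_override: Optional[str] = None


def needs_teacher_review(history: StudentHistory, threshold: int = 3) -> bool:
    """True when the last `threshold` recommendations were all remedial."""
    recent = history.recommendations[-threshold:]
    return len(recent) == threshold and all(r == "remedial" for r in recent)


def next_step(history: StudentHistory, ai_suggestion: str) -> str:
    """Teacher override wins; otherwise escalate repeated remediation."""
    if history.teacher_override is not None:
        return history.teacher_override
    if needs_teacher_review(history):
        return "refer_to_teacher"
    return ai_suggestion


if __name__ == "__main__":
    h = StudentHistory("s-001", ["remedial", "remedial", "remedial"])
    print(next_step(h, "remedial"))  # refer_to_teacher
    h.teacher_override = "project_based_task"
    print(next_step(h, "remedial"))  # project_based_task
```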

Accessibility and Inclusivity for All Learners

Effective AI tools must accommodate diverse needs, from compatibility with screen readers for visually impaired students to multilingual interfaces for ESL learners. Universal design principles should guide development, with customization options that allow personalization without compromising core functionality.

Avoiding one-size-fits-all solutions is particularly crucial. An AI writing assistant that recognizes only formal academic English might inadvertently penalize students who speak other dialects of English at home.

Addressing Bias and Fairness in AI-Powered Learning Systems

Understanding the Scope of AI Bias

AI systems mirror the imperfections of their training data. Historical admissions data favoring certain demographics, for instance, could lead recommendation algorithms to perpetuate exclusionary practices. Recognizing these patterns enables targeted corrective measures.

Data Collection and Preprocessing Techniques

Curating datasets that reflect the actual demographic diversity of the student population is foundational. When gathering student interaction data, researchers should deliberately include underrepresented groups and document their sampling methodologies for accountability.
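Documenting a sampling methodology can be as concrete as recording how each group's share of the collected data compares with its share of the population being served. The sketch below assumes that population shares are known, for example from enrollment records. It flags any group whose share of the dataset falls below a chosen fraction of its population share. The group names, figures, and the 0.8 ratio are hypothetical.

```python
def representation_report(sample_counts, population_shares, min_ratio=0.8):
    """Compare each group's share of the sample with its population share.

    Returns one dict per group with both shares, their ratio, and whether
    the group is under-represented (ratio < min_ratio)."""
    total = sum(sample_counts.values())
    report = []
    for group, pop_share in sorted(population_shares.items()):
        sample_share = sample_counts.get(group, 0) / total
        ratio = sample_share / pop_share
        report.append({
            "group": group,
            "sample_share": round(sample_share, 3),
            "population_share": pop_share,
            "ratio": round(ratio, 2),
            "under_represented": ratio < min_ratio,
        })
    return report


if __name__ == "__main__":
    collected = {"group_a": 820, "group_b": 130, "group_c": 50}
    population = {"group_a": 0.70, "group_b": 0.18, "group_c": 0.12}
    for row in representation_report(collected, population):
        print(row)
```

Storing this report alongside the dataset gives later reviewers a record of who was, and was not, adequately sampled.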

Algorithmic Design and Evaluation Metrics

Developers must prioritize fairness metrics, such as differences in error rates across groups, during model training and evaluation. Techniques like counterfactual fairness testing, in which outcomes are compared across hypothetical demographic variations of the same record, help identify hidden discriminatory patterns before deployment.
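A simple version of this check flips only the demographic attribute in each record and counts how often the model's decision changes. Counterfactual fairness as defined in the research literature also accounts for features causally influenced by the attribute, which a plain flip ignores. The sketch below is therefore an attribute-flip test, not a full counterfactual analysis. The `model` callable, the `gender` field, and the deliberately biased toy model are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Record = Dict[str, object]


def flip_rate(model: Callable[[Record], int], records: List[Record],
              attribute: str, values: Tuple[object, object]) -> float:
    """Fraction of tested records whose prediction changes when `attribute`
    is swapped between the two given values, all else held fixed."""
    a, b = values
    tested = changed = 0
    for record in records:
        if record[attribute] not in (a, b):
            continue  # only records holding one of the compared values are tested
        tested += 1
        counterfactual = dict(record)
        counterfactual[attribute] = b if record[attribute] == a else a
        if model(record) != model(counterfactual):
            changed += 1
    return changed / tested if tested else 0.0


def biased_model(record: Record) -> int:
    # Toy model that (wrongly) uses gender: same score, different threshold.
    threshold = 60 if record["gender"] == "F" else 50
    return int(record["score"] >= threshold)


if __name__ == "__main__":
    students = [
        {"score": 55, "gender": "F"},
        {"score": 55, "gender": "M"},
        {"score": 80, "gender": "F"},
        {"score": 40, "gender": "M"},
    ]
    print(flip_rate(biased_model, students, "gender", ("F", "M")))
```

A fair model should yield a flip rate of zero here. Any nonzero rate points to records whose outcome depends directly on the attribute.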

Explainable AI (XAI) and Transparency

When an AI system flags a student as at risk, XAI methods should reveal whether the determination stemmed from genuine performance indicators or from potentially biased proxies such as socioeconomic markers.
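For an inherently interpretable model such as logistic regression, one simple explanation is each feature's contribution to the risk score, computed as the coefficient times the feature's standardized value. The sketch below assumes hypothetical feature names and weights. It also assumes the institution has designated certain features as potential proxies, so a reviewer can see how much of a flag rests on them. For non-linear models, attribution methods such as SHAP serve a similar purpose.

```python
import math

# Hypothetical logistic model on standardized features (0 = cohort average).
WEIGHTS = {
    "missed_assignments": 0.9,
    "quiz_average": -1.1,
    "neighborhood_poverty_rate": 0.7,
}
INTERCEPT = -1.0
# Features the institution treats as possible socioeconomic proxies (assumption).
PROXY_FEATURES = {"neighborhood_poverty_rate"}


def explain(student):
    """Return (risk probability, per-feature logit contributions, proxy share).

    Each contribution is weight * standardized value; proxy share is the
    fraction of total absolute contribution coming from proxy features."""
    contributions = {f: w * student[f] for f, w in WEIGHTS.items()}
    logit = INTERCEPT + sum(contributions.values())
    risk = 1.0 / (1.0 + math.exp(-logit))
    total = sum(abs(c) for c in contributions.values())
    proxy = sum(abs(c) for f, c in contributions.items() if f in PROXY_FEATURES)
    return risk, contributions, (proxy / total if total else 0.0)


if __name__ == "__main__":
    student = {"missed_assignments": 0.2, "quiz_average": 0.1,
               "neighborhood_poverty_rate": 1.5}
    risk, contributions, proxy_share = explain(student)
    print(f"risk={risk:.2f}, proxy share={proxy_share:.0%}")
    for feature, c in sorted(contributions.items(), key=lambda kv: -abs(kv[1])):
        print(f"  {feature}: {c:+.2f}")
```

In this toy case, most of the flag comes from the neighborhood feature rather than from coursework. That is the situation the paragraph asks XAI to surface.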

Continuous Monitoring and Evaluation

Institutions should establish bias response teams to regularly assess AI tools using real-world performance data. For example, if an adaptive learning platform shows significant outcome disparities between genders across multiple semesters, immediate recalibration becomes necessary.
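A bias response team can make the recalibration trigger in that example explicit. The sketch below assumes a hypothetical chronological list of per-semester outcome rates by group. It raises an alert only when the gap between groups exceeds a tolerance in each of the last `k` semesters, so a single noisy term does not trigger recalibration on its own. The tolerance and window size are illustrative. Before acting, a team would also want a significance test suited to its sample sizes.

```python
def semester_gaps(semesters):
    """semesters: chronological list of {group: outcome_rate} dicts.
    Returns the max-minus-min gap for each semester, rounded for reporting."""
    return [round(max(s.values()) - min(s.values()), 4) for s in semesters]


def needs_recalibration(semesters, tolerance=0.05, k=3):
    """True if the gap exceeded `tolerance` in each of the last k semesters."""
    gaps = semester_gaps(semesters)
    return len(gaps) >= k and all(g > tolerance for g in gaps[-k:])


if __name__ == "__main__":
    # Hypothetical course pass rates by gender over four semesters.
    history = [
        {"female": 0.78, "male": 0.76},
        {"female": 0.81, "male": 0.72},
        {"female": 0.80, "male": 0.70},
        {"female": 0.82, "male": 0.71},
    ]
    print(semester_gaps(history), needs_recalibration(history))
```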