Understanding Data Bias

Data bias is a pervasive issue in machine learning, stemming from the inherent characteristics of the data used to train algorithms. This bias, often reflecting societal prejudices or historical inaccuracies, can manifest in subtle yet significant ways. For example, if a dataset used to train a facial recognition system predominantly features images of light-skinned individuals, the algorithm may perform poorly when presented with images of darker-skinned individuals. This isn't a failure of the algorithm itself, but a direct consequence of the biased data it was trained on.

Recognizing that data bias is not simply a technical problem, but a social one, is crucial. Addressing it requires a multi-faceted approach that goes beyond simply improving the algorithm's technical performance.

Types of Data Bias

Data bias can manifest in various forms. Sampling bias occurs when the training data doesn't represent the real-world population accurately, leading to skewed results. Measurement bias arises from flawed data collection methods, potentially introducing inaccuracies or inconsistencies. Furthermore, inherent bias can be embedded within the data itself, reflecting existing societal prejudices and inequalities, a particularly insidious form that requires careful consideration.

Understanding the different types of bias is essential for identifying and mitigating their impact on machine learning models.

The Impact of Data Bias

The consequences of data bias in machine learning can be far-reaching and impactful. Biased algorithms can perpetuate and even amplify existing societal inequalities, leading to unfair or discriminatory outcomes. For instance, a biased loan application system could deny loans to individuals from certain demographics, exacerbating economic disparities. Moreover, biased algorithms used in criminal justice systems could lead to wrongful arrests or sentencing, highlighting the grave ethical implications of this issue.

Mitigating Data Bias

Addressing data bias requires a proactive and systematic approach. Data collection methods should prioritize diversity and inclusivity to ensure the representation of various groups and perspectives. Data cleaning and preprocessing techniques can help to identify and correct errors or inconsistencies. Furthermore, algorithmic auditing and evaluation can detect and address potential biases in the model's output.

Ongoing monitoring and evaluation are essential to ensure that biases are not reintroduced as the data evolves over time.

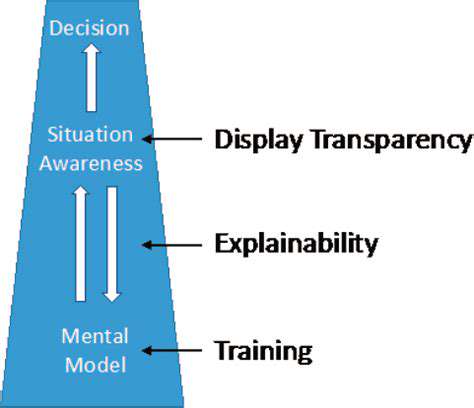

Algorithmic Transparency and Explainability

Transparency and explainability in algorithms are crucial for understanding how they arrive at their decisions. Understanding the reasoning behind an algorithm's output allows for identifying potential biases and enabling corrective measures. This requires developing methods to make the decision-making processes of algorithms more transparent and understandable, going beyond simple black-box predictions.

Ethical Considerations in Data Collection

Ethical considerations are paramount in data collection practices. Data collection should prioritize privacy and informed consent, particularly when dealing with sensitive information. Data should be collected responsibly and ethically, with due consideration for the potential impact on individuals and communities. Moreover, data anonymization and de-identification techniques can help to protect individuals' privacy while still enabling the use of valuable data for training machine learning models.

The Role of Human Oversight

Ultimately, human oversight is essential in the development and deployment of machine learning models. Human experts can play a critical role in identifying and mitigating biases in the data and algorithms, ensuring that they are used responsibly and ethically. This involves ongoing evaluation and scrutiny of the models' outputs and considering the potential societal impacts of their use.

Continuous monitoring and adaptation are crucial to ensure that machine learning systems remain fair and equitable over time.